Counting poker hands

April 11, 2024 at 12:13 PM by Dr. Drang

In this morning’s blog post, John D. Cook talks about poker hands and their probabilities. In particular, he says

For a five-card hand, the probabilities of 0, 1, or 2 pair are 0.5012, 0.4226, and 0.0475 respectively.

Upon reading this, I assumed there’d be an explanation of how these probabilities were calculated. But no, he just leaves us hanging. So I sat down and worked them out.

The probabilities are going to be calculated by dividing the number of ways we can get a certain type of hand by the total number of possible hands. So let’s start by working out the denominator. There are 52 cards, so if we deal out 5 cards, there are

$$\binom{52}{5} = 2{,}598{,}960$$

possible ways to do it. The symbol that looks sort of like a fraction without the dividing line represents the binomial coefficient,

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}$$

This is not only the coefficient of binomial expansions, it’s also the number of ways we can choose $k$ items out of $n$ without regard to order, also referred to as the number of combinations.
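As a quick check on the denominator, here is a minimal Python sketch. It assumes nothing beyond the standard library; the helper name `binomial` is made up for illustration and is compared against the built-in `math.comb`.

```python
from math import comb, factorial

def binomial(n, k):
    """Number of ways to choose k items from n, using the factorial formula."""
    return factorial(n) // (factorial(k) * factorial(n - k))

total_hands = binomial(52, 5)
print(total_hands)                  # 2598960
print(total_hands == comb(52, 5))   # True
```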

To figure out the number of one-pair hands, imagine the deck laid out in 13 piles: a pile of aces, a pile of kings, a pile of queens, and so on. We then do the following:

- Pick one of the piles. There are 13 ways to do this.

- From that pile, choose 2 cards. There are $\binom{4}{2} = 6$ ways to do this.

- Pick three other piles. There are $\binom{12}{3} = 220$ ways to do this.

- Pick one card from each of these three piles. Since there are 4 cards in each pile, there are $4^3 = 64$ ways to do this.

So the total number of one-pair hands is

$$13 \cdot 6 \cdot 220 \cdot 64 = 1{,}098{,}240$$

and the probability of getting a one-pair hand is

$$\frac{1{,}098{,}240}{2{,}598{,}960} = 0.42257$$
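The one-pair count can be reproduced step by step in a few lines of Python. This is only a sketch of the pile-picking argument; the variable names are invented for the example.

```python
from math import comb

total_hands = comb(52, 5)

pair_rank = 13               # which pile supplies the pair
pair_cards = comb(4, 2)      # which 2 of that pile's 4 cards
other_ranks = comb(12, 3)    # three other piles
other_cards = 4 ** 3         # one card from each of those three piles

one_pair = pair_rank * pair_cards * other_ranks * other_cards
p_one_pair = one_pair / total_hands
print(one_pair, round(p_one_pair, 5))   # 1098240 0.42257
```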

Moving on to two-pair hands, we start with the same 13 piles we had before. We then do the following:

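- Pick two of the piles. There are $\binom{13}{2} = 78$ ways to do this.

- Pick two cards from each of these piles. There are $\binom{4}{2}^2 = 36$ ways to do this.

- At this point, any of the remaining 44 cards will give us a two-pair hand.

Therefore, the total is

$$78 \cdot 36 \cdot 44 = 123{,}552$$

and the probability of drawing a two-pair hand is

$$\frac{123{,}552}{2{,}598{,}960} = 0.04754$$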
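The same kind of check works for two-pair hands. Again, this is a sketch with illustrative variable names, not part of the original calculation.

```python
from math import comb

total_hands = comb(52, 5)

pair_ranks = comb(13, 2)       # the two piles that supply the pairs
pair_cards = comb(4, 2) ** 2   # 2 of 4 cards from each of those piles
fifth_card = 52 - 2 * 4        # any card from the other 11 piles

two_pair = pair_ranks * pair_cards * fifth_card
p_two_pair = two_pair / total_hands
print(two_pair, round(p_two_pair, 5))   # 123552 0.04754
```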

Counting the number of no-pair hands is a little trickier because we have to make sure we don’t mistakenly count better hands, i.e., straights and flushes. Starting again with our 13 piles, we do the following:

- Pick 5 piles. There are $\binom{13}{5} = 1{,}287$ ways to do this.

- Eliminate all the straights from this number. Counting the straights is most easily done through simple enumeration:

  A–5, 2–6, 3–7, 4–8, 5–9, 6–10, 7–J, 8–Q, 9–K, 10–A

  So 10 of our 1,287 possible pile selections will give us a straight. That leaves 1,277 non-straight possibilities.

- Pick one card from each of the selected piles. That gives $4^5 = 1{,}024$ possibilities.

- Eliminate the flushes from this set of possibilities. There are 4 flushes, leaving 1,020 possibilities.

These steps give us

$$1{,}277 \cdot 1{,}020 = 1{,}302{,}540$$

hands that are worse than one-pair. The probability of getting such a hand is

$$\frac{1{,}302{,}540}{2{,}598{,}960} = 0.50118$$

I kept one more digit in my answers than John did, but they all match.
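For the no-pair count, the straights and flushes can be eliminated by brute force rather than by hand. The sketch below enumerates the pile selections and the suit assignments separately, which keeps the loops tiny. The rank numbering (1 for the ace up to 13 for the king) and the helper `is_straight` are assumptions made for this example.

```python
from itertools import combinations, product
from math import comb

ranks = range(1, 14)   # 1 = ace, 11 = jack, 12 = queen, 13 = king

def is_straight(piles):
    """True if five distinct ranks are consecutive, with the ace high or low."""
    piles = sorted(piles)
    if piles == [1, 10, 11, 12, 13]:   # 10-J-Q-K-A
        return True
    return piles[4] - piles[0] == 4

# Five different piles that do not form a straight
pile_choices = [c for c in combinations(ranks, 5) if not is_straight(c)]

# One suit per chosen pile, excluding the four all-one-suit assignments
suit_choices = [s for s in product("CDHS", repeat=5) if len(set(s)) > 1]

no_pair = len(pile_choices) * len(suit_choices)
p_no_pair = no_pair / comb(52, 5)
print(len(pile_choices), len(suit_choices), no_pair, round(p_no_pair, 5))
# 1277 1020 1302540 0.50118
```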

My 2024 Eclipse

April 9, 2024 at 7:47 PM by Dr. Drang

I drove down to Vincennes, Indiana, yesterday to see the eclipse, and everything was just about perfect—some through planning and some through serendipity. Here’s a brief review.

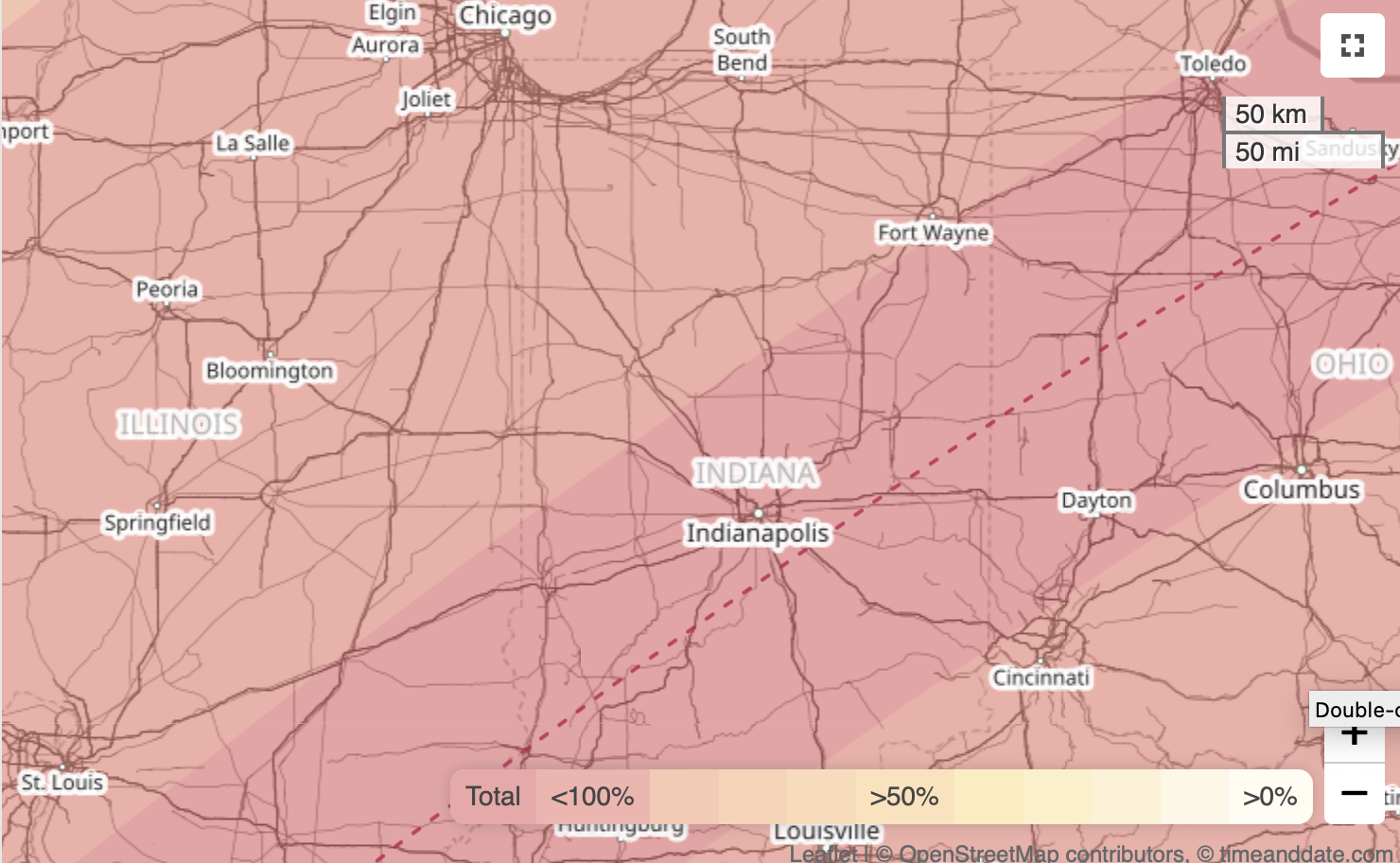

Vincennes was not my first choice. Using the eclipse path map at Time and Date, I looked for the shortest distance from Naperville, Illinois, to the path and figured I’d shoot for a rural area near Indianapolis.

As Eclipse Day got closer, I began to have second thoughts. Two reasons:

- Traffic before and after the 2017 eclipse—which I saw with my wife and sons in southern Illinois—was a nightmare. Going near a decent-sized city ringed by a set of interstate highways seemed like a bad idea.

- Weather reports for the Indianapolis area were a little sketchy. Apple’s Weather app said it would be cloudy in the morning turning to partly cloudy by the time of the eclipse. “Partly cloudy” covers a wide range of cloud cover. Various weather websites also predicted partly cloudy skies, with less cloud cover as you moved southeast along the eclipse path.

So I started looking into Vincennes. It’s about an hour farther than Indianapolis under normal driving conditions, but I could get there via state and US highways instead of interstates, so I figured it was unlikely to attract as many people as the Indianapolis area. And it was supposedly less likely to have clouds.

Ultimately, it was a game-day decision. When I woke up on Monday morning and looked at the forecasts for Indianapolis and Vincennes, Vincennes seemed like it would have slightly better weather. And when I left, sometime between 5:30 and 6:00 in the morning, my route to Vincennes showed no delays. I didn’t truly trust the traffic because I wouldn’t expect people to be crowding the roads until I was two or three hours into my trip.

The drive went very well. I worked my way south and east to US 41 in Indiana (it became an hour later when I crossed the state line) and headed south. As I headed down through the land of windmills, Trump signs, and dollar stores, the traffic was light and the sun was bright. Somewhere—I think it was a bit north of Terre Haute—there was a billboard encouraging everyone to go to Vincennes for the eclipse, but there didn’t seem to be that many people following its directions.

I rolled into Vincennes around 11:30, desperate to use a bathroom. I stopped at a McDonalds and then felt obligated to eat there after using the facilities. Here’s where the serendipity struck. As I was eating (worst fries I’ve ever had at a McDonalds—remind me to tell you about the glorious fries I once had at Hamburger University), I started looking on my iPhone for a park nearby where I could kill some time before the eclipse, which wouldn’t get started for over two hours.

A website describing Kimmel Park on the north side of town, right along the Wabash River, said it had 1.8 miles of walkways. This appealed to me, as I’d missed my morning walk. For no particular reason, I opened the eclipse path page at Time and Date and zoomed in as far as I could. The centerline of the path went right through Kimmel Park.

You see, I had not been planning to stay in Vincennes for the eclipse. It had looked to me as though the path went north of town (which it kind of does), so I was going to find a spot alongside a county road north and east of town, between Vincennes and Bruceville, for watching the eclipse. But now I had a better place, assuming the park wasn’t overrun with visitors.

It wasn’t. There were well-marked lots with free parking and mostly empty spots near the park. And while the park was far from empty, it wasn’t hard to find a place to settle in after I’d walked around it once. (While I’m sure the “1.8 miles of walkway” is accurate, that must be if you take every last branch, possibly doubling back on yourself.)

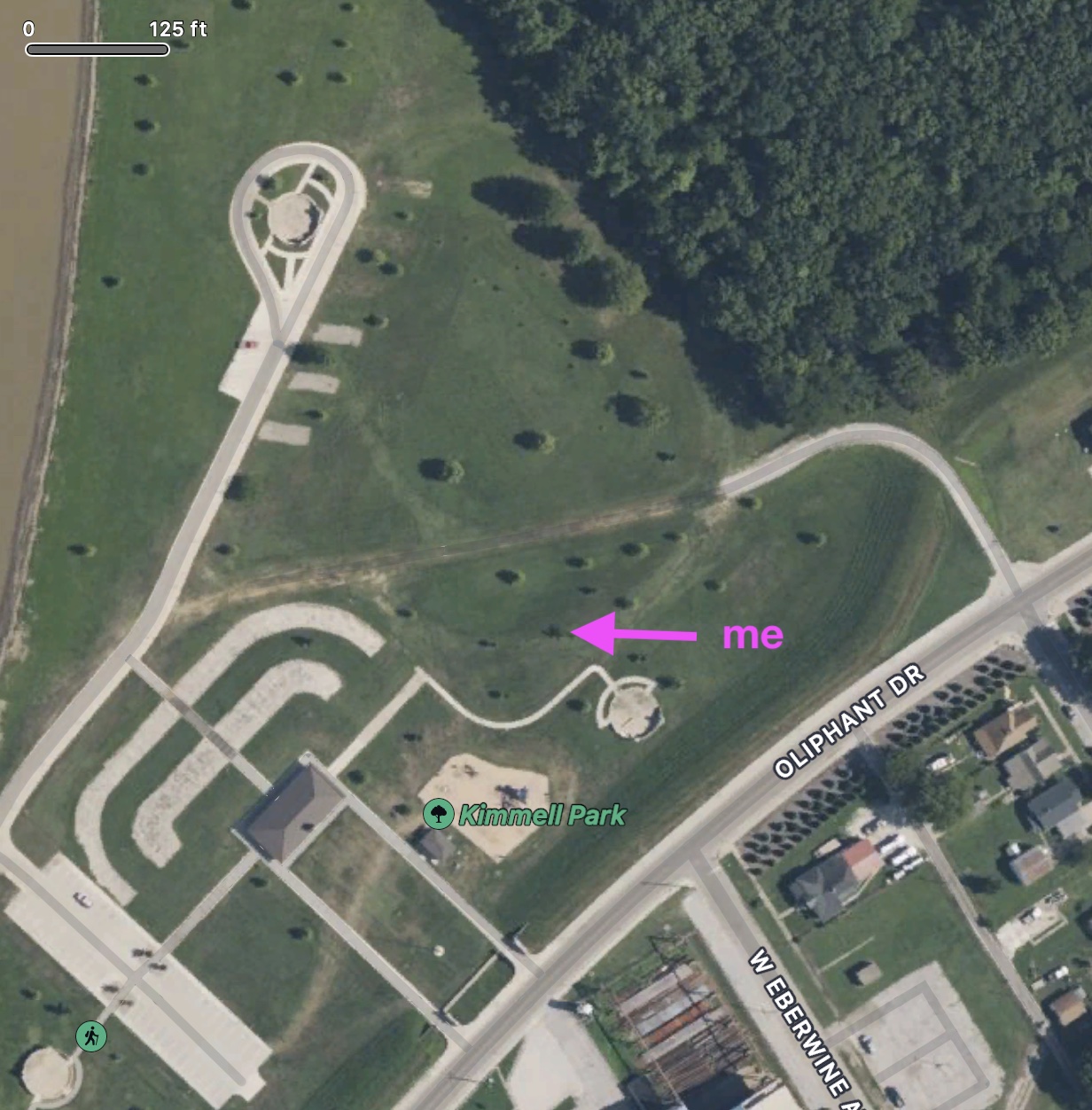

Here’s where I parked and where I sat for the eclipse.

I used the location data for a photo I took to mark the exact location.

Honestly, I think I was at the next tree north and east of where my photo said I was, but I don’t want to argue with GPS.

How does this compare with the centerline of the eclipse path? This morning, I opened Mathematica and used its new SolarEclipse function to mark the path on a map that’s more zoomed-in than I could get with Time and Date:

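    (* The total solar eclipse of April 8, 2024 *)
    eclipse = SolarEclipse[DateObject[{2024, 4, 8}]];

    (* Draw the shadow's centerline in red on a map centered on the given coordinates *)
    GeoGraphics[{Red, SolarEclipse[eclipse, "ShadowAxisLine"]},
     GeoCenter -> GeoPosition[{38.69715, -87.51673}],
     GeoRange -> Quantity[250, "Meters"], GeoScaleBar -> "Feet",
     ImageSize -> Large]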

Apparently, the park district has changed the routing of the paved areas, but you can see that I was no more than 300–400 feet from the centerline. Recent rains had left the ground and some of the pavement wet by the peak of the triangle, and the shady areas near the treeline due north of me were already occupied. So I was satisfied with my spot.

There were some thin, wispy clouds, but it was obvious well before the eclipse started that the view would be fantastic. And it was. During the last 10–15 minutes or so before totality, I did nothing but look at the sun (through the glasses I’d kept from 2017), watching as the thin arc of sun got shorter until it disappeared entirely. I pulled off my glasses as a cheer went up and looked into the deep black of the moon surrounded by the white corona.

I did take a quick iPhone photo during totality, more as a reminder than anything else.

Patrick MaCarron—who was also in Vincennes, but I don’t know where—took a great photo of the eclipse, and you should follow the link to see it. It shows two red prominences: a large one near the bottom and a smaller one along the right edge. I saw the one near the bottom with my naked eye; it was a sharp red dot at the bottom of the black circle. I was unsure if I’d really seen it until I saw Patrick’s photo later on Mastodon.

I also found Jupiter (to the right) and Venus (to the left). I didn’t see Mars, Mercury, or that comet that was supposed to be at the edge of unaided visibility. This, I think, is where those wispy clouds came into play. I could see them around Jupiter, sort of how you see clouds on a moonlit night.

I hung around for a while after totality, but watching the Moon retreat isn’t as fun as watching it advance. As I walked back to the park entrance, I stopped to take photos of my shadow on the sidewalk. I made a sort of pinhole by squeezing tight an OK sign. You’ll have to zoom in to see the crescent coming through the small aperture.

Google Maps routed me through Champaign, Illinois, on my way home, so I ordered a Papa Del’s pizza when I was about an hour away and took it home for a late dinner. I got home just as the Men’s National Championship game was starting. I watched it while eating pizza—the perfect end to a perfect day.

Moment diagrams for continuous beams

April 6, 2024 at 1:21 PM by Dr. Drang

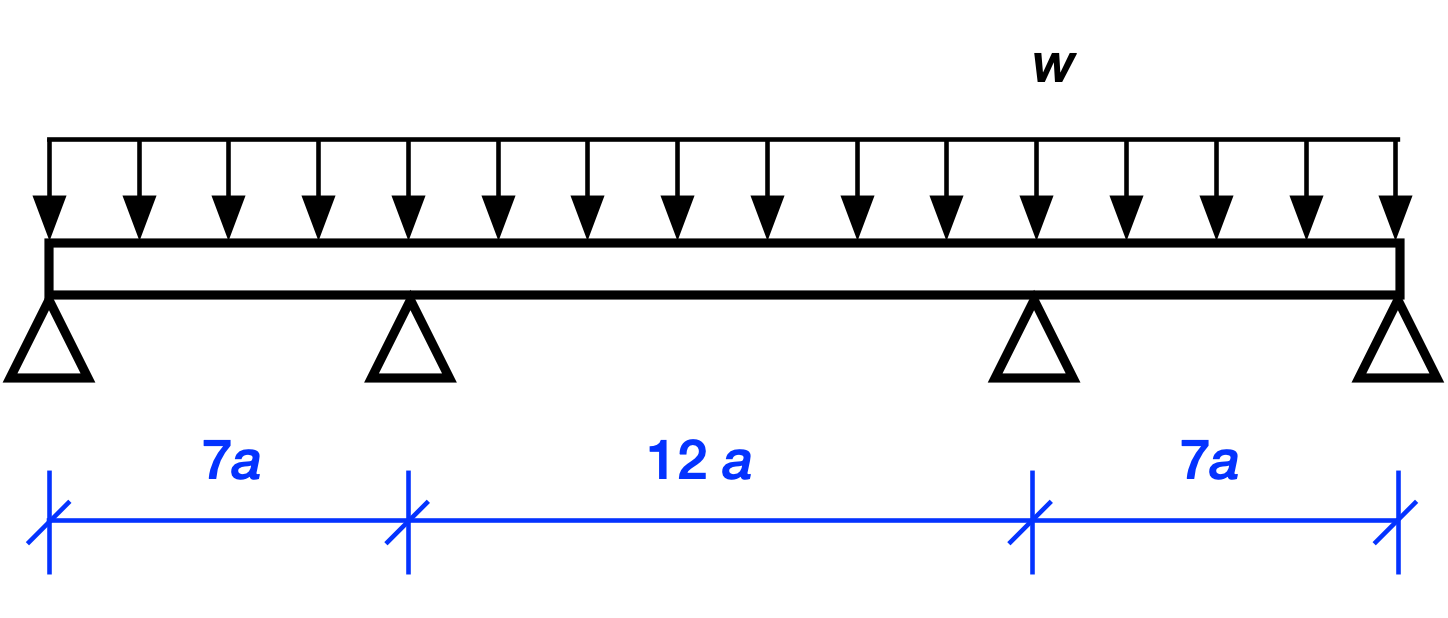

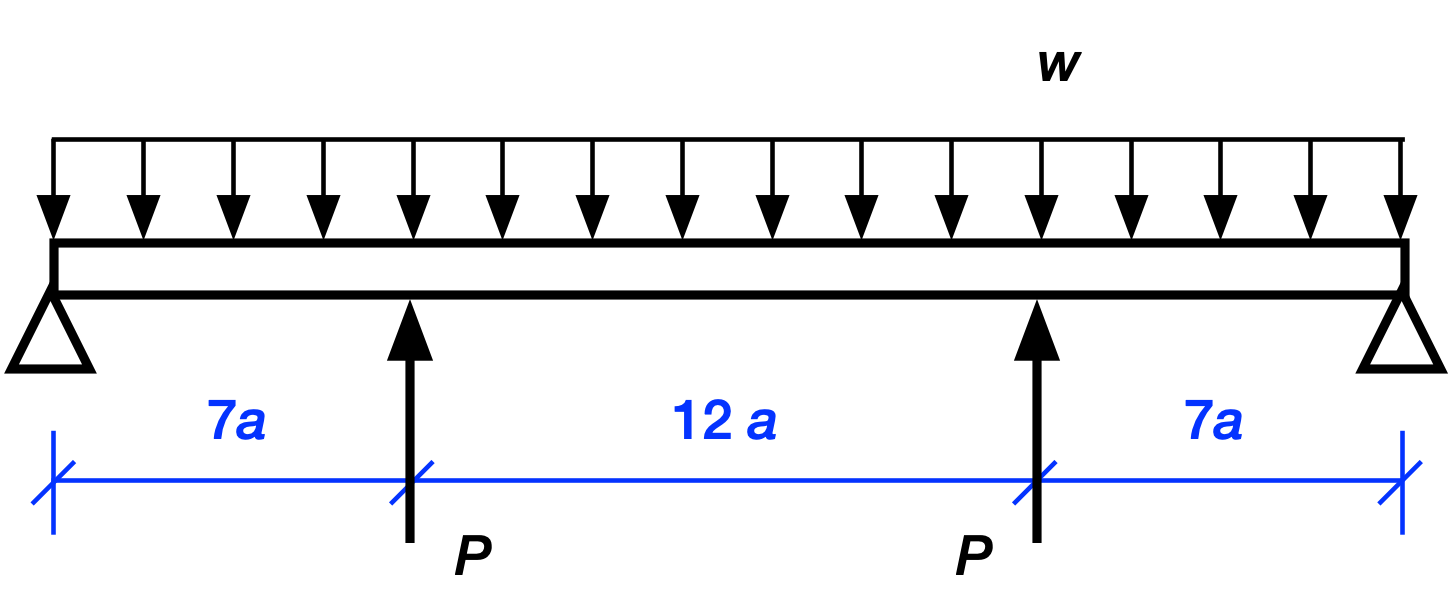

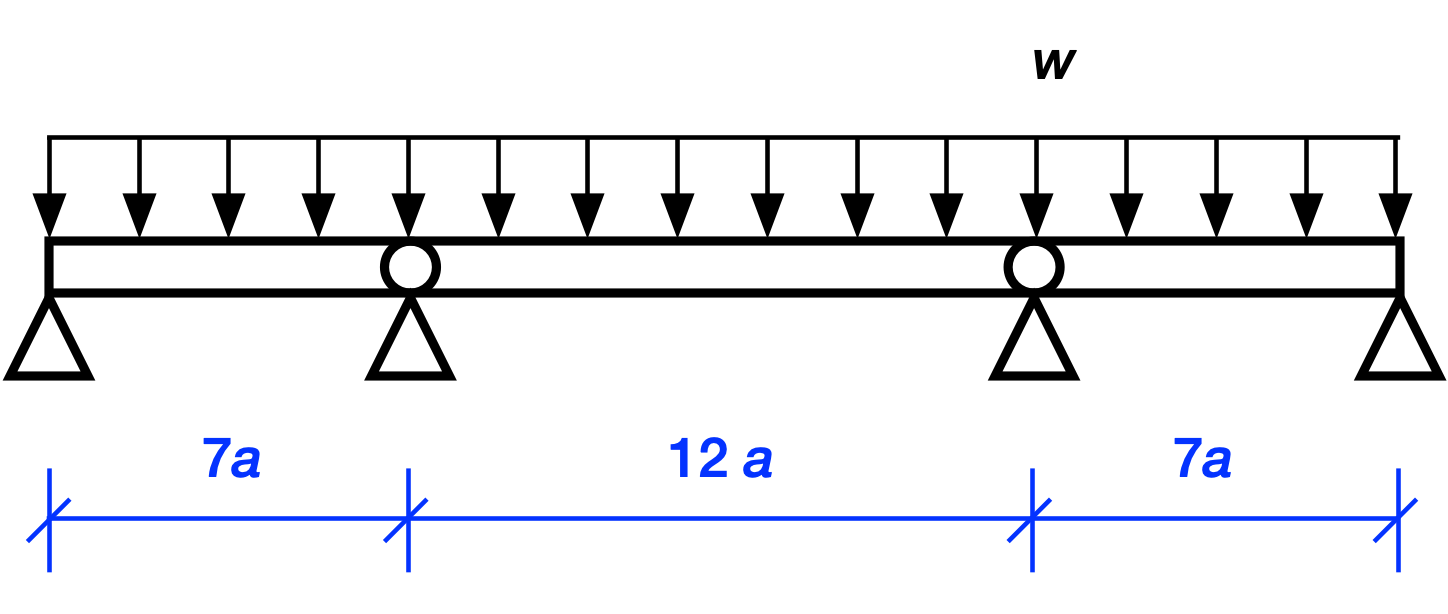

As promised last time, we’ll now work out the solution for this three-span continuous beam with a uniform distributed load.

Because there are four unknown reaction forces at the supports and only two equations of statics,¹ we can’t solve for the reactions by statics alone. This means the structure is statically indeterminate. And because there are two more unknowns than equations, we say that it has two degrees of indeterminacy.

But wait, can’t we use symmetry in addition to statics to solve for the reactions? After all, symmetry tells us that the two interior support reactions must be equal and the two exterior support reactions must also be equal.

Let’s see what we can do with this. Call each exterior reaction $R_e$ and each interior reaction $R_i$. The equations of equilibrium for vertical loading and the moment about the left support are

$$2R_e + 2R_i - 26wa = 0$$

$$7a\,R_i + 19a\,R_i + 26a\,R_e - 13a\,(26wa) = 0$$

These may look like two equations, but they really aren’t. After some algebra, they become

$$R_e + R_i = 13wa$$

$$R_e + R_i = 13wa$$

which are the same equation. So our two equations of statics aren’t independent and we really have one equation with two unknowns. That’s only one degree of indeterminacy, which is an improvement, but still isn’t enough to solve the problem through statics alone. And if you think you can get an independent equation by taking moments about another point, go ahead and try. I’ll be here when you come back.
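The dependence of the two statics equations can be confirmed numerically. The sketch below works in units where $w = a = 1$ and uses `R_e` and `R_i` for the exterior and interior reactions; those labels, and the tuple layout of each equation, are choices made for this example only. The span lengths (7, 12, and 7) are taken from the limits in the Mathematica code later in the post.

```python
from fractions import Fraction

# Units with w = a = 1, so forces are multiples of w*a.
# Each equation is (coefficient of R_e, coefficient of R_i, right-hand side).
# Vertical equilibrium: 2 R_e + 2 R_i = 26
vertical = (Fraction(2), Fraction(2), Fraction(26))
# Moments about the left support: 26 R_e + (7 + 19) R_i = 13 * 26
moment = (Fraction(26), Fraction(7 + 19), Fraction(13 * 26))

def normalize(eq):
    """Divide an equation through by its first coefficient."""
    return tuple(term / eq[0] for term in eq)

determinant = vertical[0] * moment[1] - vertical[1] * moment[0]
print(normalize(vertical) == normalize(moment))   # True
print(determinant)                                # 0
```

A zero determinant means the pair of equations cannot be solved for both unknowns, which is the one remaining degree of indeterminacy.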

To solve this problem—and to solve indeterminate problems in general—we have to account for the stiffness of the structure. There are many ways of doing this. If it were 1980 and I were still an undergraduate, I’d probably use moment distribution, because that was the standard way to do problems by hand back then. I suspect that most engineers today would use the finite element method. That’s the most practical solution, but letting your computer do all the work is no fun.

The fun way is to take advantage of symmetry and use Castigliano’s Second Theorem. Alberto Castigliano was a 19th-century Italian engineer whose 1873 thesis, Intorno ai sistemi elastici, or Regarding elastic systems,² included his theorems and their proofs. You probably haven’t heard of Castigliano (he’s no Newton or Euler or Bernoulli), but there aren’t many dissertations whose findings are still being taught a century and a half later.

Castigliano’s Second Theorem is based on work and energy principles. Say we have a linearly elastic structure, that is, one where the deflection is a linear function of the loading and the structure springs back when the loads are removed. If we have a set of concentrated external loads acting on the structure, $P_i$, we can express the strain energy, $U$, as a function of those loads. Then the deflections, $\delta_i$, of the points of load application in the directions of the $P_i$ can be determined from the partial derivatives of the strain energy with respect to the loads:

$$\delta_i = \frac{\partial U}{\partial P_i}$$

The strain energy in a beam can be written in several ways. When solving problems by Castigliano’s Second Theorem, we write it in terms of the bending moment like this:

$$U = \int \frac{M^2}{2EI}\,dx$$
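To see the theorem at work on something simpler than the three-span beam, here is a small Python sketch for a cantilever with a single load at its tip. This example is not from the post; the cantilever, the numerical values, and the function names are all assumptions chosen for illustration. It integrates $M^2/(2EI)$ numerically, differentiates with respect to the load, and compares the result with the textbook tip deflection $PL^3/(3EI)$.

```python
def strain_energy(P, L, EI, n=2000):
    """Integral of M^2 / (2 EI) along a cantilever with tip load P (midpoint rule)."""
    dx = L / n
    total = 0.0
    for i in range(n):
        x = (i + 0.5) * dx    # distance from the loaded tip
        M = -P * x            # bending moment at x
        total += M**2 / (2 * EI) * dx
    return total

def tip_deflection(P, L, EI, h=1e-3):
    """Castigliano: deflection under the load is dU/dP (central difference)."""
    return (strain_energy(P + h, L, EI) - strain_energy(P - h, L, EI)) / (2 * h)

P, L, EI = 3.0, 2.0, 5.0
castigliano = tip_deflection(P, L, EI)
textbook = P * L**3 / (3 * EI)
print(castigliano, textbook)
```

The two numbers agree to within the error of the midpoint rule, which is the whole content of the theorem for this one-load case.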

That’s the theory. Now let’s talk about the practice.

The strategy when using Castigliano’s Second Theorem to solve a statically indeterminate problem is the following:

1. Remove the “extra” supports, i.e., the ones that are making the structure indeterminate. Since any of the supports could be considered extra, you have some freedom here. With experience, you’ll choose supports that make your calculations easy.

2. Replace the supports with the forces (as yet unknown) that act at those supports.

3. Write out an expression for the strain energy in terms of the replacement forces.

4. Take the partial derivatives of the strain energy with respect to those forces.

5. Set the partial derivatives to zero because the displacements at the supports that were removed are zero, i.e., $\partial U / \partial P_i = 0$.

6. Solve the equations in Step 5 for the replacement forces.

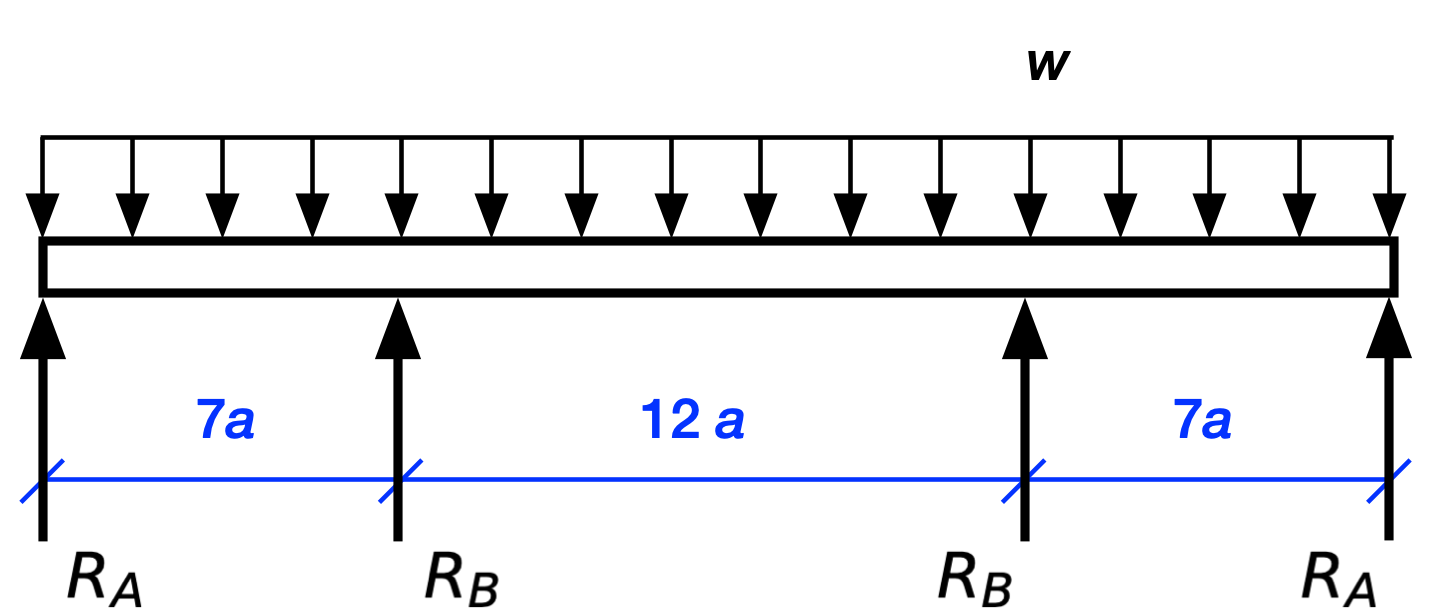

The procedure is easier to show than it is to tell. Here’s our structure with the interior supports replaced by forces.

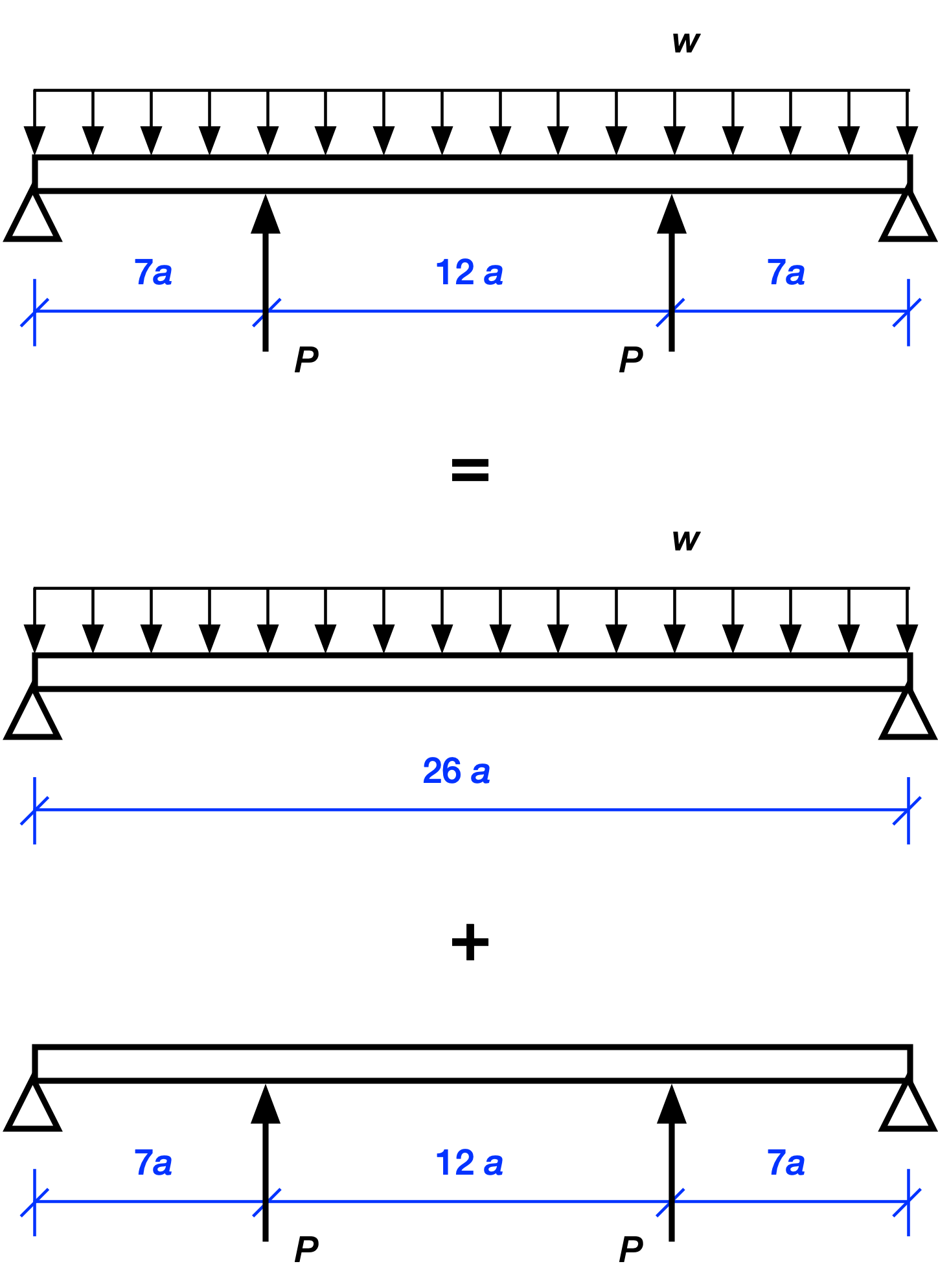

The two interior concentrated loads are equal because of symmetry. Since this is a linearly elastic structure, we can use superposition to analyze this complex loading. That is, we can break the complex loading into two or more simpler loading conditions that are easy to solve. After solving for the simpler loads, we add them together to get the solution for the complex loading. In graphical language, we do this:

Let’s start with the reactions at the ends. Clearly, the upward reaction forces at the end supports are

$$13wa - P$$

where the first term is what the reaction would be if only the uniformly distributed load were acting and the second term is what the reaction would be if only the two interior point loads were acting. The sign of the $P$ term is negative because the reactions from the $P$s alone would be downward.

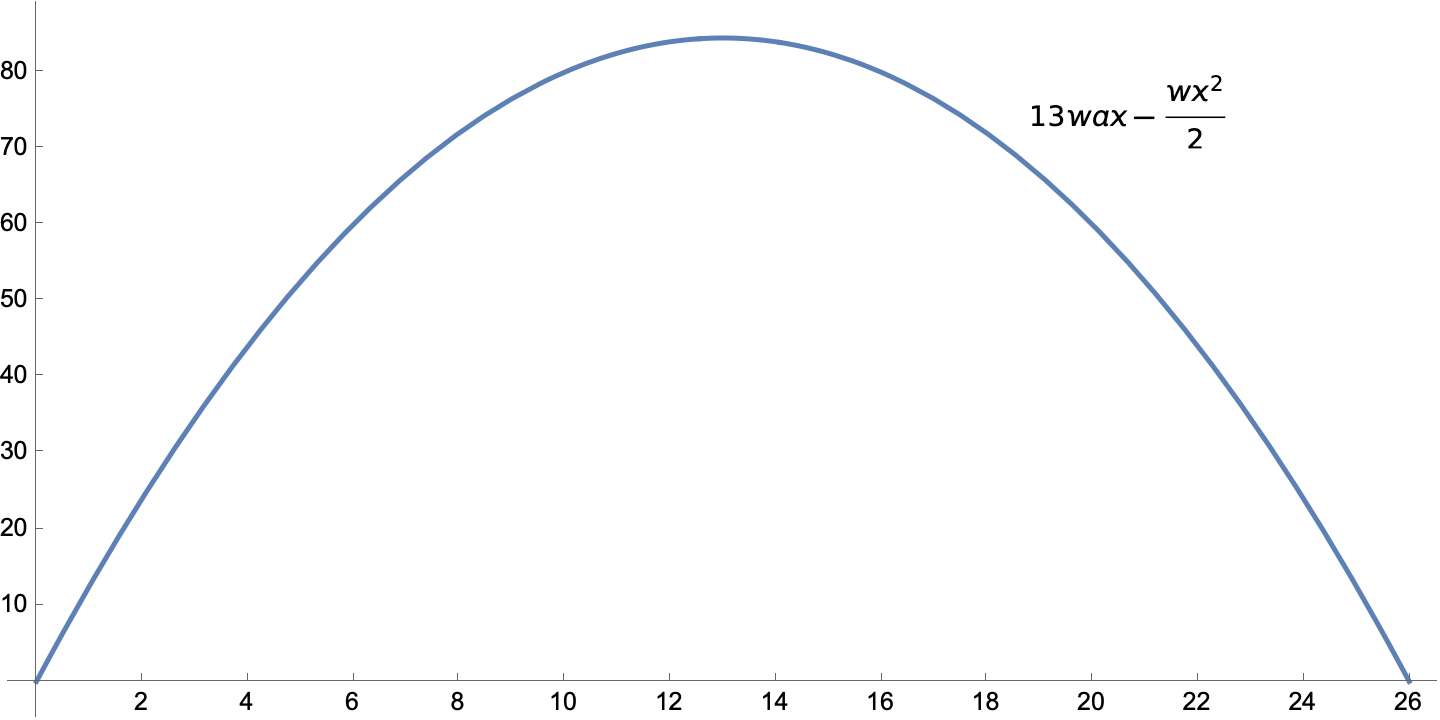

The moment diagrams for the two simpler load conditions are this for the uniformly distributed load

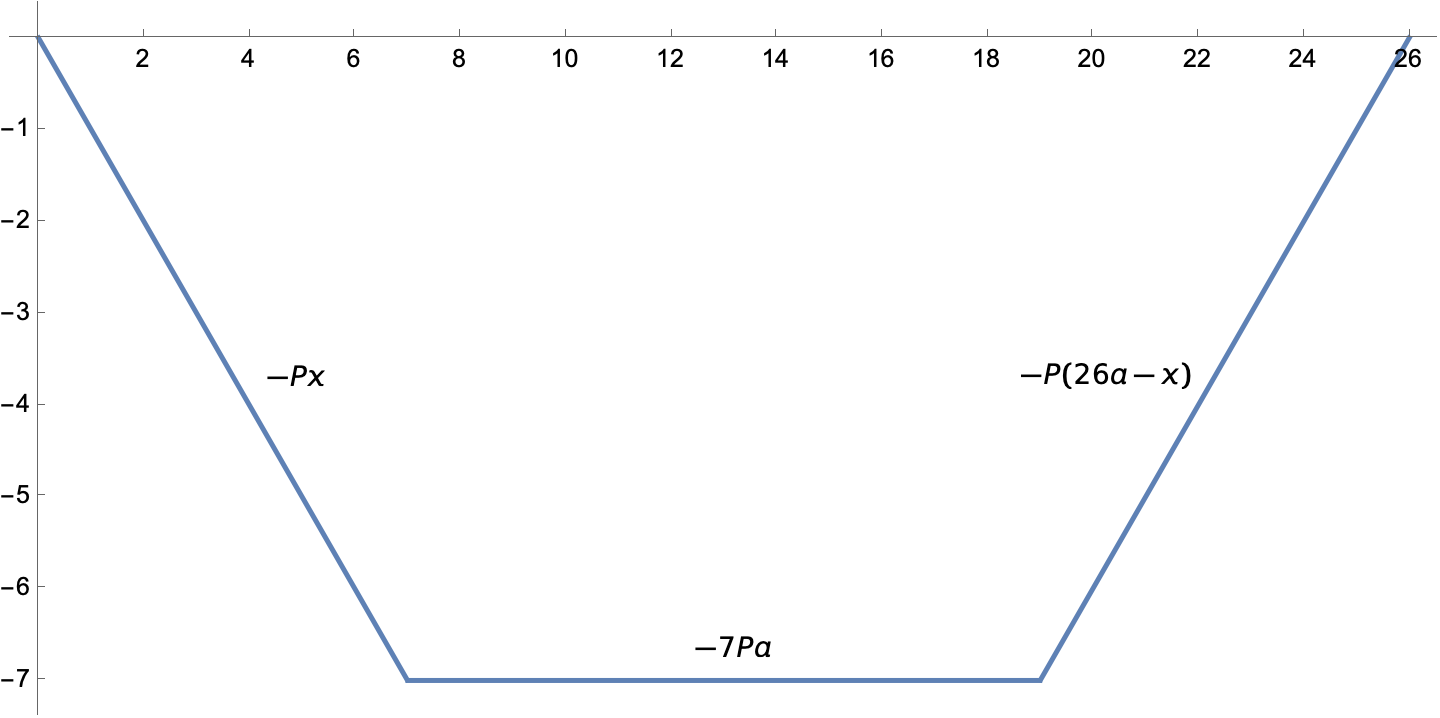

and this for the two concentrated loads.

Concentrated loads put kinks in the moment diagram.

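When we add the two moments together, we get this piecewise expression:

$$M = \begin{cases} 13wax - \dfrac{wx^2}{2} - Px & 0 \le x \le 7a \\[1ex] 13wax - \dfrac{wx^2}{2} - 7aP & 7a \le x \le 19a \\[1ex] 13wax - \dfrac{wx^2}{2} - (26a - x)P & 19a \le x \le 26a \end{cases}$$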

For each piece, the first two terms are due to the distributed load and the last term is due to the concentrated loads.

With the bending moment defined in terms of the unknown, $P$, we can use the equation above to get the strain energy. I’m not going to pretend I did it by hand; here’s the Mathematica code,

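    (* Bending moment in each span; the last term comes from the interior forces P *)
    M1 = 13*w*a*x - w*x^2/2 - P*x;
    M2 = 13*w*a*x - w*x^2/2 - 7*a*P;
    M3 = 13*w*a*x - w*x^2/2 - (26 a - x)*P;

    (* Strain energy: integrate M^2/(2 EI) over each span and add the pieces *)
    U = Integrate[M1^2/(2*EI), {x, 0, 7*a}] +
      Integrate[M2^2/(2*EI), {x, 7*a, 19*a}] +
      Integrate[M3^2/(2*EI), {x, 19*a, 26*a}]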

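which gives us a strain energy of

$$U = \frac{1225\,a^3 P^2}{3\,EI} - \frac{107597\,a^4 P w}{12\,EI} + \frac{742586\,a^5 w^2}{15\,EI}$$

When you give Mathematica integer input, it gives you integer or rational output, hence the large numbers in the numerators.

Taking the partial derivative of this with respect to $P$ and setting it to zero,

    soln = Solve[D[U, P] == 0, P]

gives us

$$P = \frac{15371}{1400}\,wa \approx 10.98\,wa$$

We plug this back into the expression for $M$ and get

$$M = \begin{cases} \dfrac{2829}{1400}\,wax - \dfrac{wx^2}{2} & 0 \le x \le 7a \\[1ex] 13wax - \dfrac{wx^2}{2} - \dfrac{15371}{200}\,wa^2 & 7a \le x \le 19a \\[1ex] \dfrac{33571}{1400}\,wax - \dfrac{wx^2}{2} - \dfrac{199823}{700}\,wa^2 & 19a \le x \le 26a \end{cases}$$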
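The Mathematica result can be cross-checked with exact rational arithmetic in Python. The sketch below is not the author's method: it works in units where $w = a = EI = 1$, writes the moment in each span as $M = q(x) - P\,g(x)$, and uses the fact that $U$ is a quadratic in $P$, so setting $\partial U/\partial P = 0$ only needs two integrals. The helper names `poly_mul` and `poly_int` are invented for the example.

```python
from fractions import Fraction

def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists (lowest power first)."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def poly_int(p, lo, hi):
    """Exact definite integral of a polynomial from lo to hi."""
    def antiderivative(x):
        return sum(c * Fraction(x) ** (k + 1) / (k + 1) for k, c in enumerate(p))
    return antiderivative(hi) - antiderivative(lo)

# Distributed-load part of the moment, the same in every span: 13 x - x^2 / 2
q = [Fraction(0), Fraction(13), Fraction(-1, 2)]

# (g, lower limit, upper limit) for each span, where M = q - P * g
spans = [
    ([Fraction(0), Fraction(1)], 0, 7),       # g = x
    ([Fraction(7)], 7, 19),                   # g = 7
    ([Fraction(26), Fraction(-1)], 19, 26),   # g = 26 - x
]

# U = c0 + c1 * P + c2 * P^2; only c1 and c2 matter for dU/dP = 0
c1 = sum(-poly_int(poly_mul(q, g), lo, hi) for g, lo, hi in spans)
c2 = sum(poly_int(poly_mul(g, g), lo, hi) / 2 for g, lo, hi in spans)

P = -c1 / (2 * c2)
print(c2, c1, P, float(P))
```

The coefficients come out as 1225/3 and -107597/12, and $P$ as 15371/1400, or about 10.98 in units of $wa$.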

To plot the moment diagram in Mathematica, we do what we did last time: divide the moment expressions by $wa^2$ and introduce a nondimensional length variable, $u = x/a$. The code is

    (* Divide by w a^2, then substitute the solved P and x = u a *)
    m1 = M1/(w*a^2) /. {soln[[1]][[1]], x -> u*a};
    m1 = Simplify[m1];
    m2 = M2/(w*a^2) /. {soln[[1]][[1]], x -> u*a};
    m2 = Simplify[m2];
    m3 = M3/(w*a^2) /. {soln[[1]][[1]], x -> u*a};
    m3 = Simplify[m3];

    (* Plot the three pieces as one moment diagram *)
    Plot[Piecewise[{{m1, u <= 7}, {m2, 7 < u <= 19}, {m3, u > 19}}], {u, 0, 26},
     ImageSize -> Large, PlotStyle -> Thick,
     Ticks -> {Table[t, {t, 0, 26, 2}], Table[t, {t, -12, 10, 2}]}]

where again the Piecewise function lets us split the function being plotted into pieces. Note also that we’re using the soln expression that came from the solution for $P$ to plug that value into the various moment expressions.

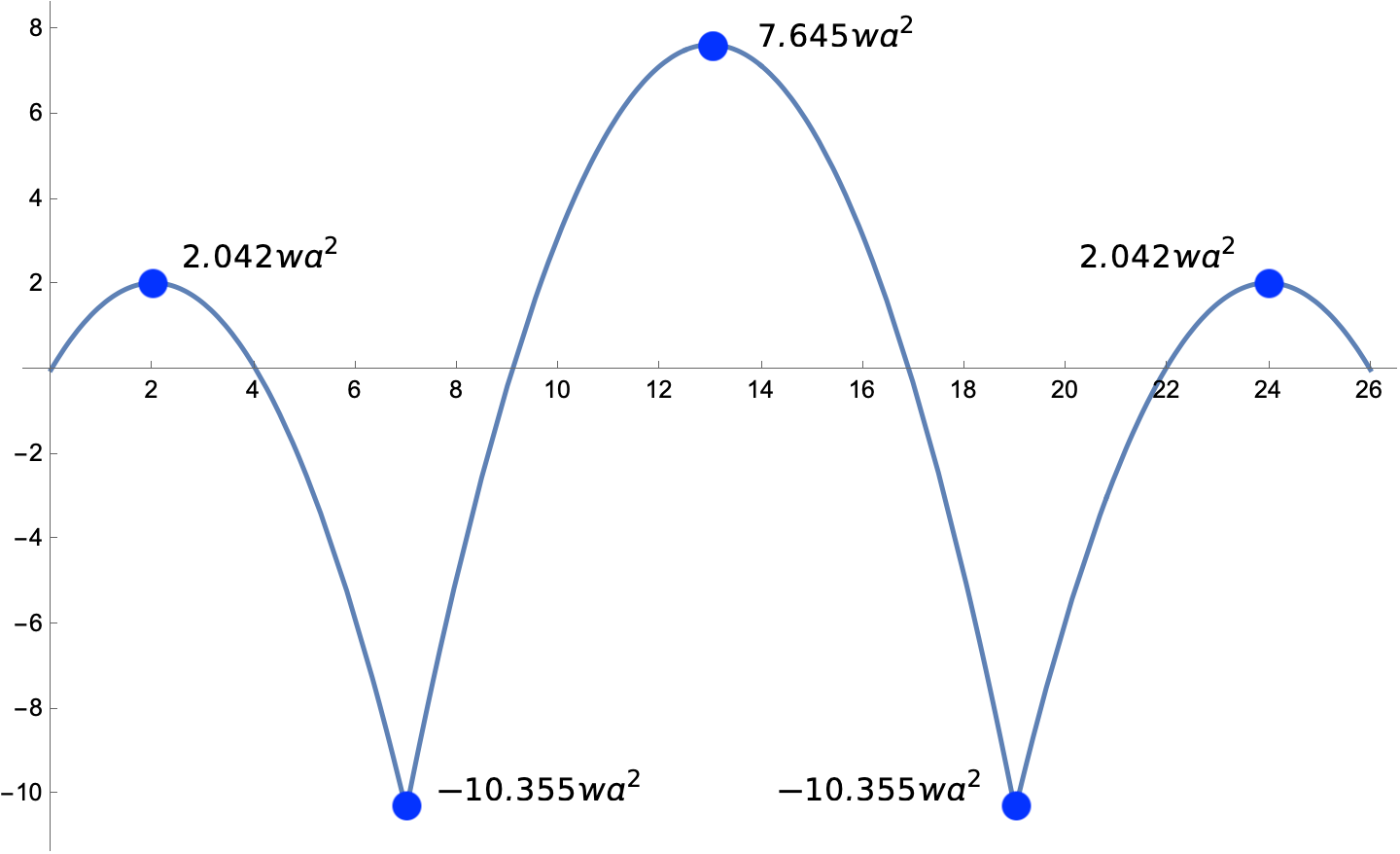

The resulting plot is

except that I added the annotations in Preview.
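The key values on the diagram can be checked without plotting anything. This Python sketch takes $P = \tfrac{15371}{1400}\,wa$, which is what setting $\partial U/\partial P = 0$ works out to for these span lengths, and evaluates the nondimensional moment at the supports and at the center of the middle span. The function name `m` mirrors the Mathematica variables but is otherwise an assumption of this example.

```python
from fractions import Fraction

P = Fraction(15371, 1400)   # interior reaction in units of w*a

def m(u):
    """Nondimensional bending moment M / (w a^2) at u = x / a, for 0 <= u <= 26."""
    base = 13 * u - Fraction(u) ** 2 / 2   # distributed load on the full 26a length
    if u <= 7:
        return base - P * u
    if u <= 19:
        return base - 7 * P
    return base - P * (26 - u)

for u in (0, 7, 13, 19, 26):
    print(u, float(m(u)))
```

The moment is zero at both ends, -10.355 over each interior support, and 7.645 at the center of the middle span, which fits inside the -12 to 10 range of the vertical ticks.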

I’ll spare you the work involved in making the third moment diagram in the Francis Scott Key Bridge post, the one for a beam with the left interior support missing. The process is the same as what we just went through.

I should mention, though, that although this seems complicated when every step is explained, most structural engineers would be able to whip through this analysis quickly. They wouldn’t have wasted time showing that symmetry doesn’t make this problem statically determinate. They would know immediately to use superposition. And they’d draw the two moment diagrams for the simpler problems as fast as their pencil could move. That’s how expertise works.

1. Recall that the horizontal equilibrium equation gives us no information because there are no horizontal forces. ↩

2. Google Translate told me the English translation was Around elastic systems, which seemed off. I thought Google was taking “intorno” too literally; it should be something like “on” or “about.” So I asked Federico Viticci what he thought, and he gave me “regarding” as a good translation of an older Italian usage. Thanks, Federico! ↩

Moment diagrams for simply supported beams

April 3, 2024 at 3:33 PM by Dr. Drang

I thought I’d run through how the moment diagrams in the last post were made. It’ll probably take two articles to get through it all.

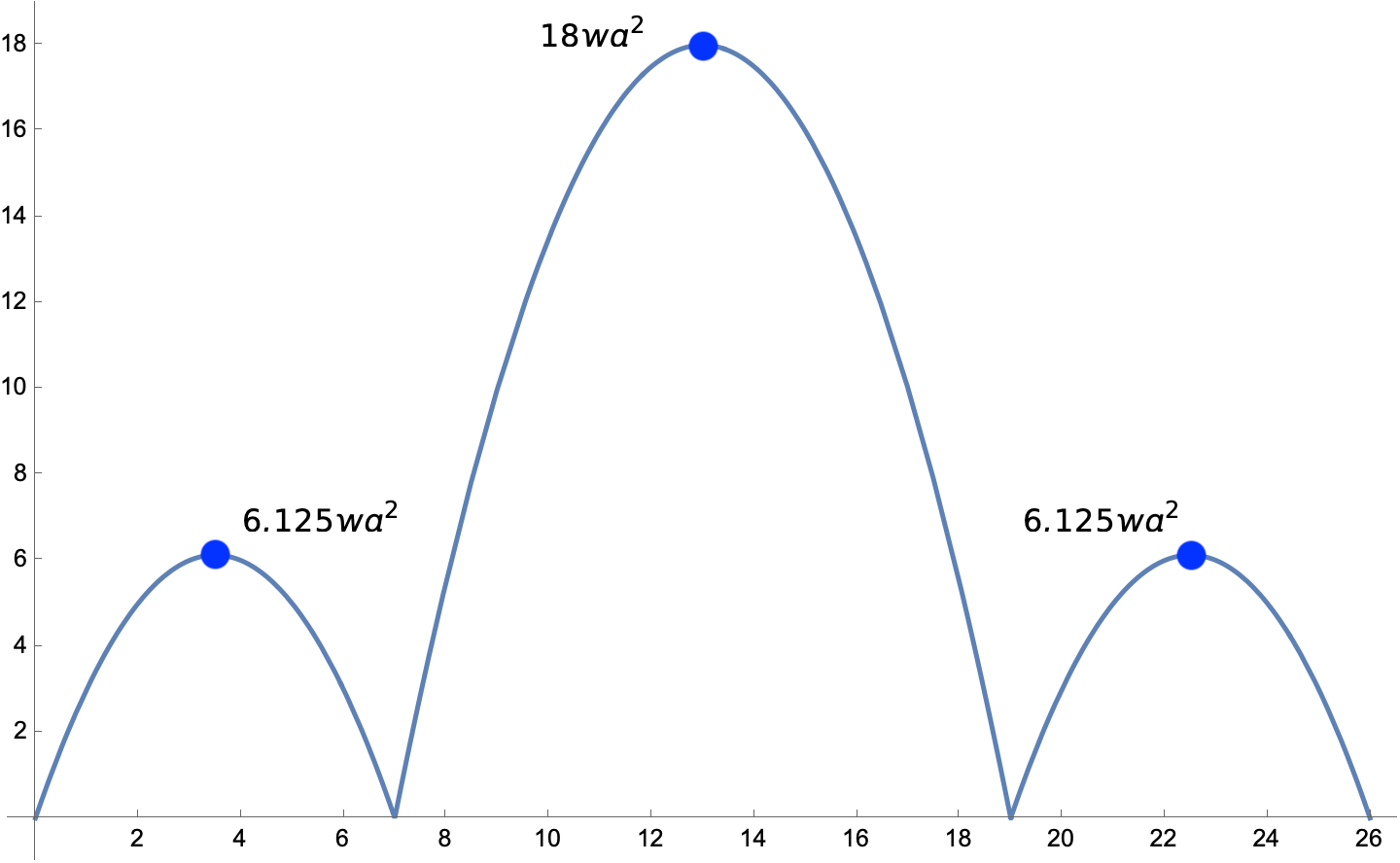

Let’s start with the three simple spans.

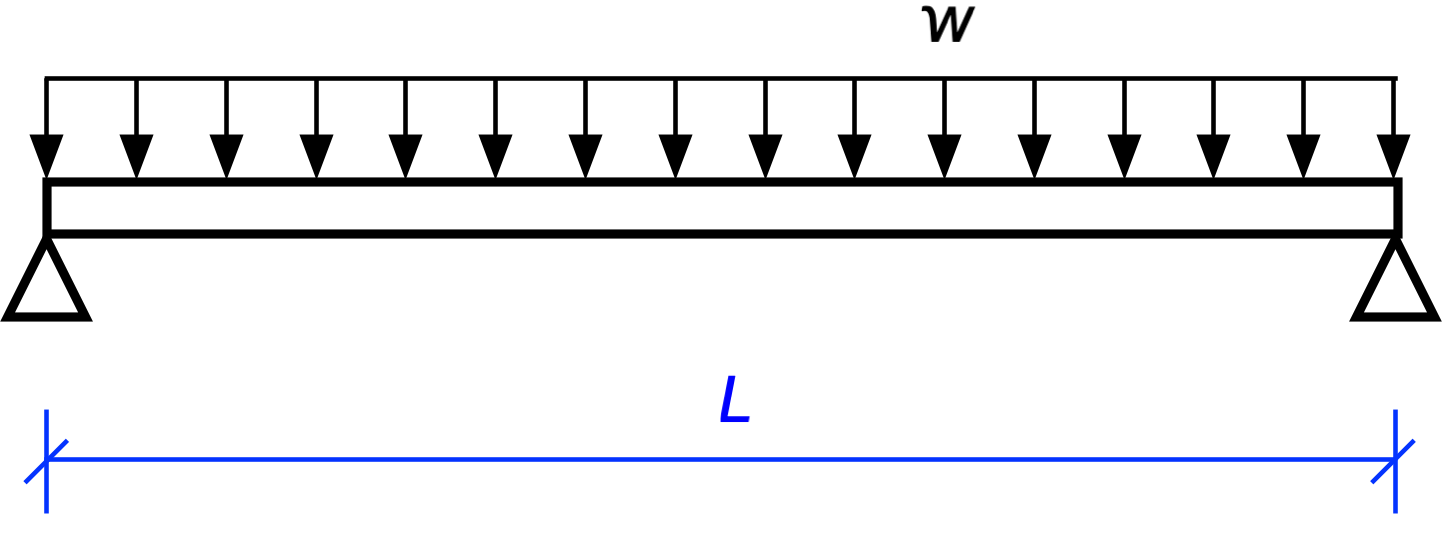

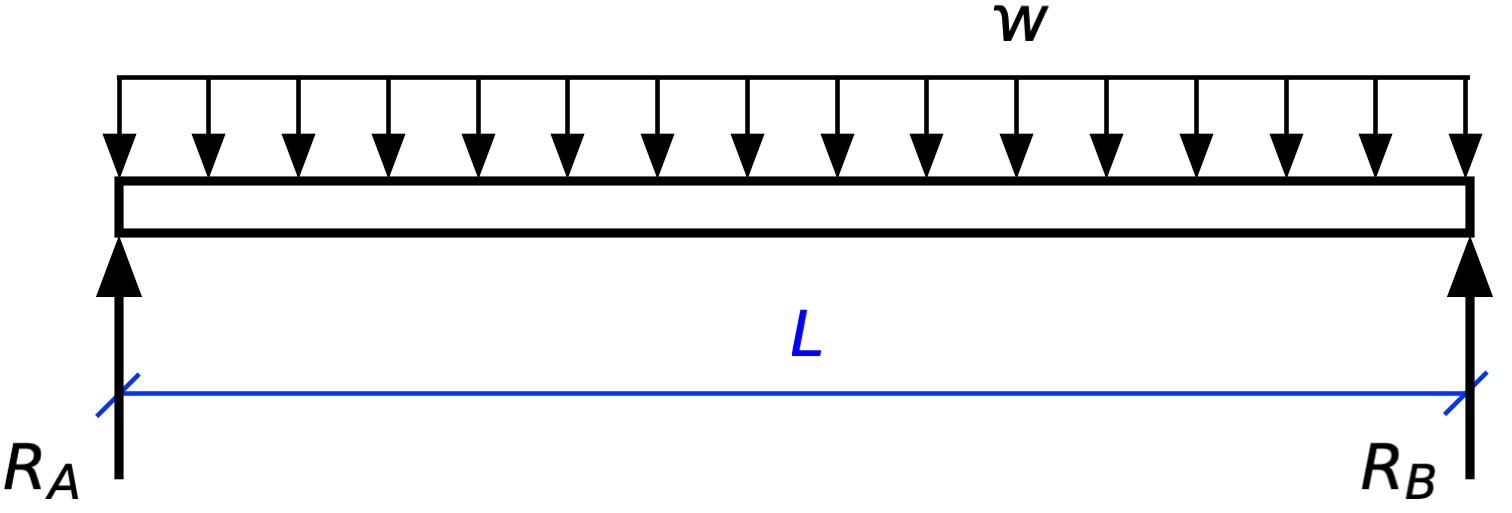

We’ll generate the moment diagram for a single simply supported beam and then apply it to each individual span.

Note here that $w$ is the uniform intensity of the load along the beam. It can be measured in pounds per foot, kilonewtons per meter, or any units that work out to force per length. The total load on the beam is $wL$.

We start by drawing the free body diagram for the beam, replacing the supports with their associated reaction forces.

There are two unknowns, $R_A$ and $R_B$, and two equations of statics:¹

$$\sum F_y = R_A + R_B - wL = 0$$

$$\sum M_A = R_B L - wL\left(\frac{L}{2}\right) = 0$$

The first represents the sum of all the forces in the vertical direction, and the second represents the sum of all the moments about the left end of the beam (Point A). The solution to these two equations is

$$R_A = R_B = \frac{wL}{2}$$

You could have gotten this same answer with no algebra by taking account of symmetry. Because the structure is symmetric and the loading is symmetric, the reaction forces must also be symmetric.² (I guess there’s a little algebra because you need to know that the two equal reactions add up to $wL$, but that really doesn’t count, does it?)

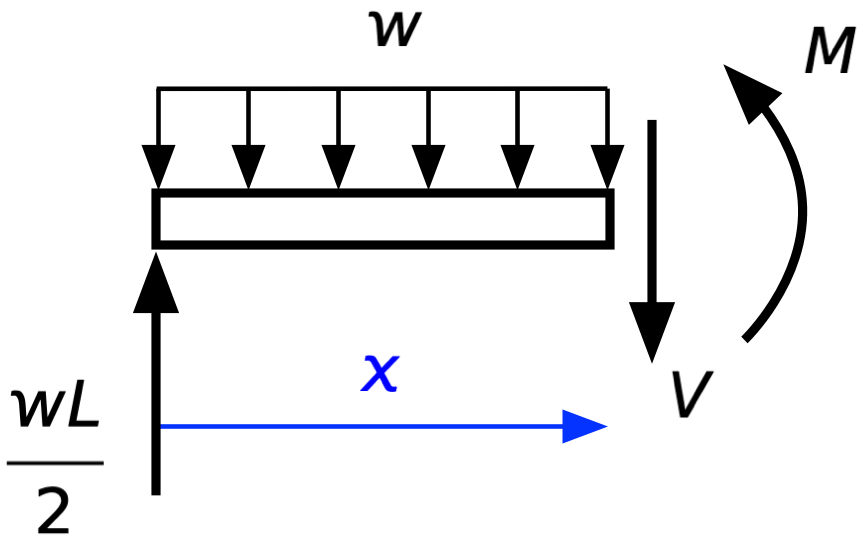

With the reaction forces determined, we move on to determining the bending moment within the beam. When a structure is in equilibrium, every portion of it is in equilibrium. So we can consider just part of the beam, a section of length $x$ starting at the left end, and draw its free body diagram:

Because we have conceptually cut the beam at a distance $x$ from the left end, we’ve exposed the internal shear, $V$, and moment, $M$, and can write the equations of statics for this portion of the beam.

$$R_A - wx - V = 0$$

$$M + wx\left(\frac{x}{2}\right) - R_A x = 0$$

In the second equation, we’re taking moments about the right end of this portion.

Solving these two equations gives us

$$V = w\left(\frac{L}{2} - x\right)$$

and

$$M = \frac{wx}{2}(L - x)$$

Although the shear matters in beam design, the moment is typically more important, so we’ll focus on that. The equation for $M$ is that of a parabola, with $M = 0$ at $x = 0$ and $x = L$ and rising to a peak of

$$M = \frac{wL^2}{8}$$

at midspan, $x = L/2$.

For the middle span of the three-span bridge, $L = 12a$, so

$$M = \frac{w(12a)^2}{8} = 18\,wa^2$$

at its center. And for the two side spans, $L = 7a$, so

$$M = \frac{w(7a)^2}{8} = 6.125\,wa^2$$

at their centers. These are the labeled dots in the moment diagram at the top of the post.
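The single-span formula and the two peak values are easy to confirm in Python. This is a minimal sketch with $w = a = 1$; the function name `moment` is an assumption of the example.

```python
def moment(x, w, L):
    """Bending moment at distance x from the left end of a simple span of length L."""
    return w * x / 2 * (L - x)

w, a = 1.0, 1.0
for L in (12 * a, 7 * a):
    peak = moment(L / 2, w, L)
    print(L, peak, w * L**2 / 8)
# 12.0 18.0 18.0
# 7.0 6.125 6.125
```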

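To make the moment diagram in Mathematica,³ we have to start by taking the generic equation for $M$ and rewriting it as three equations that work in the individual spans, where the origin of the $x$ coordinate is at the left end. That will look like this:

$$M = \begin{cases} \dfrac{wx}{2}\,(7a - x) & 0 \le x \le 7a \\[1ex] \dfrac{w(x - 7a)}{2}\,\bigl(12a - (x - 7a)\bigr) & 7a \le x \le 19a \\[1ex] \dfrac{w(x - 19a)}{2}\,\bigl(7a - (x - 19a)\bigr) & 19a \le x \le 26a \end{cases}$$

To get Mathematica to plot this, we need to nondimensionalize the moment and the horizontal coordinate so we’re plotting pure numbers, with no $w$ or $a$ terms left in the expressions. We do that by dividing through by $wa^2$ to nondimensionalize $M$ and by introducing a new variable, $u = x/a$, to nondimensionalize the horizontal coordinate. That gives us

$$m = \frac{M}{wa^2} = \begin{cases} \dfrac{u}{2}\,(7 - u) & 0 \le u \le 7 \\[1ex] \dfrac{u - 7}{2}\,\bigl(12 - (u - 7)\bigr) & 7 \le u \le 19 \\[1ex] \dfrac{u - 19}{2}\,\bigl(7 - (u - 19)\bigr) & 19 \le u \le 26 \end{cases}$$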

Now we’re ready to move to Mathematica for the plotting.

First, we define three $m$s, one for each span, and then use the Piecewise function within Plot to get a moment diagram that covers all three spans:

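    (* Nondimensional moment in each span, with u measured from the left end of the bridge *)
    m1 = 1/2*u*(7 - u);
    m2 = (u - 7)/2*(12 - (u - 7));
    m3 = (u - 19)/2*(7 - (u - 19));

    (* Ticks takes the horizontal ticks first, then the vertical ticks *)
    Plot[Piecewise[{{m1, u <= 7}, {m2, 7 < u <= 19}, {m3, u > 19}}],
     {u, 0, 26},
     ImageSize -> Large,
     PlotStyle -> Thick,
     Ticks -> {Table[t, {t, 0, 26, 2}], Table[t, {t, 0, 18, 2}]}]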

That gives us the moment diagram shown at the top of the post, except for the dots and the labels at the peaks of the parabolas. I added those “by hand” in Preview.
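For comparison, the same piecewise function can be written in plain Python. This is a sketch rather than a translation of the Mathematica code: instead of three separate expressions, it loops over a list of span limits (the name `SPANS` is made up here) and shifts the origin to the left support of whichever span contains `u`. The returned values could be handed to any plotting library.

```python
SPANS = [(0, 7), (7, 19), (19, 26)]   # (left end, right end) of each span, in units of a

def m(u):
    """Nondimensional moment M / (w a^2) at u = x / a for three simple spans."""
    for left, right in SPANS:
        if left <= u <= right:
            s = u - left                      # distance from this span's left support
            return s / 2 * ((right - left) - s)
    raise ValueError("u must lie between 0 and 26")

for u in (0, 3.5, 7, 13, 19, 22.5, 26):
    print(u, m(u))
```

The moment is zero at every support (u = 0, 7, 19, 26) and peaks at 6.125, 18, and 6.125 in the three spans.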

Simply supported beams are called “statically determinate” structures because we can determine all the internal and external forces through statics alone. That won’t be the case for the three-span continuous beam, which we’ll analyze in the next post.

1. Normally there would be three equations of statics for a plane problem like this, but because there are no forces in the horizontal direction, the horizontal equilibrium equation gives us no information. It’s just 0 = 0. ↩

2. “Symmetric” here means symmetric about the midspan of the beam. In other words, the right half of the beam is a mirror image of the left half. ↩

3. I could’ve plotted it with Python and Matplotlib, but I want to learn more about Mathematica, so I used this as an opportunity to do so. ↩