Implementing JSON Feed

May 21, 2017 at 12:47 PM by Dr. Drang

Unlike many “small” programming projects I’ve taken on, implementing JSON Feed—from both the publishing and aggregating ends—really has been quick and easy. Let me show you.

Publishing

Most bloggers use some sort of publicly accessible publishing framework—WordPress, Blogger, Squarespace, Jekyll, Ghost, whatever—so all the components needed to publish a JSON feed are already built into the underlying API. That’s true for ANIAT, too, but because I built its publishing system from the ground up, I’ll need to explain a bit about how it’s structured before getting to the JSON Feed coding.

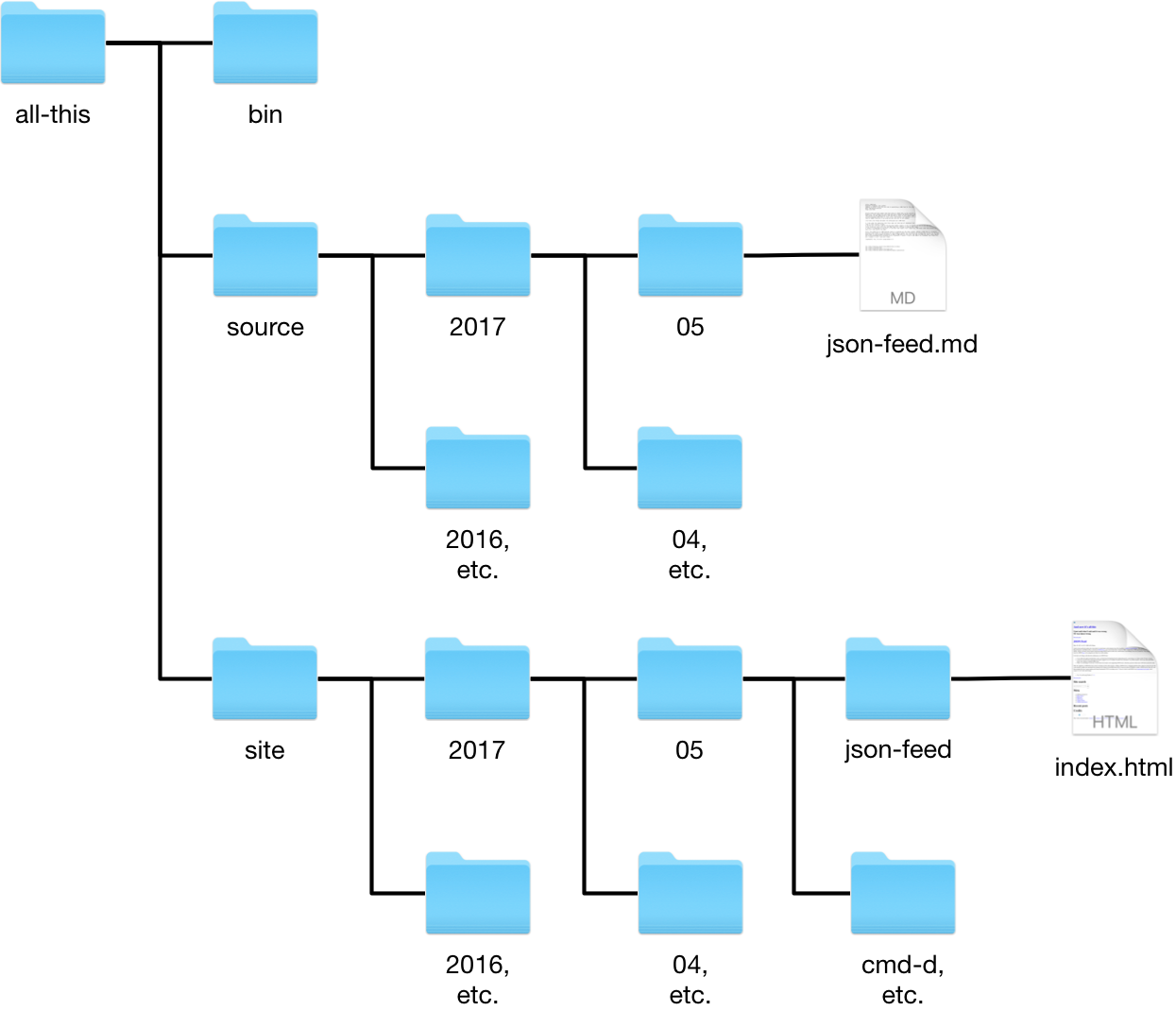

Here’s the directory layout:

The bin directory contains all the scripts that build the blog; the source directory contains year and month subdirectories which lead to the Markdown source files; and the site directory contains the same year and month subdirectories, leading to a directory and index.html file for each post.[^1] The site directory is what gets synced to the server when I write a new post.

Each Markdown source file starts with a header. Here’s an example:

Title: JSON Feed
Keywords: blogging, json, python
Summary: In which I join the cool kids in generating a JSON feed for the site.
Date: 2017-05-20 10:31:36
Slug: json-feed

Article feeds and feed readers have been kind of a dead topic on the
internet since the scramble to [replace Google Reader][1]. That
changed this past week when Brent Simmons and Manton Reece announced
[JSON Feed][2], a new feed format that avoids the XML complexities of
RSS and Atom in favor of very simple JSON…

The source directory also contains a file called all-posts.txt, which acts as a sort of database for the entire site. Each line contains the info for a single post. Here are the last several lines of all-posts.txt:

2017-05-02 23:04:26 engineering-and-yellow-lights Engineering and yellow lights
2017-05-11 10:33:57 rewriting Rewriting
2017-05-13 06:26:36 scene-from-a-marriage Scene from a marriage
2017-05-13 22:29:49 tables-again Tables again
2017-05-16 21:47:59 cmd-d CMD-D
2017-05-18 21:55:07 70s-textbook-design 70s textbook design
2017-05-20 10:31:36 json-feed JSON Feed

As you can see, this is mostly a repository for the meta-information from each post’s header.

With this in mind, we can now look at the script, buildJFeed, that generates the JSON feed file. It’s run via this pipeline from within the bin folder:

tail -12 ../source/all-posts.txt | tac | ./buildJFeed > ../site/feed.json

It takes the most recent 12 posts, which are listed at the bottom of the all-posts.txt file, reverses their order (tac is cat spelled backwards), builds the JSON feed from those lines, and puts the result in the site folder for syncing to the server.
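The same selection and reversal can be sketched in Python. This is only an illustrative stand-in for the shell pipeline, not part of buildJFeed; it assumes Python 3, and the function name `latest_lines` is hypothetical.

```python
def latest_lines(lines, count=12):
    """Return the last `count` lines, newest first (like `tail -N | tac`)."""
    first = max(len(lines) - count, 0)
    return lines[first:][::-1]

# Sample lines copied from the all-posts.txt excerpt above.
sample = [
    '2017-05-16 21:47:59 cmd-d CMD-D',
    '2017-05-18 21:55:07 70s-textbook-design 70s textbook design',
    '2017-05-20 10:31:36 json-feed JSON Feed',
]
for line in latest_lines(sample, count=2):
    print(line)
```

Because all-posts.txt is kept in chronological order, reversing the tail is enough to put the newest post first in the feed.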

Here’s buildJFeed:

python:

 1:  #!/usr/bin/python
 2:  # coding=utf8
 3:
 4:  from bs4 import BeautifulSoup
 5:  from datetime import datetime
 6:  import pytz
 7:  import sys
 8:  import json
 9:
10:  def convertDate(dstring):
11:    "Convert date/time string from my format to RFC 3339 format."
12:    homeTZ = pytz.timezone('US/Central')
13:    utc = pytz.utc
14:    t = homeTZ.localize(datetime.strptime(dstring, "%Y-%m-%d %H:%M:%S")).astimezone(utc)
15:    return t.strftime('%Y-%m-%dT%H:%M:%S+00:00')
16:
17:
18:  # Build the list of articles from lines in the all-posts.txt file,
19:  # which will be fed to the script via stdin.
20:  items = []
21:  pageInfo = sys.stdin.readlines()
22:  for i, pi in enumerate(pageInfo):
23:    (date, time, slug, title) = pi.split(None, 3)
24:
25:    # The partial path is year/month.
26:    path = date[:-3].replace('-', '/')
27:
28:    # Get the title, date, and URL from the article info.
29:    title = title.decode('utf8').strip()
30:    date = convertDate('{} {}'.format(date, time))
31:    link = 'http://leancrew.com/all-this/{}/{}/'.format(path, slug)
32:
33:    # Get the summary from the header of the Markdown source.
34:    mdsource = '../source/{}/{}.md'.format(path, slug)
35:    md = open(mdsource).read()
36:    header = md.split('\n\n', 1)[0]
37:    hlines = header.split('\n')
38:    summary = ''
39:    for line in hlines:
40:      k, v = line.split(':', 1)
41:      if k == 'Summary':
42:        summary = v.decode('utf8').strip()
43:        break
44:
45:    # Get the full content from the html of the article.
46:    index = '../site/{}/{}/index.html'.format(path, slug)
47:    html = open(index).read()
48:    soup = BeautifulSoup(html)
49:    content = soup.find('div', {'id': 'content'})
50:    navs = content.findAll('p', {'class': 'navigation'})
51:    for t in navs:
52:      t.extract()
53:    content.find('h1').extract()
54:    content.find('p', {'class': 'postdate'}).extract()
55:    body = content.renderContents().decode('utf8').strip()
56:    body += '<br />\n<p>[If the formatting looks odd in your feed reader, <a href="{}">visit the original article</a>]</p>'.format(link)
57:
58:    # Create the item entry and add it to the list.
59:    items.append({'id':link,
60:                  'url':link,
61:                  'title':title,
62:                  'summary':summary,
63:                  'content_html':body,
64:                  'date_published':date,
65:                  'author':{'name':'Dr. Drang'}})
66:
67:  # Build the overall feed.
68:  feed = {'version':'https://jsonfeed.org/version/1',
69:          'title':u'And now it’s all this',
70:          'home_page_url':'http://leancrew.com/all-this/',
71:          'feed_url':'http://leancrew.com/all-this/feed.json',
72:          'description':'I just said what I said and it was wrong. Or was taken wrong.',
73:          'icon':'http://leancrew.com/all-this/resources/snowman-200.png',
74:          'items':items}
75:
76:  # Print the feed.
77:  print json.dumps(feed, ensure_ascii=False).encode('utf8')

Most of the script is taken up with gathering the necessary information from the various files described above, because I don’t have an API to do that for me.

Line 21 reads the 12 lines from standard input into a list. Line 22 starts the loop through that list. First, the line is broken into parts in Line 23. Then Line 26 uses the year and month parts of the date to create the path to the Markdown source file and part of the path to the corresponding HTML file.
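To make the splitting concrete, here is a minimal Python 3 sketch of what Lines 23 and 26 do to a single line of all-posts.txt. The wrapper function `parse_post_line` is hypothetical; only the `split` and `replace` calls come from the script.

```python
def parse_post_line(line):
    """Split one all-posts.txt line into its fields plus the year/month path."""
    # maxsplit=3 keeps any spaces inside the title intact.
    date, time, slug, title = line.split(None, 3)
    # Drop the '-DD' day part, then turn 'YYYY-MM' into 'YYYY/MM'.
    path = date[:-3].replace('-', '/')
    return date, time, slug, title.strip(), path

fields = parse_post_line('2017-05-20 10:31:36 json-feed JSON Feed\n')
print(fields)
```

Limiting the split to three breaks is what lets a multiword title like "70s textbook design" survive as one field.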

Lines 29–31 get the title, publication date, and URL of the post by rearranging and modifying the various parts that were gathered in Line 23. The convertDate function, defined in Lines 10–15, uses the datetime and pytz libraries to change the local date and time I use in my posts,

2017-05-20 10:31:36

into a UTC timestamp in the format JSON Feed wants,

2017-05-20T15:31:36+00:00
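The conversion can be sketched with the standard library alone. This is not the script's code: it assumes Python 3, and a fixed UTC-5 offset (Central Daylight Time) stands in for pytz's 'US/Central' zone, which also handles the switch to standard time. The names `CDT` and `convert_date` are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Assumption: a fixed UTC-5 offset replaces the pytz 'US/Central' lookup,
# so this sketch is only right for dates that fall in daylight saving time.
CDT = timezone(timedelta(hours=-5), 'CDT')

def convert_date(dstring, tz=CDT):
    """Convert 'YYYY-MM-DD HH:MM:SS' local time to an RFC 3339 UTC string."""
    local = datetime.strptime(dstring, '%Y-%m-%d %H:%M:%S').replace(tzinfo=tz)
    return local.astimezone(timezone.utc).strftime('%Y-%m-%dT%H:%M:%S+00:00')

print(convert_date('2017-05-20 10:31:36'))
```

With the UTC-5 offset, a late-morning local time comes out five hours later in UTC.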

Lines 34–43 read the Markdown source file and pull out the Summary field from the header. Then lines 46–55 read the HTML file and use the Beautiful Soup library to extract just the content of the post. Line 56 adds a line to the end of the content with a link back to the post here.
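The header parsing is easy to try on a string instead of a file. The sketch below assumes Python 3 and a hypothetical helper name, `get_summary`; it uses `partition` rather than the script's `split` so that a header line without a colon is skipped instead of raising an error.

```python
def get_summary(md):
    """Return the Summary field from a Markdown file's header, or ''."""
    # The header is everything before the first blank line.
    header = md.split('\n\n', 1)[0]
    for line in header.split('\n'):
        key, sep, value = line.partition(':')
        if sep and key == 'Summary':
            return value.strip()
    return ''

# Header copied from the example source file; the body line is a placeholder.
sample = (
    'Title: JSON Feed\n'
    'Keywords: blogging, json, python\n'
    'Summary: In which I join the cool kids in generating a JSON feed for the site.\n'
    'Date: 2017-05-20 10:31:36\n'
    'Slug: json-feed\n'
    '\n'
    'Placeholder body text.\n'
)
print(get_summary(sample))
```

Splitting on the first colon only matters for fields like Date, whose values contain colons of their own.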

Those 56 lines are basically what I had already written long ago to construct the RSS feed for the site. The only difference is in the formatting of the publication date. Now we come to the part that’s new. Lines 59–65 add a new dictionary to the end of the items list (remember, we’re still inside the loop that started on Line 22), using the keys from the JSON Feed spec and the values we just gathered.

Finally, once we’re out of the loop, Lines 68–74 build the dictionary that will define the overall feed. The keys are again from the JSON Feed spec, and the values are fixed except, of course, for the items list at the end. Line 77 then uses the magic of the json library to print the feed dictionary in JSON format.
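A cut-down version of that last step, assuming Python 3 and a single placeholder item, shows how little is needed to get from dictionaries to a feed. The item's content is hypothetical; the keys and the feed title come from the script.

```python
import json

items = [{
    'id': 'http://leancrew.com/all-this/2017/05/json-feed/',
    'url': 'http://leancrew.com/all-this/2017/05/json-feed/',
    'title': 'JSON Feed',
    'content_html': '<p>Placeholder body.</p>',  # hypothetical content
}]
feed = {
    'version': 'https://jsonfeed.org/version/1',
    'title': 'And now it’s all this',
    'items': items,
}

# ensure_ascii=False keeps the curly apostrophe as a literal character
# instead of escaping it as \u2019; indent is only for readability here.
text = json.dumps(feed, ensure_ascii=False, indent=2)
print(text)
```

In Python 3 the result is already a text string, so the explicit `.encode('utf8')` from Line 77 is only needed when writing bytes.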

Although I’ve gone on at some length here, the only new parts are the trivial lines 59–65, 68–74, and 77. They replaced the complicated and ugly XML template strings in the RSS feed building script. The item template string is

python:

# Template for an individual item.
itemTemplate = '''<item>
<title>%s</title>
<link>%s</link>
<pubDate>%s</pubDate>
<dc:creator>
<![CDATA[Dr. Drang]]>
</dc:creator>
<guid>%s</guid>
<description>
<![CDATA[%s]]>
</description>
<content:encoded>
<![CDATA[%s
<br />
<p>[If the formatting looks odd in your feed reader, <a href="%s">visit the original article</a>]</p>]]>
</content:encoded>
</item>'''

and the overall feed template is

python:

# Build and print the overall feed.
feed = '''<?xml version="1.0" encoding="UTF-8"?><rss version="2.0"
xmlns:content="http://purl.org/rss/1.0/modules/content/"
xmlns:wfw="http://wellformedweb.org/CommentAPI/"
xmlns:dc="http://purl.org/dc/elements/1.1/"
xmlns:atom="http://www.w3.org/2005/Atom"
xmlns:sy="http://purl.org/rss/1.0/modules/syndication/"
xmlns:slash="http://purl.org/rss/1.0/modules/slash/"
>
<channel>
<title>And now it’s all this</title>
<atom:link href="http://leancrew.com/all-this/feed/" rel="self" type="application/rss+xml" />
<link>http://leancrew.com/all-this</link>
<description>I just said what I said and it was wrong. Or was taken wrong.</description>
<lastBuildDate>%s</lastBuildDate>
<language>en-US</language>
<sy:updatePeriod>hourly</sy:updatePeriod>
<sy:updateFrequency>1</sy:updateFrequency>
<generator>http://wordpress.org/?v=4.0</generator>
<atom:link rel="hub" href="http://pubsubhubbub.appspot.com"/>
<atom:link rel="hub" href="http://aniat.superfeedr.com"/>
%s
</channel>
</rss>
'''

And now you see why people like JSON Feed. RSS/Atom, while more powerful and flexible because of its XML roots, is a mess.

(By the way, I did try to use a library instead of template strings to build the RSS feed. It was horrible, and I didn’t feel like spending the rest of my life learning how to use it. Templates worked and I understood them.)

Aggregation

When Google Reader was shuttered, I tried a few other feed readers and eventually decided to build my own. I’ve been using it for about a year and a half now, and I have no regrets. It’s based on a script, dayfeed, that runs periodically on my server, gets all of today’s posts on the sites I subscribe to, and writes a static HTML file with their contents in reverse chronological order. The static file is bookmarked on my computers, phone, and iPad.

The script is a mess, mainly because RSS feeds are a mess, and I had to add lots of special cases. The flexibility of XML leads to much unnecessary variability. Brent Simmons’s involvement in the JSON Feed project is probably an attempt to quell the nightmares he still has from his days developing NetNewsWire.

Of course, by adding JSON Feed support to my script, I’ve made it more complex. But at least the additions are themselves pretty simple. At the top of the script, I now have a list of JSON Feed subscriptions.

python:

jsonsubscriptions = [
  'http://leancrew.com/all-this/feed.json',
  'https://daringfireball.net/feeds/json',
  'https://sixcolors.com/feed.json',
  'https://www.robjwells.com/feed.json']

And there’s now a section that loops through jsonsubscriptions and extracts today’s posts from them.

python:

for s in jsonsubscriptions:
  feed = urllib2.urlopen(s).read()
  jfeed = json.loads(feed)
  blog = jfeed['title']
  for i in jfeed['items']:
    try:
      when = i['date_published']
    except KeyError:
      when = i['date_modified']
    when = dp.parse(when)
    # Add item only if it's from "today."
    if when > start:
      try:
        author = ' ({})'.format(i['author']['name'])
      except:
        author = ''
      title = i['title']
      link = i['url']
      body = i['content_html']
      posts.append((when, blog, title, link, body, "{:04d}".format(n), author))
      n += 1

Before this section, posts is defined as a list of tuples, start is a datetime object that defines the “beginning” of the day (which I’ve defined as 10:00 PM local time of the previous day), and n is a counter that helps me generate a table of contents at the top of the aggregation page.
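One way to compute such a cutoff is sketched below. It is a guess at the idea rather than the code in dayfeed: it assumes Python 3, a hypothetical function name `day_start`, and a fixed UTC-5 offset in place of a real local time zone. The result has to be timezone-aware, because it is compared against the parsed feed timestamps, which carry offsets.

```python
from datetime import datetime, timedelta, timezone

# Assumption: fixed UTC-5 offset standing in for the local time zone.
LOCAL = timezone(timedelta(hours=-5))

def day_start(now):
    """Return 10:00 PM of the day before `now`, keeping its timezone."""
    yesterday = now - timedelta(days=1)
    return yesterday.replace(hour=22, minute=0, second=0, microsecond=0)

start = day_start(datetime(2017, 5, 21, 12, 47, tzinfo=LOCAL))
print(start.isoformat())
```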

The loop is pretty straightforward. It starts by reading in the feed and turning it into a Python dictionary via the json.loads function. This is basically the opposite of the json.dumps function used in buildJFeed. It pulls out the name of the blog and then loops through all the items. If the date of the item (date_published if present, date_modified otherwise[^2]) is later than start, we append a tuple consisting of the date, blog name, post title, URL, post body, counter, and author to the posts list.
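The filtering logic can be tried without a network connection by feeding it a feed held in a string. This sketch assumes Python 3 and replaces the dateutil parser with `datetime.fromisoformat`, which is enough for timestamps written with a numeric offset like the ones here. The function `todays_items` and the sample feed are made up for illustration.

```python
import json
from datetime import datetime, timezone

def todays_items(feed_text, start):
    """Return (when, blog, title, url, author) for items newer than start."""
    jfeed = json.loads(feed_text)
    blog = jfeed['title']
    found = []
    for item in jfeed['items']:
        # Prefer date_published; fall back to date_modified.
        stamp = item.get('date_published') or item['date_modified']
        when = datetime.fromisoformat(stamp)
        if when > start:
            author = item.get('author', {}).get('name', '')
            found.append((when, blog, item['title'], item['url'], author))
    return found

# Hypothetical two-item feed: one post after the cutoff, one before.
sample = json.dumps({
    'title': 'Example blog',
    'items': [
        {'title': 'New', 'url': 'https://example.com/new',
         'date_published': '2017-05-21T09:00:00+00:00',
         'author': {'name': 'A. Writer'}},
        {'title': 'Old', 'url': 'https://example.com/old',
         'date_modified': '2017-05-19T09:00:00+00:00'},
    ],
})
start = datetime(2017, 5, 21, 3, 0, tzinfo=timezone.utc)
for post in todays_items(sample, start):
    print(post)
```

Only the "New" item passes the cutoff; the "Old" item is still parsed through its date_modified fallback but then dropped.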

Later in the script, the RSS/Atom feeds are looped through and parsed using the feedparser library. This takes some of the pain out of parsing the XML, but the RSS/Atom section of the script is still nearly twice as long as the JSON section. After all the subscriptions are processed, an HTML file is generated with an internal CSS section to handle adaptive display of the feeds on devices of different sizes.

Summary

JSON Feed, for all its advantages, may be a flash in the pan. Not only do bloggers and publishing platforms have to adopt it, so do the major aggregator/reader services like Feedly and Digg and the analytics services like FeedPress and FeedBurner. But even if JSON Feed doesn’t take off, the time I spent adding it to my blog and aggregator was so short I won’t regret it.

[^1]: Why not just have HTML files directly in the month folders, mimicking the layout of the source directory? This site used to be a WordPress blog, and its permalinks were structured year/month/title/. When I built the current publishing system, I wanted all the old URLs to still work without redirects.

[^2]: In theory, there could be no date for the item, but that doesn’t make much sense for blogs and wouldn’t fit in with my goal of showing today’s posts only.