Making feedparser more tolerant

May 28, 2016 at 4:12 PM by Dr. Drang

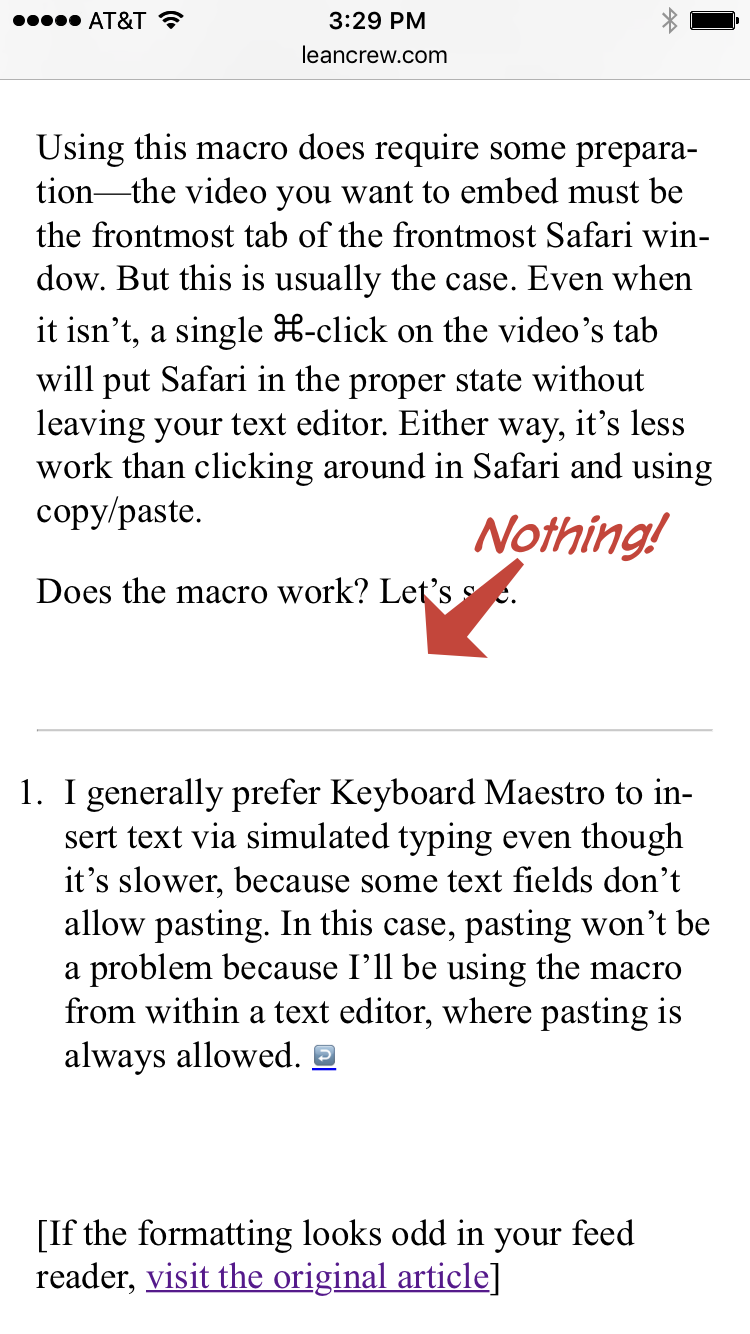

My last post ended with a Monty Python video from YouTube. The video displayed and played perfectly here on ANIAT, and it probably did the same in professionally programmed feed readers, but it didn’t show up at all in my homemade RSS aggregator.

This isn’t unique to videos posted here. Regardless of the site, embedded videos almost never appear in the aggregator. In the six months or so that I’ve been using it, I’ve gotten around this problem by simply clicking a link and going to the original article. But I had a little time today and decided to dig in and fix the problem. It required only one new line of code.

My aggregator is written in Python, and it uses the feedparser library to parse the many types of feeds and put them into a common, clean, and simple format that’s easy to work with. As it happens, though, feedparser sanitizes the HTML content of a feed to reduce the chance of malicious markup getting through. Among the HTML elements it filters out are the ones commonly used for embedding videos.

The solution came from reading this older post at Rumproarious and the answer to this Stack Overflow question. If you add elements to feedparser’s set of acceptable elements early in the script, those elements will make it through the parsing step without being sanitized away.

The line I added before the parsing was this (fp is the name under which the script imports feedparser):

    fp._HTMLSanitizer.acceptable_elements |= {'object', 'embed', 'iframe'}

This adds three HTML elements to feedparser’s whitelist. Because acceptable_elements is a set, the addition is really a union, hence the |=.
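To see why a single in-place union on a class attribute is enough, here is a minimal sketch that doesn’t use feedparser at all. `ToySanitizer`, its starting whitelist, and the sample snippet are hypothetical stand-ins I made up for illustration; the real sanitizer is far more thorough, but the whitelist mechanism is the same idea.

```python
import re


class ToySanitizer:
    """Hypothetical stand-in for a whitelist-based HTML sanitizer."""

    # Class-level set, shared by every instance (assumed starting whitelist)
    acceptable_elements = {'p', 'a', 'em', 'strong'}

    def sanitize(self, html):
        # Keep a tag only if its name is in the whitelist; drop it otherwise
        def keep_or_drop(match):
            name = match.group(1).lower()
            return match.group(0) if name in self.acceptable_elements else ''

        return re.sub(r'</?([A-Za-z][A-Za-z0-9]*)[^>]*>', keep_or_drop, html)


snippet = '<p>Watch: <iframe src="https://example.com/v"></iframe></p>'

# With the original whitelist, the iframe tags are stripped
before = ToySanitizer().sanitize(snippet)

# In-place set union on the class attribute, same form as the feedparser line
ToySanitizer.acceptable_elements |= {'object', 'embed', 'iframe'}

# Sanitizers created after the change let the iframe through
after = ToySanitizer().sanitize(snippet)

print(before)
print(after)
```

Because the set lives on the class rather than on an instance, the change has to happen only once, and it has to happen before any parsing is done. Note also that the leading underscore in `_HTMLSanitizer` marks it as private by Python convention, so the name may not stay put across feedparser versions.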

Now feeds look the way they should in the aggregator.

There is some risk to this, of course, but I’m trusting that those of you whose feeds I subscribe to won’t start adding malicious code to your feeds.