My feed reading system

February 4, 2018 at 12:52 PM by Dr. Drang

As promised, or threatened, here’s my setup for RSS feed reading. It consists of a few scripts that run periodically throughout the day on a server I control and which is accessible to me from any browser on any device. The idea is to have a system that fits the way I read and doesn’t rely on any particular service or company. If my current web host went out of business tomorrow, I could move this system to another and be back up and running in an hour or so—less time than it would take to research and decide on a new feed reading service.

The linchpin of the system is the getfeeds script:

```python
  1: #!/usr/bin/env python
  2: # coding=utf8
  3:
  4: import feedparser as fp
  5: import time
  6: from datetime import datetime, timedelta
  7: import pytz
  8: from collections import defaultdict
  9: import sys
 10: import dateutil.parser as dp
 11: import urllib2
 12: import json
 13: import sqlite3
 14: import urllib
 15:
 16: def addItem(db, blog, id):
 17:   add = 'insert into items (blog, id) values (?, ?)'
 18:   db.execute(add, (blog, id))
 19:   db.commit()
 20:
 21: jsonsubscriptions = [
 22:   'http://leancrew.com/all-this/feed.json',
 23:   'https://daringfireball.net/feeds/json',
 24:   'https://sixcolors.com/feed.json',
 25:   'https://www.robjwells.com/feed.json',
 26:   'http://inessential.com/feed.json',
 27:   'https://macstories.net/feed/json']
 28:
 29: xmlsubscriptions = [
 30:   'http://feedpress.me/512pixels',
 31:   'http://alicublog.blogspot.com/feeds/posts/default',
 32:   'http://blog.ashleynh.me/feed',
 33:   'http://www.betalogue.com/feed/',
 34:   'http://bitsplitting.org/feed/',
 35:   'https://kieranhealy.org/blog/index.xml',
 36:   'http://blueplaid.net/news?format=rss',
 37:   'http://brett.trpstra.net/brettterpstra',
 38:   'http://feeds.feedburner.com/NerdGap',
 39:   'http://www.libertypages.com/clarktech/?feed=rss2',
 40:   'http://feeds.feedburner.com/CommonplaceCartography',
 41:   'http://kk.org/cooltools/feed',
 42:   'https://david-smith.org/atom.xml',
 43:   'http://feeds.feedburner.com/drbunsenblog',
 44:   'http://stratechery.com/feed/',
 45:   'http://feeds.feedburner.com/IgnoreTheCode',
 46:   'http://indiestack.com/feed/',
 47:   'http://feeds.feedburner.com/theendeavour',
 48:   'http://feed.katiefloyd.me/',
 49:   'http://feeds.feedburner.com/KevinDrum',
 50:   'http://www.kungfugrippe.com/rss',
 51:   'http://www.caseyliss.com/rss',
 52:   'http://www.macdrifter.com/feeds/all.atom.xml',
 53:   'http://mackenab.com/feed',
 54:   'http://macsparky.com/blog?format=rss',
 55:   'http://www.marco.org/rss',
 56:   'http://themindfulbit.com/feed.xml',
 57:   'http://merrillmarkoe.com/feed',
 58:   'http://mjtsai.com/blog/feed/',
 59:   'http://feeds.feedburner.com/mygeekdaddy',
 60:   'https://nathangrigg.com/feed/all.rss',
 61:   'http://onethingwell.org/rss',
 62:   'http://www.practicallyefficient.com/feed.xml',
 63:   'http://www.red-sweater.com/blog/feed/',
 64:   'http://blog.rtwilson.com/feed/',
 65:   'http://feedpress.me/candlerblog',
 66:   'http://inversesquare.wordpress.com/feed/',
 67:   'http://joe-steel.com/feed',
 68:   'http://feeds.veritrope.com/',
 69:   'https://with.thegra.in/feed',
 70:   'http://xkcd.com/atom.xml',
 71:   'http://doingthatwrong.com/?format=rss']
 72:
 73: # Feedparser filters out certain tags and eliminates them from the
 74: # parsed version of a feed. This is particularly troublesome with
 75: # embedded videos. This can be fixed by changing how the filter
 76: # works. The following is based on these tips:
 77: #
 78: # http://rumproarious.com/2010/05/07/\
 79: # universal-feed-parser-is-awesome-except-for-embedded-videos/
 80: #
 81: # http://stackoverflow.com/questions/30353531/\
 82: # python-rss-feedparser-cant-parse-description-correctly
 83: #
 84: # There is some danger here, as the included elements may contain
 85: # malicious code.
 86: fp._HTMLSanitizer.acceptable_elements |= {'object', 'embed', 'iframe'}
 87:
 88: # Connect to the database of read posts.
 89: db = sqlite3.connect('/path/to/read-feeds.db')
 90: query = 'select * from items where blog=? and id=?'
 91:
 92: # Collect all unread posts and put them in a list of tuples. The items
 93: # in each tuple are when, blog, title, link, body, n, author, and id.
 94: posts = []
 95: n = 0
 96:
 97: # We're not going to accept items that are more than 3 days old, even
 98: # if they aren't in the database of read items. These typically come up
 99: # when someone does a reset of some sort on their blog and regenerates
100: # a feed with old posts that aren't in the database or posts that are
101: # in the database but have different IDs.
102: utc = pytz.utc
103: homeTZ = pytz.timezone('US/Central')
104: daysago = datetime.utcnow() - timedelta(days=3)
105: daysago = utc.localize(daysago)
106:
107: # Start with the JSON feeds.
108: for s in jsonsubscriptions:
109:   try:
110:     feed = urllib2.urlopen(s).read()
111:     jfeed = json.loads(feed)
112:     blog = jfeed['title']
113:     for i in jfeed['items']:
114:       try:
115:         id = i['id']
116:       except KeyError:
117:         id = i['url']
118:
119:       # Add item only if it hasn't been read.
120:       match = db.execute(query, (blog, id)).fetchone()
121:       if not match:
122:         try:
123:           when = i['date_published']
124:         except KeyError:
125:           when = i['date_modified']
126:         when = dp.parse(when)
127:         when = when.astimezone(utc) if when.tzinfo else utc.localize(when)
128:
129:         try:
130:           author = ' ({})'.format(i['author']['name'])
131:         except KeyError:
132:           author = ''
133:         try:
134:           title = i['title']
135:         except KeyError:
136:           title = blog
137:         link = i['url']
138:         body = i['content_html']
139:
140:         # Include only posts that are less than 3 days old. Add older posts
141:         # to the read database.
142:         if when > daysago:
143:           posts.append((when, blog, title, link, body, "{:04d}".format(n), author, id))
144:           n += 1
145:         else:
146:           addItem(db, blog, id)
147:   except:
148:     pass
149:
150: # Add the RSS/Atom feeds.
151: for s in xmlsubscriptions:
152:   try:
153:     f = fp.parse(s)
154:     try:
155:       blog = f['feed']['title']
156:     except KeyError:
157:       blog = "---"
158:     for e in f['entries']:
159:       try:
160:         id = e['id']
161:         if id == '':
162:           id = e['link']
163:       except KeyError:
164:         id = e['link']
165:
166:       # Add item only if it hasn't been read.
167:       match = db.execute(query, (blog, id)).fetchone()
168:       if not match:
169:
170:         try:
171:           when = e['published_parsed']
172:         except KeyError:
173:           when = e['updated_parsed']
174:         when = datetime(*when[:6])
175:         when = utc.localize(when)
176:
177:         try:
178:           title = e['title']
179:         except KeyError:
180:           title = blog
181:         try:
182:           author = " ({})".format(e['authors'][0]['name'])
183:         except KeyError:
184:           author = ""
185:         try:
186:           body = e['content'][0]['value']
187:         except KeyError:
188:           body = e['summary']
189:         link = e['link']
190:
191:         # Include only posts that are less than 3 days old. Add older posts
192:         # to the read database.
193:         if when > daysago:
194:           posts.append((when, blog, title, link, body, "{:04d}".format(n), author, id))
195:           n += 1
196:         else:
197:           addItem(db, blog, id)
198:   except:
199:     pass
200:
201: # Sort the posts in reverse chronological order.
202: posts.sort()
203: posts.reverse()
204: toclinks = defaultdict(list)
205: for p in posts:
206:   toclinks[p[1]].append((p[2], p[5]))
207:
208: # Create an HTML list of the posts.
209: listTemplate = '''<li>
210: <p class="title" id="{5}"><a href="{3}">{2}</a></p>
211: <p class="info">{1}{6}<br />{0}</p>
212: <p>{4}</p>
213: <form action="/path/to/addreaditem.py" method="post" name="readform{5}" onsubmit="return markAsRead(this);">
214: <input type="hidden" name="blog" value="{8}" />
215: <input type="hidden" name="id" value="{9}" />
216: <input class="mark-button" type="submit" value="Mark as read" name="readbutton{5}"/>
217: </form>
218: <br />
219: <form action="/path/to/addpinboarditem.py" method="post" name="pbform{5}" onsubmit="return addToPinboard(this);">
220: <input type="hidden" name="url" value="{11}" />
221: <input type="hidden" name="title" value="{10}" />
222: <input class="pinboard-field" type="text" name="tags" size="30" /><br />
223: <input class="pinboard-button" type="submit" value="Pinboard" name="pbbutton{5}" />
224: </form>
225: </li>'''
226: litems = []
227: for p in posts:
228:   q = [ x.encode('utf8') for x in p[1:] ]
229:   timestamp = p[0].astimezone(homeTZ)
230:   q.insert(0, timestamp.strftime('%b %d, %Y %I:%M %p'))
231:   q += [urllib.quote_plus(q[1]),
232:         urllib.quote_plus(q[7]),
233:         urllib.quote_plus(q[2]),
234:         urllib.quote_plus(q[3])]
235:   litems.append(listTemplate.format(*q))
236: body = '\n<hr />\n'.join(litems)
237:
238: # Create a table of contents organized by blog.
239: tocTemplate = '''<li class="toctitle"><a href="#{1}">{0}</a></li>\n'''
240: toc = ''
241: blogs = toclinks.keys()
242: blogs.sort()
243: for b in blogs:
244:   toc += '''<p class="tocblog">{0}</p>
245: <ul class="rss">
246: '''.format(b.encode('utf8'))
247:   for p in toclinks[b]:
248:     q = [ x.encode('utf8') for x in p ]
249:     toc += tocTemplate.format(*q)
250:   toc += '</ul>\n'
251:
252: # Print the HTML.
253: print '''<html>
254: <meta charset="UTF-8" />
255: <meta name="viewport" content="width=device-width" />
256: <head>
257: <style>
258: body {{
259:   background-color: #555;
260:   width: 750px;
261:   margin-top: 0;
262:   margin-left: auto;
263:   margin-right: auto;
264:   padding-top: 0;
265:   font-family: Georgia, Serif;
266: }}
267: h1, h2, h3, h4, h5, h6 {{
268:   font-family: Helvetica, Sans-serif;
269: }}
270: h1 {{
271:   font-size: 110%;
272: }}
273: h2 {{
274:   font-size: 105%;
275: }}
276: h3, h4, h5, h6 {{
277:   font-size: 100%;
278: }}
279: .content {{
280:   padding-top: 1em;
281:   background-color: white;
282: }}
283: .rss {{
284:   list-style-type: none;
285:   margin: 0;
286:   padding: .5em 1em 1em 1.5em;
287:   background-color: white;
288: }}
289: .rss li {{
290:   margin-left: -.5em;
291:   line-height: 1.4;
292: }}
293: .rss li pre {{
294:   overflow: auto;
295: }}
296: .rss li p {{
297:   overflow-wrap: break-word;
298:   word-wrap: break-word;
299:   word-break: break-word;
300:   -webkit-hyphens: auto;
301:   hyphens: auto;
302: }}
303: .rss li figure {{
304:   -webkit-margin-before: 0;
305:   -webkit-margin-after: 0;
306:   -webkit-margin-start: 0;
307:   -webkit-margin-end: 0;
308: }}
309: .title {{
310:   font-weight: bold;
311:   font-family: Helvetica, Sans-serif;
312:   font-size: 120%;
313:   margin-bottom: .25em;
314: }}
315: .title a {{
316:   text-decoration: none;
317:   color: black;
318: }}
319: .info {{
320:   font-size: 85%;
321:   margin-top: 0;
322:   margin-left: .5em;
323: }}
324: .tocblog {{
325:   font-weight: bold;
326:   font-family: Helvetica, Sans-serif;
327:   font-size: 100%;
328:   margin-top: .25em;
329:   margin-bottom: 0;
330: }}
331: .toctitle {{
332:   font-weight: normal;
333:   font-family: Helvetica, Sans-serif;
334:   font-size: 100%;
335:   padding-left: .75em;
336:   text-indent: -.75em;
337:   margin-bottom: 0;
338: }}
339: .toctitle a {{
340:   text-decoration: none;
341:   color: black;
342: }}
343: .tocinfo {{
344:   font-size: 75%;
345:   margin-top: 0;
346:   margin-left: .5em;
347: }}
348: img, embed, iframe, object {{
349:   max-width: 700px;
350: }}
351: .mark-button {{
352:   width: 15em;
353:   border: none;
354:   border-radius: 4px;
355:   color: black;
356:   background-color: #B3FFB2;
357:   text-align: center;
358:   padding: .25em 0 .25em 0;
359:   font-weight: bold;
360:   font-size: 1em;
361: }}
362: .pinboard-button {{
363:   width: 7em;
364:   border: none;
365:   border-radius: 4px;
366:   color: black;
367:   background-color: #B3FFB2;
368:   text-align: center;
369:   padding: .25em 0 .25em 0;
370:   font-weight: bold;
371:   font-size: 1em;
372:   margin-left: 11em;
373: }}
374: .pinboard-field {{
375:   font-size: 1em;
376:   font-family: Helvetica, Sans-serif;
377: }}
378:
379: @media only screen
380:   and (max-width: 667px)
381:   and (-webkit-device-pixel-ratio: 2)
382:   and (orientation: portrait) {{
383:   body {{
384:     font-size: 200%;
385:     width: 640px;
386:     background-color: white;
387:   }}
388:   .rss li {{
389:     line-height: normal;
390:   }}
391:   img, embed, iframe, object {{
392:     max-width: 550px;
393:   }}
394: }}
395: @media only screen
396:   and (min-width: 668px)
397:   and (-webkit-device-pixel-ratio: 2) {{
398:   body {{
399:     font-size: 150%;
400:     width: 800px;
401:     background-color: #555;
402:   }}
403:   .rss li {{
404:     line-height: normal;
405:   }}
406:   img, embed, iframe, object {{
407:     max-width: 700px;
408:   }}
409: }}
410: </style>
411:
412: <script language=javascript type="text/javascript">
413: function markAsRead(theForm) {{
414:   var mark = new XMLHttpRequest();
415:   mark.open(theForm.method, theForm.action, true);
416:   mark.send(new FormData(theForm));
417:   mark.onreadystatechange = function() {{
418:     if (mark.readyState == 4 && mark.status == 200) {{
419:       var buttonName = theForm.name.replace("readform", "readbutton");
420:       var theButton = document.getElementsByName(buttonName)[0];
421:       theButton.value = "Marked!";
422:       theButton.style.backgroundColor = "#FFB2B2";
423:     }}
424:   }}
425:   return false;
426: }}
427:
428: function addToPinboard(theForm) {{
429:   var mark = new XMLHttpRequest();
430:   mark.open(theForm.method, theForm.action, true);
431:   mark.send(new FormData(theForm));
432:   mark.onreadystatechange = function() {{
433:     if (mark.readyState == 4 && mark.status == 200) {{
434:       var buttonName = theForm.name.replace("pbform", "pbbutton");
435:       var theButton = document.getElementsByName(buttonName)[0];
436:       theButton.value = "Saved!";
437:       theButton.style.backgroundColor = "#FFB2B2";
438:     }}
439:   }}
440:   return false;
441: }}
442:
443: </script>
444:
445: <title>Today’s RSS</title>
446: </head>
447: <body>
448: <div class="content">
449: <ul class="rss">
450: {}
451: </ul>
452: <hr />
453: <a name="start" />
454: <ul class="rss">
455: {}
456: </ul>
457: </div>
458: </body>
459: </html>
460: '''.format(toc, body)
```

For me, this is a very long script, but most of it is just the HTML template. What getfeeds does is go through my subscription list, gather all the articles from those feeds that I haven’t already read, and generate a static HTML file with the unread articles laid out in reverse chronological order. At the end of each article, it puts a button to mark the article as read and a form for adding a link to the article to my account at Pinboard.

Start by noticing that this is a Python 2 script, so Line 2 is a comment that tells Python that UTF-8 characters will be in the source code. We’ll also run into decode/encode invocations that wouldn’t be necessary if I’d written this in Python 3. I suppose I’ll translate it at some point.

Lines 16–19 are a function for adding an article to the database of read items. This is an SQLite database that’s also kept on the server. The database has a single table whose schema consists of just two fields: the blog name and the article GUID. Each article that I’ve marked as read gets entered as a new record in the database. The addItem function runs a simple SQL insertion command via Python’s sqlite3 library.
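If you want to recreate the database yourself, here's a minimal Python 3 sketch. The post doesn't show the exact schema, so the `create table` statement is an assumption based on the description above (one table, two text fields). `open_db`, `add_item`, and `is_marked` are hypothetical names for Python 3 counterparts of `addItem` and the lookup query.

```python
import sqlite3

def open_db(path):
    """Open (or create) the read-items database.

    Assumed schema: a single table with two text columns, blog and id.
    """
    db = sqlite3.connect(path)
    db.execute('create table if not exists items (blog text, id text)')
    return db

def add_item(db, blog, item_id):
    """Record an article as read, as addItem does in getfeeds."""
    db.execute('insert into items (blog, id) values (?, ?)', (blog, item_id))
    db.commit()

def is_marked(db, blog, item_id):
    """Return True if this (blog, id) pair is already in the table."""
    row = db.execute('select * from items where blog=? and id=?',
                     (blog, item_id)).fetchone()
    return row is not None

if __name__ == '__main__':
    # Made-up example data; ':memory:' keeps the demo from touching disk.
    db = open_db(':memory:')
    add_item(db, 'Example Blog', 'https://example.com/post')
    print(is_marked(db, 'Example Blog', 'https://example.com/post'))
    print(is_marked(db, 'Other Blog', 'https://example.com/post'))
```

The `?` placeholders let sqlite3 handle quoting, so blog names and GUIDs containing apostrophes can't break the SQL.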

Lines 21–27 and 29–71 define my subscriptions: two lists of feed URLs, one for JSON feeds and the other for traditional RSS/Atom feeds. A lot of these feeds have gone silent over the past year, but I remain subscribed to them in the hope that they’ll come back to life.

Line 86 sets a parameter in the feedparser library that relaxes some of the filtering that library does by default. There is some danger to this, but I’ve found that some blogs are essentially worthless if I don’t do this. The comments above Line 86 contain links to discussions of feedparser’s filtering.

Lines 89–90 connect to the database of read items (note the fake path to the database file) and create a query string that we’ll use later to determine whether an article is in the database.

Lines 94–95 initialize the list of posts that will ultimately be turned into the HTML page and the n variable that keeps track of the post count.

Lines 102–105 initialize a set of variables used to handle timezone information and the filtering of older articles that aren’t in the database of read items. As discussed in the comments above Line 102 and in my previous post, old articles that aren’t in the database can sometimes appear in a blog’s RSS feed when the blog gets updated.
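In Python 3, the standard library's `datetime.timezone.utc` can stand in for pytz when all you need is UTC. Here is a minimal sketch of the three-day filter. It assumes every post date has already been converted to an aware datetime, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

MAX_AGE = timedelta(days=3)  # same three-day window as getfeeds

def cutoff(now=None):
    """Return the aware UTC datetime before which unread posts count as stale."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - MAX_AGE

def is_recent(when, now=None):
    """True if the aware datetime `when` falls inside the three-day window.

    Aware datetimes compare by absolute instant, so `when` may carry
    any UTC offset.
    """
    return when > cutoff(now)
```

Computing "now" as an aware UTC time avoids mixing a local clock reading with a UTC label.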

Lines 108–148 assemble the unread articles from the JSON feeds. For each subscription, the feed is downloaded, converted into a dictionary, and run through to extract information on each article. Articles that are in the database of read items are ignored (Lines 120–121). Articles that aren’t in the database are appended to the posts list, unless they’re more than three days old, in which case they are added to the database of read items instead of to posts (Lines 142–146).

Much of Lines 108–148 is devoted to error handling and the normalization of disparate input into a uniform output. Each item of the posts list is a tuple with

- the article date,

- the blog name,

- the article title,

- the article URL,

- the article content,

- the running count of posts,

- the article author, and

- the article GUID.
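The normalization for a single JSON Feed item can be sketched in Python 3 as follows. It uses `datetime.fromisoformat` instead of dateutil, and that substitution is an assumption on my part. The sketch converts offset-bearing dates to UTC instead of calling pytz's `localize`, because `localize` raises `ValueError` on a datetime that already carries a tzinfo, and JSON Feed dates normally include an offset. `parse_date` and `normalize_json_item` are hypothetical names.

```python
from datetime import datetime, timezone

def parse_date(s):
    """Parse an RFC 3339 date string into an aware UTC datetime.

    fromisoformat() before Python 3.11 rejects a trailing 'Z',
    so replace it with an explicit +00:00 offset first.
    """
    if s.endswith('Z'):
        s = s[:-1] + '+00:00'
    dt = datetime.fromisoformat(s)
    if dt.tzinfo is None:  # treat a naive date as UTC, as getfeeds does
        dt = dt.replace(tzinfo=timezone.utc)
    return dt.astimezone(timezone.utc)

def normalize_json_item(item, blog, n):
    """Turn one JSON Feed item (a dict) into the getfeeds tuple:
    (when, blog, title, link, body, n, author, id)."""
    item_id = str(item['id']) if 'id' in item else item['url']
    when = parse_date(item.get('date_published') or item['date_modified'])
    name = item.get('author', {}).get('name')
    author = ' ({})'.format(name) if name else ''
    title = item.get('title', blog)
    # Assumption: fall back to an empty body if content_html is missing.
    body = item.get('content_html', '')
    return (when, blog, title, item['url'], body,
            '{:04d}'.format(n), author, item_id)
```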

Lines 151–199 do for RSS/Atom feeds what Lines 108–148 do for JSON feeds. The main difference is that the feedparser library is used to download and convert the feed into a dictionary.
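On the RSS/Atom side, feedparser gives dates as `time.struct_time` values in `published_parsed` or `updated_parsed`, which feedparser normalizes to UTC. A Python 3 sketch of the conversion in Lines 171–175 follows. A plain dict stands in for a feedparser entry, and the helper names are hypothetical.

```python
import time
from datetime import datetime, timezone

def struct_to_utc(st):
    """Convert a UTC time.struct_time into an aware datetime.

    The first six fields are year, month, day, hour, minute, second.
    """
    return datetime(*st[:6], tzinfo=timezone.utc)

def entry_date(entry):
    """Prefer the published date and fall back to the updated date.

    Returns None if the entry has neither.
    """
    st = entry.get('published_parsed') or entry.get('updated_parsed')
    return struct_to_utc(st) if st else None
```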

Lines 202–203 sort the posts in reverse chronological order. This is made easy by my choice to put the article date as the first item in the tuple described above.
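Here is a small illustration of why putting the date first in each tuple is enough. The posts below are hypothetical and cut down to three fields.

```python
from datetime import datetime, timezone

# Hypothetical posts in the getfeeds layout, trimmed to (when, blog, title).
posts = [
    (datetime(2018, 2, 3, 9, 0, tzinfo=timezone.utc), 'Blog A', 'Older'),
    (datetime(2018, 2, 4, 9, 0, tzinfo=timezone.utc), 'Blog B', 'Newest'),
    (datetime(2018, 2, 3, 17, 0, tzinfo=timezone.utc), 'Blog C', 'Middle'),
]

# Tuples compare element by element, so the date decides the order.
posts.sort(reverse=True)
print([p[2] for p in posts])
```

`sort(reverse=True)` only differs from `sort()` followed by `reverse()` in how it orders tuples that compare equal. In getfeeds the running count makes every tuple distinct, so the two give the same result.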

Lines 204–206 generate a dictionary of lists of tuples, toclinks, for the HTML page’s table of contents, which appears at the top of the page. A table of contents isn’t really necessary, but I like seeing an overview of what’s available before I start reading. The keys of the dictionary are the blog names, and each tuple in the list consists of the article’s title and its number, as given in the running post count, n. The number will be used to create internal links in the HTML page.
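The grouping step works the same way in Python 3. In this sketch, hypothetical posts have already been sorted newest first, and integers stand in for the dates.

```python
from collections import defaultdict

# Hypothetical sorted posts: (when, blog, title, link, body, n, author, id).
posts = [
    (3, 'Six Colors', 'Post C', 'l3', 'b3', '0002', '', 'i3'),
    (2, 'Daring Fireball', 'Post B', 'l2', 'b2', '0001', '', 'i2'),
    (1, 'Six Colors', 'Post A', 'l1', 'b1', '0000', '', 'i1'),
]

# Map each blog name to its (title, post number) pairs. Appending in
# loop order keeps each blog's entries newest first.
toclinks = defaultdict(list)
for p in posts:
    toclinks[p[1]].append((p[2], p[5]))

print(dict(toclinks))
```

`defaultdict(list)` creates the empty list the first time a blog name shows up, so the loop never has to check whether a key exists.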

From this point on, it’s all HTML templating. I suppose I could’ve used one of the myriad Python libraries for this, but I didn’t feel like doing the research to figure out which would be best for my needs. The ol’ format command works pretty well.

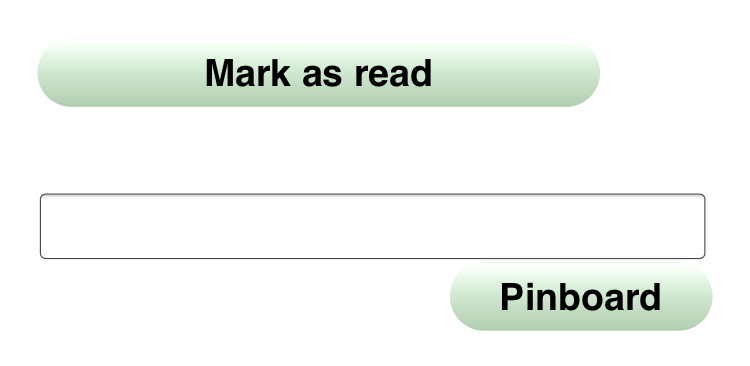

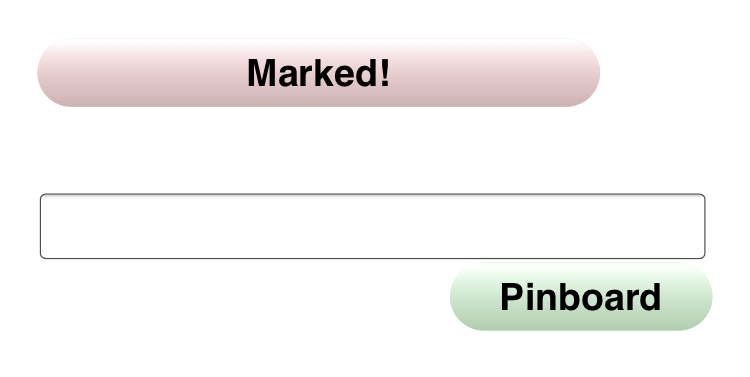

Lines 209–225 define the template for each article. It starts with the title (which links to the original article), then the blog name and author, then the date. The id attribute in the title provides the internal target for the link in the table of contents. After the post contents come two forms. The first has two hidden fields with the blog name and the article GUID and a visible button that marks the article as read. The second form has hidden fields with the article URL and title, a visible text field for Pinboard tags, and a button to add a link to the original article to my Pinboard list. We’ll see later how these buttons work.

Lines 227–236 run each post through that template and concatenate the results into one long stretch of HTML that will make up the bulk of the body of the page.
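Here is a Python 3 sketch of filling the template for one post. In Python 3, `urllib.quote_plus` becomes `urllib.parse.quote_plus` and the `.encode('utf8')` calls go away. `ITEM_TEMPLATE` is a hypothetical, cut-down version of `listTemplate` that keeps only the title line and the hidden fields of the "Mark as read" form. `render_item` is also a hypothetical name.

```python
from urllib.parse import quote_plus

# Cut-down, hypothetical version of listTemplate.
ITEM_TEMPLATE = '''<li>
<p class="title" id="{5}"><a href="{3}">{2}</a></p>
<form name="readform{5}">
<input type="hidden" name="blog" value="{8}" />
<input type="hidden" name="id" value="{9}" />
</form>
</li>'''

def render_item(post, timestamp):
    """post is (when, blog, title, link, body, n, author, id)."""
    q = [timestamp] + list(post[1:])            # slots 0-7
    q += [quote_plus(q[1]), quote_plus(q[7]),   # slots 8-9: blog, id
          quote_plus(q[2]), quote_plus(q[3])]   # slots 10-11: title, link
    # str.format ignores positional arguments the template doesn't use.
    return ITEM_TEMPLATE.format(*q)
```

Percent-encoding the hidden values means a quotation mark or ampersand in a blog name or GUID can't break out of the `value="..."` attribute.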

Line 239 defines a template for a table of contents entry (note the internal link), and Lines 240–250 then use that template to assemble the toclinks dictionary into the HTML for the table of contents.
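In Python 3, `dict.keys()` returns a view with no `sort` method, so Lines 241–242 would need `sorted()` instead. Here is a sketch of the table-of-contents assembly written that way. `render_toc` is a hypothetical name.

```python
TOC_TEMPLATE = '<li class="toctitle"><a href="#{1}">{0}</a></li>\n'

def render_toc(toclinks):
    """Build table-of-contents HTML from a {blog: [(title, n), ...]} dict,
    with blogs in alphabetical order."""
    toc = ''
    for blog in sorted(toclinks):
        toc += '<p class="tocblog">{0}</p>\n<ul class="rss">\n'.format(blog)
        for title, n in toclinks[blog]:
            toc += TOC_TEMPLATE.format(title, n)
        toc += '</ul>\n'
    return toc
```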

The last piece, Lines 253–460, assembles and outputs the final, full HTML file. It’s as long as it is because I wanted a single, self-contained file with all the CSS and JavaScript in it. I’m sure this doesn’t comport with best practices, but I’ve noticed that best practices in web programming and design change more often than I have time to keep track of. Whenever I need to change something, I know it’ll be here in getfeeds.

The CSS is in Lines 257–410 and is set up to look decent (to me) on my computer, iPad, and iPhone. There’s a lot I don’t know about responsive web design, and I’m sure it shows here.
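Because the entire page goes through `format` in Line 460, every literal brace in the CSS and JavaScript is doubled. Otherwise `format` would treat it as a replacement field. Here is a stripped-down sketch of the trick. The template is a hypothetical miniature, not the real page.

```python
# str.format treats { and } as field delimiters, so literal CSS braces
# are written as {{ and }}; format() turns each pair back into one brace.
PAGE = '''<html>
<head>
<style>
body {{
  background-color: #555;
}}
</style>
</head>
<body>
<ul class="rss">
{}
</ul>
</body>
</html>'''

page = PAGE.format('<li>first post</li>')
print(page)
```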

Lines 412–426 and Lines 428–441 define the markAsRead and addToPinboard JavaScript functions, which are activated by the buttons described above. These are basic AJAX functions that do not rely on any outside library. They’re based on what I read in David Flanagan’s JavaScript: The Definitive Guide and, I suspect, a Stack Overflow page or two that I forgot to preserve the links to. There’s a decent chance they don’t work in Internet Explorer, which I will worry about in the next life.

The markAsRead function triggers this addreaditem.py script on the server:

```python
 1: #!/usr/bin/python
 2: # coding=utf8
 3:
 4: import sqlite3
 5: import cgi
 6: import sys
 7: import urllib
 8: import cgitb
 9:
10: def addItem(db, blog, id):
11:   add = 'insert into items (blog, id) values (?, ?)'
12:   db.execute(add, (blog, id))
13:   db.commit()
14:
15: def markedItem(db, blog, id):
16:   check = 'select * from items where blog=? and id=?'
17:   return db.execute(check, (blog, id)).fetchone()
18:
19: # Connect to database of read items
20: db = sqlite3.connect('/path-to/read-feeds.db')
21:
22: # Get the item from the request and add it to the database
23: form = cgi.FieldStorage()
24: blog = urllib.unquote_plus(form.getvalue('blog')).decode('utf8')
25: id = urllib.unquote_plus(form.getvalue('id')).decode('utf8')
26: if markedItem(db, blog, id):
27:   answer = 'Already marked'
28: else:
29:   addItem(db, blog, id)
30:   answer = 'OK'
31:
32: minimal='''Content-Type: text/html
33:
34: <html>
35: <head>
36: <title>Add Item</title>
37: <body>
38: <h1>{}</h1>
39: </body>
40: </html>'''.format(answer)
41:
42: print(minimal)
```

There’s not much to this script. It uses the same addItem function we saw before and a markedItem function that uses the same query we saw earlier to check whether an item is in the database. Lines 23–30 get the input from the form that called it, check whether that item is already in the database, and add it if it isn’t. There’s some minimal HTML for output, but that’s of no importance. What matters is that if the script returns a success, the markAsRead function changes the color of the button from green to red and the text of the button from “Mark as read” to “Marked!”
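The `unquote_plus` calls in Lines 24–25 undo the `quote_plus` encoding that getfeeds applied when it wrote the hidden fields. Here is a Python 3 sketch of that round trip. `encode_field` and `decode_field` are hypothetical helpers, and the `None` check is an addition that the original script doesn't have.

```python
from urllib.parse import quote_plus, unquote_plus

def encode_field(value):
    """What getfeeds does before writing a hidden form field."""
    return quote_plus(value)

def decode_field(raw):
    """What addreaditem.py does with the value the form delivers.

    In Python 3, unquote_plus returns a str decoded as UTF-8 by default,
    so no .decode('utf8') is needed. A missing field (None) is returned
    as None instead of raising.
    """
    return None if raw is None else unquote_plus(raw)
```

Anyone porting the CGI scripts should note that the `cgi` module was deprecated in Python 3.11 and removed in Python 3.13.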

The addToPinboard JavaScript function does essentially the same thing, except it triggers this addpinboarditem.py script on the server:

```python
 1: #!/usr/bin/python
 2: # coding=utf8
 3:
 4: import cgi
 5: import pinboard
 6: import urllib
 7:
 8: # Pinboard token
 9: token = 'myPinboardName:myPinboardToken'
10:
11: # Get the page info from the request
12: form = cgi.FieldStorage()
13: url = urllib.unquote_plus(form.getvalue('url')).decode('utf8')
14: title = urllib.unquote_plus(form.getvalue('title')).decode('utf8')
15: tagstr = urllib.unquote_plus(form.getvalue('tags')).decode('utf8')
16: tags = tagstr.split()
17:
18: # Add the item to Pinboard
19: pb = pinboard.Pinboard(token)
20: result = pb.posts.add(url=url, description=title, tags=tags)
21: if result:
22:   answer = "OK"
23: else:
24:   answer = "Failed"
25:
26: minimal='''Content-Type: text/html
27:
28: <html>
29: <head>
30: <title>Add To Pinboard</title>
31: <body>
32: <h1>{}</h1>
33: </body>
34: </html>'''.format(answer)
35:
36: print(minimal)
```

This script uses the Pinboard API to add a link to the original article. Line 9 defines my Pinboard credentials. Lines 12–16 extract the article and tag information from the form. Lines 19–24 connect to Pinboard and add the item to my list. If the script returns a success, the addToPinboard function changes the color of the button from green to red and the text of the button from “Pinboard” to “Saved!”
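The pinboard module is a wrapper around Pinboard’s HTTP API. If you'd rather not install it, here is a sketch that only builds the request URL for the v1 `posts/add` endpoint, which you could then fetch with any HTTP client. The parameter names follow my reading of Pinboard’s API documentation, so check them there before relying on this. `pinboard_add_url` is a hypothetical name.

```python
from urllib.parse import urlencode

API = 'https://api.pinboard.in/v1/posts/add'

def pinboard_add_url(token, url, title, tagstr):
    """Build a posts/add request URL.

    token has the form 'username:TOKEN'. Pinboard calls the bookmark
    title 'description'. Tags are sent as one space-separated string.
    """
    params = {
        'auth_token': token,
        'url': url,
        'description': title,
        'tags': ' '.join(tagstr.split()),  # collapse stray whitespace
        'format': 'json',
    }
    return API + '?' + urlencode(params)
```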

The overall system is controlled by this short shell script, runrss.sh:

```bash
1: #!/bin/bash
2:
3: /path/to/getfeeds > /other/path/to/rsspage-tmp.html
4: cd /other/path/to
5: mv rsspage-tmp.html rsspage.html
```

Line 3 runs the getfeeds script, sending the HTML output to a temporary file. Line 4 then changes to the directory that contains the temporary file, and Line 5 renames it. The file I direct my browser to is rsspage.html. This seeming extra step with the temporary file is there because the getfeeds script takes several seconds to run, and if I sent its output directly to rsspage.html, that file would be in a weird state during that run time. I don’t want to browse the page when it isn’t finished.
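The temp-file-then-rename pattern works because `mv` within a single filesystem is just a rename, so readers see either the old page or the new one, never a partial file. Here is the same idea as a Python 3 sketch, in case the shell wrapper were ever folded into the script. `write_atomically` is a hypothetical name.

```python
import os
import tempfile

def write_atomically(path, text):
    """Write text to a temp file in the same directory, then rename it over path.

    os.replace is atomic on POSIX when both names are on the same filesystem.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, suffix='.tmp')
    try:
        with os.fdopen(fd, 'w', encoding='utf-8') as f:
            f.write(text)
        # mkstemp creates the file with mode 0600; loosen it so a web
        # server running as another user can still read the page.
        os.chmod(tmp, 0o644)
        os.replace(tmp, path)
    except BaseException:
        os.unlink(tmp)  # only reached if the rename didn't happen
        raise
```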

Finally, runrss.sh is executed periodically throughout the day by cron. The crontab entry is

```
*/20 0,6-23 * * * /path/to/runrss.sh
```

This runs the script every 20 minutes from 6:00 am through 11:40 pm, plus three more runs in the midnight hour (12:00, 12:20, and 12:40 am).
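To check which times that entry matches, here is a tiny Python sketch that expands the minute and hour fields by hand. It does not parse crontab syntax in general; the hour list is written out to match this one entry.

```python
def cron_times(minute_step=20, hours=(0,) + tuple(range(6, 24))):
    """List the HH:MM times matched by '*/20 0,6-23 * * *' in one day."""
    return ['{:02d}:{:02d}'.format(h, m)
            for h in hours
            for m in range(0, 60, minute_step)]

times = cron_times()
print(len(times), times[:4], times[-1])  # 57 ['00:00', '00:20', '00:40', '06:00'] 23:40
```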

So that’s it. Three Python scripts, one of which is long but mostly HTML templating, a short shell script, and a crontab entry. Was it easier to do this than set up a Feedbin (or whatever) account? Of course not. But I won’t have to worry if I see that Feedbin’s owners have written a Medium post.