Commenting on Shortcuts, Part 2

January 26, 2020 at 12:40 PM by Dr. Drang

In yesterday’s post, I showed how I use my splitflow script to help me explain the workings of whatever shortcuts I write about here. Today, we’ll go through splitflow itself to see how it works.

Let’s start with the code itself:

python:

      1:  #!/usr/bin/env -S ${HOME}/opt/anaconda3/envs/py35/bin/python
      2:
      3:  import cv2
      4:  import numpy as np
      5:  from datetime import date
      6:  from docopt import docopt
      7:  from urllib.parse import quote
      8:  import sys
      9:
     10:  usage = """Usage:
     11:    splitflow [-dt sss] FILE...
     12:
     13:  Split out the individual steps from a series of screenshots of
     14:  Shortcuts and output a <table> for inserting into a blog post.
     15:
     16:  Options:
     17:    -d      Do NOT add the date to the beginning of the filename
     18:    -t sss  Add an overall title to the output filenames
     19:    -h      Show this help message and exit
     20:  """
     21:
     22:  # Handle arguments
     23:  args = docopt(usage)
     24:  sNames = args['FILE']    # the screenshot filenames
     25:  namePrefix = args['-t'] + ' ' if args['-t'] else ''
     26:  if args['-d']:
     27:      datePrefix = ''
     28:  else:
     29:      today = date.today()
     30:      datePrefix = today.strftime('%Y%m%d-')
     31:
     32:  # There are three template images we want to search for
     33:  accept = cv2.imread("accepts.png")
     34:  topAction = cv2.imread("topAction.png")
     35:  botAction = cv2.imread("botAction.png")
     36:
     37:  # Template dimensions we'll use later
     38:  acceptHeight = accept.shape[0]
     39:  bActHeight = botAction.shape[0]
     40:
     41:  # The threshold for matches was determined through trial and error
     42:  threshold = 0.99998
     43:
     44:  # Keep track of the step numbers as we go through the files
     45:  n = 1
     46:
     47:  # Set the URL path prefix
     48:  URLPrefix = 'https://leancrew.com/all-this/images2020/'
     49:
     50:  # Start assembling the table HTML
     51:  table = []
     52:  table.append('<table width="100%">')
     53:  table.append('<tr><th>Step</th><th>Action</th><th>Comment</th></tr>')
     54:
     55:  rowTemplate = '''<tr>
     56:  <td>{0}</td>
     57:  <td width="50%"><img width="100%" src="{1}{2}" alt="{3}" title="{3}" /></td>
     58:  <td>Step_{0}_comment</td>
     59:  </tr>'''
     60:
     61:  # Loop through all the screenshot images
     62:  for sName in sNames:
     63:      sys.stderr.write(sName + '\n')
     64:      whole = cv2.imread(sName)
     65:      width = whole.shape[1]
     66:
     67:      # Find the Accepts box, if there is one
     68:      aMatch = cv2.matchTemplate(whole, accept, cv2.TM_CCORR_NORMED)
     69:      minScore, maxScore, minLoc, (left, top) = cv2.minMaxLoc(aMatch)
     70:      if maxScore >= threshold:
     71:          bottom = top + acceptHeight
     72:          box = whole[top:bottom, :, :]
     73:          desc = '{}Step 00'.format(namePrefix)
     74:          fname = '{}{}Step 00.png'.format(datePrefix, namePrefix)
     75:          cv2.imwrite(fname, box)
     76:          table.append(rowTemplate.format(0, URLPrefix, quote(fname), desc))
     77:          sys.stderr.write(' ' + fname + '\n')
     78:
     79:      # Set up all the top and bottom corner action matches
     80:      tMatch = cv2.matchTemplate(whole, topAction, cv2.TM_CCORR_NORMED)
     81:      bMatch = cv2.matchTemplate(whole, botAction, cv2.TM_CCORR_NORMED)
     82:      actionTops = np.where(tMatch >= threshold)[0]
     83:      actionBottoms = np.where(bMatch >= threshold)[0]
     84:
     85:      # Adjust the coordinate lists so they don't include partial steps
     86:      # If the highest bottom is above the highest top, get rid of it
     87:      if actionBottoms[0] < actionTops[0]:
     88:          actionBottoms = actionBottoms[1:]
     89:
     90:      # If the lowest top is below the lowest bottom, get rid of it
     91:      if actionTops[-1] > actionBottoms[-1]:
     92:          actionTops = actionTops[:-1]
     93:
     94:      # Save the steps
     95:      for i, (top, bot) in enumerate(zip(actionTops, actionBottoms)):
     96:          step = n + i
     97:          bottom = bot + bActHeight
     98:          box = whole[top:bottom, :, :]
     99:          desc = '{}Step {:02d}'.format(namePrefix, step)
    100:          fname = '{}{}Step {:02d}.png'.format(datePrefix, namePrefix, step)
    101:          cv2.imwrite(fname, box)
    102:          table.append(rowTemplate.format(step, URLPrefix, quote(fname), desc))
    103:          sys.stderr.write(' ' + fname + '\n')
    104:
    105:      # Increment the starting number for the next set of steps
    106:      n = step + 1
    107:
    108:      sys.stderr.write('\n')
    109:
    110:  # End the table HTML and print it
    111:  table.append('</table>')
    112:  print('\n'.join(table))

It’s reasonably well commented, I think, but there can always be further explanation.

The first thing you probably notice is the weird shebang line at the top. Normally, that would be simply

#!/usr/bin/env python

to have the script run by my default Python installation. That’s currently a Python 3.7 system that I installed through Anaconda, which is a really good system for getting you away from the outdated version of Python that comes with macOS and for managing different Python “environments.” Unfortunately, the key module needed for splitflow is the OpenCV module, and Anaconda currently doesn’t have an OpenCV module that works with Python 3.7. It does, however, have an OpenCV module that’s compatible with Python 3.5, so I installed a Python 3.5 environment in order to run splitflow. Because Python 3.5 is not my default Python, I have to tell the shebang line explicitly where it is. The -S option tells env to interpret environment variables, and that combined with

${HOME}/opt/anaconda3/envs/py35/bin/python

points the script to the Python 3.5 interpreter.1

Of the modules imported in Lines 3–8, only cv2, which is the OpenCV module, and docopt, which I learned about from Rob Wells, aren’t in the standard library. I installed both of them via Anaconda.

The usage string that starts on Line 10 is what’s shown when you invoke splitflow with the -h option. It describes how the command works and, through the magic of docopt, tells the script how to handle its command line options.

The command-line parsing is done by docopt in Lines 23–30. Three variables get defined here for later use:

- sNames, a list of all the files passed as arguments to splitflow.

- namePrefix, the title portion of the name given to each of the image files created by the script. If splitflow is called with no -t option, there’s no such string in the filename.

- datePrefix, the yyyymmdd string that I usually prepend to every image file I use here on the blog. The -d option turns this prefix off.
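As a rough sketch of how those two prefixes end up in a filename, here is the same logic pulled into a small function. The function name build_prefixes and its today parameter are hypothetical, added only so the example can be run for a fixed date; the script itself does this inline.

```python
from datetime import date

def build_prefixes(title, no_date, today=None):
    """Return (namePrefix, datePrefix) following the logic of Lines 25-30."""
    name_prefix = title + ' ' if title else ''
    if no_date:
        date_prefix = ''
    else:
        today = today or date.today()
        date_prefix = today.strftime('%Y%m%d-')
    return name_prefix, date_prefix

# Roughly what `splitflow -t Unpaid ...` would produce on January 26, 2020
name_prefix, date_prefix = build_prefixes('Unpaid', False, date(2020, 1, 26))
fname = '{}{}Step {:02d}.png'.format(date_prefix, name_prefix, 1)
print(fname)   # 20200126-Unpaid Step 01.png
```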

Lines 33–35 read in the template files that splitflow searches for in the screenshots. We saw them yesterday.

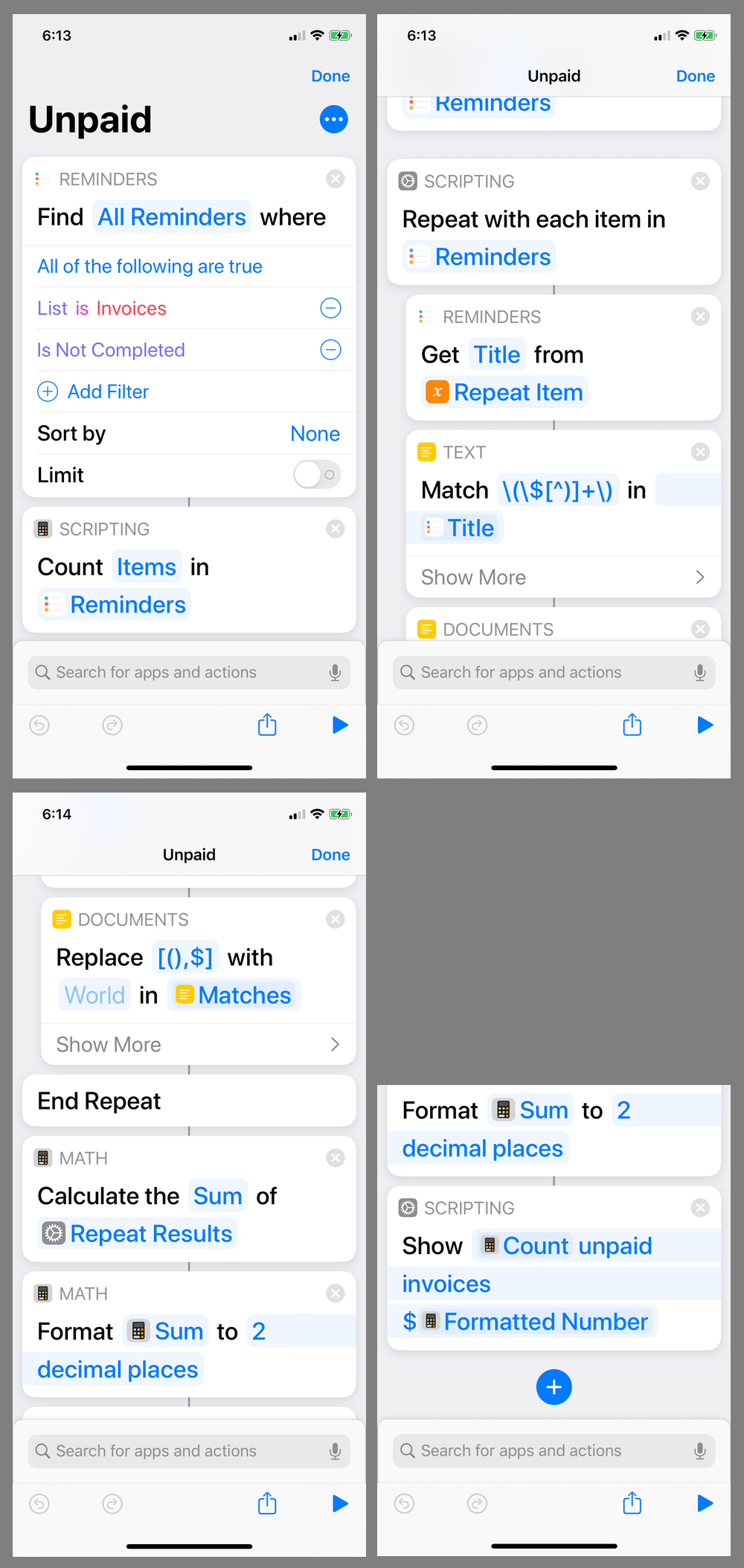

The image on the left is accepts.png, the one in the center is topAction.png, and the one on the right is botAction.png. At present, I use a particular directory for running splitflow. This directory is where the template image files are kept and where I save the screenshot files. I cd to this directory, run splitflow there, and that’s where the individual step image files are saved. This is not a great system, as it keeps these permanent template files in the same place as the temporary images I’m processing. At some point, I’ll move the template images to a more protected location, away from where I save the screenshots.

It’s worth pausing here to define exactly what imread does. For color images like we have, it reads the given image file and returns a three-dimensional NumPy array. The first index of the array is for the row; its length is the height of the image. The second index is for the column; its length is the width of the image. The third is for the color values; its length is three (one value each for blue, green, and red, which is the order OpenCV stores them in). The advantage of using this data structure for an image is that all of the array calculations that NumPy and SciPy provide can now be applied to the image values. That’s how the template images are matched to the screenshots. Also, this allows us to use simple array slicing to copy out parts of an image.
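Here is a small sketch of that array layout. To keep it runnable without an image file, it builds a blank array with NumPy as a stand-in for what imread would return; the dimensions are made up.

```python
import numpy as np

# Stand-in for cv2.imread(): a blank "screenshot" 200 pixels tall, 100 wide
height, width = 200, 100
whole = np.zeros((height, width, 3), dtype=np.uint8)

rows, cols, channels = whole.shape
print(rows, cols, channels)     # 200 100 3

# Slice out rows 50 through 79, keeping every column and color channel
box = whole[50:80, :, :]
print(box.shape)                # (30, 100, 3)
```

The slice in the last step has the same form as the one the script uses to copy a step out of a screenshot.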

The next few sections define variables that’ll be used later in the script. Lines 38–39 set geometric properties of two of the template images, Line 45 initializes the step number, and Line 48 sets the URL path used in the src attribute of the <img> tags in the table.

The threshold value in Line 42 is a little more complicated. As we’ll see further down, OpenCV has a matchTemplate function that sort of overlays the template onto the target image and runs a calculation to see how well they match. The calculation is run for each possible overlay position and the resulting value is a measure of how good the match is when the template is at that position. The return value of matchTemplate is a two-dimensional array of these goodness-of-match values for each possible overlay position.

OpenCV supports a handful of these goodness-of-match calculations. The one I’ve chosen, a normed cross-correlation, results in a number between 0 and 1. Through trial and error with these template images and a series of screenshots, I learned that 0.99998 was a good threshold value for matching the Accepts box and the top and bottom corners of action steps. Using a smaller threshold resulted in false matches with the template a pixel or two away from its best fit. Using a larger threshold often resulted in no matches.2
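To make the goodness-of-match idea concrete, here is a minimal NumPy sketch of a normed cross-correlation on a small grayscale array. The function name ccorr_normed and the random test image are invented for illustration. It follows the formula documented for OpenCV’s TM_CCORR_NORMED method, but it is a slow, single-channel toy and not what matchTemplate does internally.

```python
import numpy as np

def ccorr_normed(image, template):
    """Normed cross-correlation score for every overlay position."""
    image = image.astype(np.float64)
    template = template.astype(np.float64)
    th, tw = template.shape
    out_h = image.shape[0] - th + 1
    out_w = image.shape[1] - tw + 1
    scores = np.zeros((out_h, out_w))
    t_norm = np.sqrt(np.sum(template ** 2))
    for y in range(out_h):
        for x in range(out_w):
            patch = image[y:y + th, x:x + tw]
            denom = t_norm * np.sqrt(np.sum(patch ** 2))
            scores[y, x] = np.sum(patch * template) / denom if denom else 0.0
    return scores

# A random 12x10 "image" and a 3x3 template cut from row 4, column 2
rng = np.random.default_rng(0)
image = rng.integers(1, 256, size=(12, 10))
template = image[4:7, 2:5].copy()

scores = ccorr_normed(image, template)
best = tuple(int(i) for i in np.unravel_index(np.argmax(scores), scores.shape))
print(scores.shape)   # one score per overlay position: (10, 8)
print(best)           # (row, column) of the best match
print(scores[best])   # very close to 1 at the exact match
```

Even at the exact match, the score comes out of floating point arithmetic, so comparing it against a threshold slightly below 1 is safer than testing for equality with 1.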

Lines 51–53 initialize table, a list variable that will eventually contain all of the lines of the HTML table that will be output. In the old days, Python’s string concatenation routines were inefficient, and Python programmers were encouraged to build up long strings by accumulating all the parts into a list and then joining them. I don’t think that’s still as important as it used to be, but it’s a habit I—and many other Python programmers—still adhere to.

Lines 55–59 define a template string for each row of the table. We’ll use this in a couple of places later in the program.
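As a quick illustration of how the row template and the list-then-join habit fit together, here is a sketch that fills in one row by hand. The filename and description are typed in directly rather than computed, and only the standard library is needed.

```python
from urllib.parse import quote

URLPrefix = 'https://leancrew.com/all-this/images2020/'
rowTemplate = '''<tr>
<td>{0}</td>
<td width="50%"><img width="100%" src="{1}{2}" alt="{3}" title="{3}" /></td>
<td>Step_{0}_comment</td>
</tr>'''

table = ['<table width="100%">']

fname = '20200126-Unpaid Step 01.png'
desc = 'Unpaid Step 01'
# quote() percent-encodes the spaces so the filename is safe in a URL
row = rowTemplate.format(1, URLPrefix, quote(fname), desc)
table.append(row)

table.append('</table>')
html = '\n'.join(table)
print(html)
```

The Step_1_comment placeholder in the third cell is what gets replaced by hand with the actual comment after the table is pasted into a post.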

With Line 62, we’re done with all the setup and will start processing in earnest by looping through the screenshot files in sNames. In a nutshell, the code in this loop does the following:

- Read in a screenshot file and create a NumPy array as described above. (Line 64)

- Look for an Accepts step. If there is one, copy it from the screenshot, save it to a file, and then add a row to the table HTML. (Lines 68–77)

- Find all of the top and bottom left corners of action steps. Get rid of any bottom corner that doesn’t have a corresponding top and any top corner that doesn’t have a corresponding bottom. Then crop the action steps from the screenshot, save them, and add a new row to the table HTML. Increment the step count for each action step found. (Lines 80–106)

You’ll notice that there are some sys.stderr.write lines within the loop. I put these in to show the progress of the script as it runs. For the screenshots processed in yesterday’s post, these lines were printed as splitflow ran:

A1.png

20200126-Unpaid Step 01.png

20200126-Unpaid Step 02.png

A2.png

20200126-Unpaid Step 03.png

20200126-Unpaid Step 04.png

20200126-Unpaid Step 05.png

A3.png

20200126-Unpaid Step 06.png

20200126-Unpaid Step 07.png

20200126-Unpaid Step 08.png

20200126-Unpaid Step 09.png

A4.png

20200126-Unpaid Step 10.png

This works not only as a sort of progress bar, but also as a sanity check. I generally have a decent idea of how many steps are in each of the screenshots, and this shows me whether splitflow is finding them. If we look again at the four screenshots being processed

we see that there are indeed two action steps in the first, three in the second, four in the third, and one in the fourth. I have these progress lines sent to standard error instead of standard output because I don’t want them to interfere with the table HTML. If I want to save the table HTML to a file, I can use this redirection

splitflow -t Unpaid A* > table.html

and the progress lines will be displayed on the Terminal while only the table goes into the file.

In searching for the Accepts box, Line 68 runs the matchTemplate function and puts the array of goodness-of-match values into aMatch. Line 69 then uses minMaxLoc to find the best match. But the best match isn’t necessarily good enough: there will always be a best match, even if there isn’t an Accepts box in the screenshot. So Line 70 acts as a gatekeeper. Only matches that meet or exceed the threshold pass.

If a match passes the gatekeeper, Line 71 uses the height of the Accepts template (saved back on Line 38) and the top value that came out of the minMaxLoc call on Line 69 to calculate the bottom of the Accepts box. Line 72 then uses array slicing to copy the Accepts box out of the screenshot. We then do some simple string processing to get the names right and save the Accepts box as Step 00 in Line 75.
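The best-match-then-gatekeeper pattern can be sketched in plain NumPy. The helper best_match below is a hypothetical stand-in for the maximum half of minMaxLoc, and the score array, template height, and blank screenshot are toy values, not sized the way real matchTemplate output would be.

```python
import numpy as np

def best_match(scores):
    """Return (maxScore, (left, top)) for a 2-D array of match scores."""
    top, left = np.unravel_index(np.argmax(scores), scores.shape)
    return scores[top, left], (int(left), int(top))

# Toy goodness-of-match values; only one clears the threshold
scores = np.array([[0.10, 0.20, 0.30],
                   [0.40, 0.99999, 0.50],
                   [0.60, 0.70, 0.80]])
threshold = 0.99998
acceptHeight = 40                                  # made-up template height
whole = np.zeros((100, 60, 3), dtype=np.uint8)     # blank stand-in screenshot

maxScore, (left, top) = best_match(scores)
if maxScore >= threshold:
    bottom = top + acceptHeight
    box = whole[top:bottom, :, :]
    print(left, top, box.shape)    # 1 1 (40, 60, 3)
```

Note that the location comes back as (left, top), that is, column first and then row, which is the opposite of the row-then-column order used to index the array.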

The handling of action steps is similar, except we can’t look for just the best match because there may be more than one. After running matchTemplate against both the top and bottom template images (Lines 80–81), we use the NumPy where function to filter out the goodness-of-match values, retaining only those above the threshold. After Lines 82–83, actionTops contains the vertical coordinates of all the top corners of actions in the screenshot and actionBottoms contains the vertical coordinates of all the bottom corners.3
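Here is a small sketch of what where returns for a two-dimensional array of scores. The values in tMatch are invented; the point is that the first element of the result holds the row indices, which are the vertical coordinates.

```python
import numpy as np

threshold = 0.99998
# Toy scores: rows are vertical positions, columns are horizontal positions
tMatch = np.array([[0.2,     0.5, 0.1],
                   [1.0,     0.3, 0.2],
                   [0.4,     0.6, 0.1],
                   [0.99999, 0.2, 0.3]])

rows, cols = np.where(tMatch >= threshold)
print(rows)   # [1 3]
print(cols)   # [0 0]

# Taking element [0] keeps only the vertical coordinates
actionTops = np.where(tMatch >= threshold)[0]
```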

We then use some simple logic in Lines 85–92 to get rid of partial steps. A bottom corner that’s above the highest top corner must be from a partial step. Similarly for a top corner that’s below the lowest bottom corner. When this is done, actionTops and actionBottoms are lined up—the corresponding elements are the top and bottom coordinates of all the full steps in the screenshot.
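The trimming logic can be tried out on its own with plain lists. In this sketch the function name trim_partial_steps and the coordinates are made up, and the checks for empty lists are an addition of mine that the script does not have.

```python
def trim_partial_steps(tops, bottoms):
    """Drop a leading bottom and a trailing top that belong to partial steps."""
    tops, bottoms = list(tops), list(bottoms)
    # A bottom corner above the highest top corner is a cut-off step
    if tops and bottoms and bottoms[0] < tops[0]:
        bottoms = bottoms[1:]
    # A top corner below the lowest bottom corner is a cut-off step
    if tops and bottoms and tops[-1] > bottoms[-1]:
        tops = tops[:-1]
    return tops, bottoms

# A screenshot with a partial step at each end and two full steps between
tops = [120, 480, 900]
bottoms = [60, 400, 820]
tops, bottoms = trim_partial_steps(tops, bottoms)
pairs = list(zip(tops, bottoms))
print(pairs)   # [(120, 400), (480, 820)]
```

Without the emptiness checks, a screenshot with no matching corners at all would raise an IndexError on the first comparison.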

Lines 95–103 then loop through these top/bottom pairs and copy the action boxes using the same array slicing logic used with the Accepts box. And the table list gets another row, too.

Finally, with all the step images saved and all the rows added to table, we close out table in Line 111 and print out the HTML in Line 112.

Phew! The overall logic is pretty straightforward, but there are lots of little things that need to be handled correctly.

As I’ve said, this is a new script and is certainly not in its final form. Apart from figuring out where I want to keep the template images, I should add a section that uploads the step images to my server. And there’s some refactoring that could be done; Lines 71–76 are too similar to Lines 97–102.
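One possible shape for that refactoring is a single helper that crops, saves, and builds the table row for any step, including Step 00. This is only a sketch: the name save_step, its parameter list, and the injected write_image function are all hypothetical. In the real script write_image would be cv2.imwrite; here a fake writer is used so the example runs without OpenCV.

```python
from urllib.parse import quote

def save_step(whole, top, bottom, step, date_prefix, name_prefix,
              url_prefix, row_template, write_image):
    """Crop rows top..bottom, save them, and return (fname, table row)."""
    box = whole[top:bottom]
    desc = '{}Step {:02d}'.format(name_prefix, step)
    fname = '{}{}.png'.format(date_prefix, desc)
    write_image(fname, box)
    row = row_template.format(step, url_prefix, quote(fname), desc)
    return fname, row

# Toy stand-ins: a list of pixel rows and a writer that just records calls
whole = [[0] * 4 for _ in range(5)]
saved = {}

def fake_write(fname, box):
    saved[fname] = box

fname, row = save_step(whole, 1, 3, 0, '20200126-', 'Unpaid ',
                       'https://example.com/',
                       '<td>{0}</td><img src="{1}{2}" alt="{3}" />',
                       fake_write)
print(fname)   # 20200126-Unpaid Step 00.png
print(row)
```

Both the Accepts branch and the action-step loop could then call the same function, passing 0 or the running step number.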

But even in this early stage, splitflow has been a big help. I like the tabular presentation of shortcuts, but I would never do it if it meant slicing up the screenshots and assembling the table by hand. I’m already thinking about extending it to handle Keyboard Maestro macros.

1. Yes, I know Python is not strictly an interpreter, but it’s easier to treat it as such when discussing shebang lines. ↩

2. When I started writing splitflow, I thought I could use a threshold of 1. Because both the templates and the screenshots are PNGs, I thought the matches should be exact, pixel for pixel. But I suspect that either the antialiasing around the corner edges or some floating point roundoff during the cross-correlation calculations is preventing a perfect match. ↩

3. Strictly speaking, the vertical coordinates in actionBottoms are a distance bActHeight above the bottom corners. We correct for this in Line 97. ↩