Proofreading with ChatGPT

October 12, 2025 at 11:21 AM by Dr. Drang

I was talking with David Sparks and Stephen Hackett recently, and the conversation came around to fixing typos and other errors in our writing. I think the typos and poorly constructed sentences here at ANIAT have been reduced since I started having my Mac read my posts aloud to me.

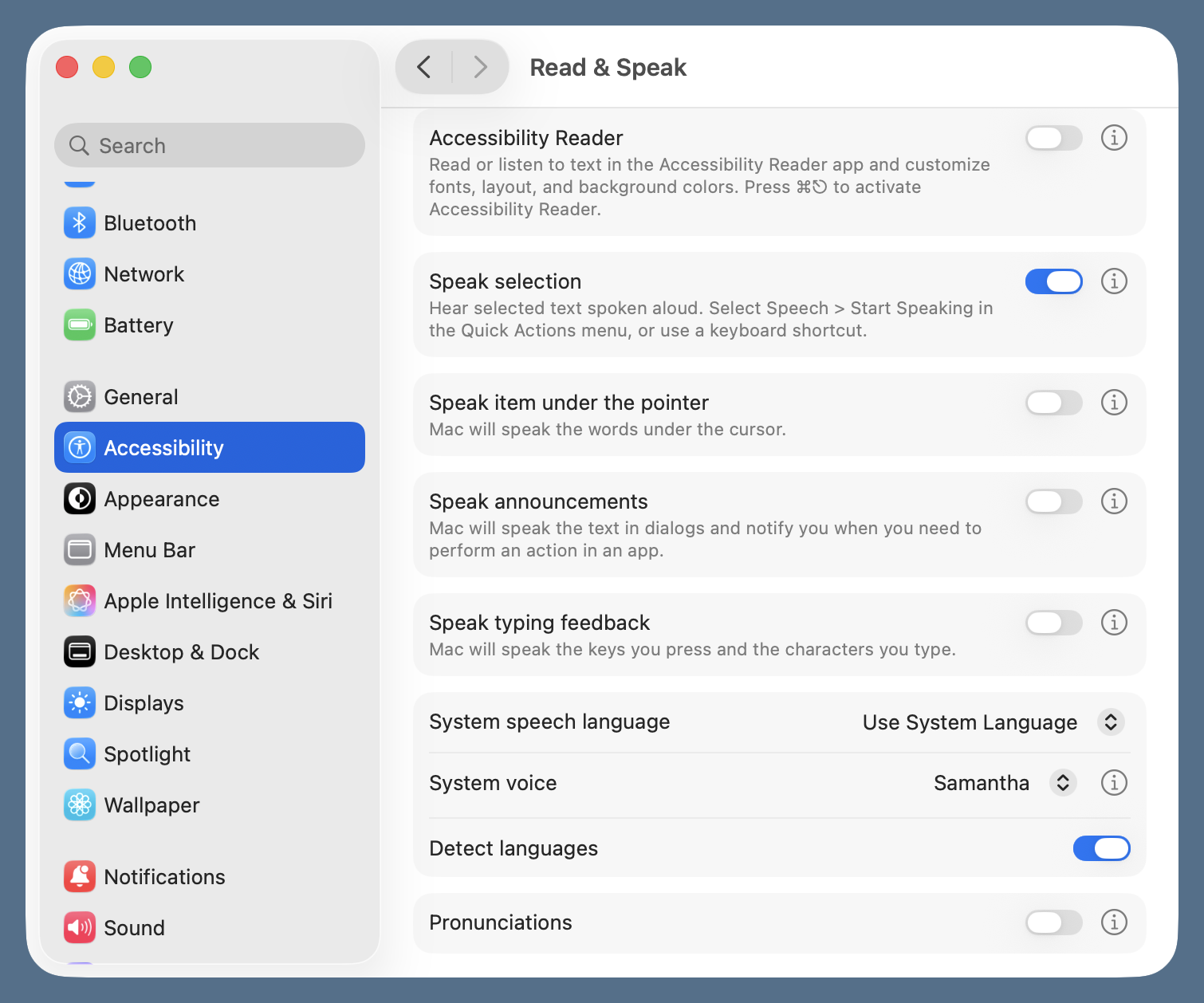

I do this by converting the Markdown source of a post into HTML and previewing it in Safari. Then I go through the post, selecting one paragraph at a time and typing ⌥⎋ (that’s Option-Escape) to speak the selected text. This works because I have the corresponding option turned on in the Accessibility settings. You might want to read Apple’s instructions for this.

It’s embarrassing how many mistakes I find this way, but what’s more embarrassing is that mistakes still squeak through. David suggested having an LLM read my posts and tell me where it thinks the mistakes are. Make sure, he emphasized, to tell it to just find and report the mistakes—don’t fix them. And because many of my posts contain programs, I should also tell it to ignore sections of code.

So I did just that, albeit for a post I’d already published, and I thought it worthwhile to go through the results.

I meant to use both ChatGPT and Claude, but I messed up and sent a different (partially corrected) version of the text to Claude, so I’m going to ignore its results.

Here’s the prompt I gave to ChatGPT:

> Find typographical errors and grammatical mistakes in the following Markdown text. Do not make any changes, just tell me what you think should be changed. Ignore all embedded URLs and programming code.

The text I gave it was the Markdown source of my most recent post, the one about scoring my incorrect basketball trivia answers by calculating things like its Levenshtein distance from the correct answer. What follows is ChatGPT’s analysis of the text and my comments.

Inline code discrepancy:

You mention the wrong answer as ADCB but later say “you can’t go from ACDB to BDCA.” That looks like a typo (ACDB instead of ADCB).

This is the best of ChatGPT’s findings. In a post that includes a discussion of letter transposition errors, having (non-deliberate) letter transposition errors is a big mistake. After reading this, I searched for ACDB, ADCB, BDCA, and BCDA to find this sort of mistake and make sure my right and wrong answers were always right.
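A search like that is easy to script. What follows is only a rough sketch, not what was actually used for the post: the function name `find_answer_strings` and the sample text are made up for illustration. It reports every occurrence of the four strings with its line number so each one can be checked by eye.

```python
import re

# The four strings searched for in the post.
ANSWERS = ("ACDB", "ADCB", "BDCA", "BCDA")

def find_answer_strings(text, answers=ANSWERS):
    """Return (line number, matched string) pairs, in document order."""
    pattern = re.compile("|".join(map(re.escape, answers)))
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in pattern.finditer(line):
            hits.append((lineno, match.group()))
    return hits

# Made-up sample text, standing in for the Markdown source of a post.
sample = "My answer was ADCB.\nYou can't go from ACDB to BDCA."
for lineno, found in find_answer_strings(sample):
    print(f"line {lineno}: {found}")
```

The script only lists the candidates. Deciding which ones are transposed still takes a human who knows what the right and wrong answers are supposed to be.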

Ellipsis usage:

In “There are words that don’t follow the ‘I before E’ rule—Levenshtein, to pick a random example—”, the em dashes are fine stylistically, but for Markdown consistency you could ensure they are either — or — consistently.

This is inexplicable for a couple of reasons:

- It has nothing to do with ellipses.

- I was consistent in my use of em dashes throughout the post.

To make sure there were no en dashes or hyphens where em dashes should be, I searched the document for them. There were no en dashes whatsoever, and all the hyphens were in either URLs or hyphenated words (like Damerau-Levenshtein).
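The same check can be sketched in a few lines of Python. This is an illustration under assumptions, not the search actually done for the post: `dash_report` and the sample string are hypothetical. It counts en and em dashes and pulls out every hyphen along with the non-space characters touching it, so URLs and hyphenated words show up whole.

```python
import re

EN_DASH = "\u2013"
EM_DASH = "\u2014"

def dash_report(text):
    """Count en and em dashes and list the context of every hyphen."""
    return {
        "en_dashes": text.count(EN_DASH),
        "em_dashes": text.count(EM_DASH),
        # Each hyphen plus the run of non-space characters around it.
        "hyphen_contexts": re.findall(r"\S*-\S*", text),
    }

# Made-up sample text containing two em dashes and two hyphens.
sample = (
    "The Damerau-Levenshtein distance\u2014to pick an example\u2014"
    "is described at https://example.com/edit-distance"
)
report = dash_report(sample)
print(report["en_dashes"], report["em_dashes"])
for context in report["hyphen_contexts"]:
    print(context)
```

A hyphen that turns up with spaces on both sides, or doubled, would be the thing to look at more closely.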

Although Markdown allows you to use double hyphens to indicate an em dash—following the typewriter tradition I learned over 50 years ago—it doesn’t require you to. I learned how to get en and em dashes via ⌥- and ⇧⌥- from The Mac is Not a Typewriter and have been using those keystrokes ever since.

Misused plural agreement:

“two switches, Majerle/Nash and Chambers/Hawkins would’ve made my answer correct.” → Should be “would have made” (avoid contraction in formal writing).

Again, the heading for this mistake doesn’t seem to match the description. Maybe the subject of the post made ChatGPT think¹ this was formal writing. Next time, I’ll point out that this is a blog post and is meant to have a conversational tone.

Comma after introductory clause (minor stylistic):

“Anyway, I got every number wrong, but I noticed that two switches…” → Grammatically fine, though “Anyway” is informal — could be replaced with “In any case,” if desired.

Again, it seems to think this is more formal writing than it is. Also, I believe an introductory “Anyway,” like most introductory words and phrases, should be followed by a comma.

Missing comma before a nonrestrictive clause:

“The Damerau-Levenshtein distance from my answer to the correct one is 3; substitution of the first character, substitution of the last character, and transposition of the two interior characters.” → Could use a colon instead of semicolon before the list: “…is 3: substitution of…”

The description of the typo is correct. I did intend for that to be a colon; my left pinky lifted off the Shift key before my right pinky did its job. But the heading is wrong.

Incorrect use of “Levenshtein calculation” example:

“Since switching letters isn’t a valid edit in a Levenshtein calculation, you can’t go from ACDB to BDCA…” → Again, that appears to be a typo — the example string ACDB should match your earlier ADCB.

This is another transposition error. Here, though, I got the interior letters backwards in both strings—it should be ADCB and BCDA—and ChatGPT found only one of the mistakes.
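To see why the transposition matters for these two strings, here is a minimal sketch of both distances written from scratch. It is not the code or the library from the original post, and the function name `edit_distance` is an assumption. With transpositions turned on it computes the restricted "optimal string alignment" variant of Damerau-Levenshtein, which is enough for four-letter answers like these.

```python
def edit_distance(a, b, transpositions=False):
    """Dynamic-programming edit distance between strings a and b.

    transpositions=False gives the Levenshtein distance (insert,
    delete, substitute). transpositions=True also counts a swap of
    two adjacent characters as a single edit.
    """
    rows, cols = len(a) + 1, len(b) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i          # delete all of a[:i]
    for j in range(cols):
        d[0][j] = j          # insert all of b[:j]
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            d[i][j] = min(
                d[i - 1][j] + 1,         # deletion
                d[i][j - 1] + 1,         # insertion
                d[i - 1][j - 1] + cost,  # substitution or match
            )
            if (transpositions and i > 1 and j > 1
                    and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]):
                # Swap of the two adjacent characters just consumed.
                d[i][j] = min(d[i][j], d[i - 2][j - 2] + 1)
    return d[-1][-1]

print(edit_distance("ADCB", "BCDA"))                       # 4
print(edit_distance("ADCB", "BCDA", transpositions=True))  # 3
```

Without transpositions, every one of the four positions has to be substituted. Allowing the interior swap brings the count down to the 3 quoted above: two substitutions and one transposition.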

Missing article:

“We’ve reduced both of these edit distances without really changing the question.” → Could be “…without really changing the nature of the question.” (Optional clarity fix.)

There’s no missing article, so we have another incorrect heading, and I think inserting “the nature” is redundant. To me, my use of “really” indicates that I’m not changing the nature of the question.

Awkward phrase:

“There are a few ways to import functions for Python.” → More idiomatic: “There are a few ways to use these functions in Python” or “to import such functions in Python.”

It wasn’t awkward, it was nonspecific. I meant to say “There are a few ways to import similar functions for Python” and have since added the missing word.

I’m glad ChatGPT pointed me to this sentence, even though it mischaracterized my mistake and gave corrections I didn’t like.

Incorrect pluralization / parallelism:

“Mathematica has the built-in functions [HammingDistance], [EditDistance], and [DamerauLevenshteinDistance]. There are a few ways to import functions for Python.” → Grammatically fine, but the flow is a little inconsistent (switches from “has built-in functions” to “ways to import functions”).

This is the same sentence flagged above. While I appreciate the desire for parallel construction, I can’t use it here. Unlike Mathematica, Python itself doesn’t have built-in edit distance functions—they have to be imported.

Comma splice or fragment:

“Since my answer can be made correct by flipping two pairs of letters, I wondered which of the other derangements can be similarly transformed.” → Fine grammatically, but stylistically “could be similarly transformed” would match past-tense context.

This is not a comma splice, so the header is wrong again. And I don’t see this as having inconsistent tense. To me, “can be made” is not past tense.

Ambiguous reference:

“Because I didn’t try to be clever in setting up the nested loops, the count variable is incremented twice for every pair of characters that can be flipped.” → Grammatically fine, but the pronoun “that” could be “which” for restrictive vs. nonrestrictive correctness.

Flatly wrong. “Can be flipped” absolutely restricts the character pairs being discussed.

Unnecessary comma in bullet:

“CDAB and ABCD, which are one-character rotations…” → The comma after ABCD is optional; can be removed for cleaner reading.

No, the commas are necessary.

Incorrect number in final sentence:

“There’d be 44 derangements in a 5-player question.” → The actual number of derangements for 5 items is 44, so it’s mathematically correct — not an error, just worth double-checking the intent (it matches your topic).

The sentence before the flagged one is: “I’m glad this question had only four players.” Maybe that confused ChatGPT into thinking I meant to get the number of derangements of four items instead of five. Anyway, there are no incorrect numbers in the final sentence.
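The numbers themselves are easy to confirm. The sketch below is a quick check rather than anything from the original post, and both function names are made up. It counts derangements two ways: by brute force over all permutations, and with the standard recurrence D(n) = (n − 1)(D(n − 1) + D(n − 2)).

```python
from itertools import permutations

def derangements(items):
    """All orderings of items in which no element stays in its original place."""
    items = tuple(items)
    return [
        p for p in permutations(items)
        if all(x != y for x, y in zip(p, items))
    ]

def count_derangements(n):
    """Number of derangements of n items, using the recurrence
    D(n) = (n - 1) * (D(n - 1) + D(n - 2)) with D(0) = 1 and D(1) = 0."""
    if n == 0:
        return 1
    if n == 1:
        return 0
    prev2, prev1 = 1, 0  # D(0), D(1)
    for k in range(2, n + 1):
        prev2, prev1 = prev1, (k - 1) * (prev1 + prev2)
    return prev1

print(len(derangements("ABCD")), count_derangements(4))   # 9 9
print(len(derangements("ABCDE")), count_derangements(5))  # 44 44
```

So a four-player question has 9 possible all-wrong answers and a five-player question has 44, which agrees with the sentence ChatGPT flagged.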

Stylistic repetition:

The phrase “the correct answer” appears very frequently; while not grammatically incorrect, it may benefit from varied phrasing for readability.

There are two reasons I tend to avoid the varied phrasing ChatGPT wants:

- In my reports for work, using different words for the same thing often led to questions. “You say ‘correct answer’ here but ‘right answer’ over here. Why did you make that distinction?” Consistent phrasing avoided stupid questions like that, and I’ve carried the habit over to my writing here.

- English isn’t the first language of many of my readers. I think sticking with the same words for the same thing is helpful for them.

So was this a success? Yes and no. I definitely found mistakes I didn’t find by listening to the post read aloud, but I also wasted some time looking into “mistakes” that weren’t. And even when ChatGPT pointed me to a sentence with an error, the error wasn’t always what ChatGPT said it was.

Overall, though, the post was improved, so I’m going to give it another try. And I’ll still have my Mac read the text back to me. In addition to finding errors, I often think of different ways to explain things while listening.

---

¹ There are people—Emily Bender being perhaps the most prominent—who get very upset with this language. “LLMs don’t think!” they complain. True enough, but as someone who has spent decades talking about how beams “want” to deflect in a certain way, I find this kind of anthropomorphism harmless. It also makes sentences about LLMs much easier to write. I suppose there is a danger that some will take the word “think” literally, but I have a higher opinion of my readers.