Sitemap evolution

November 21, 2014 at 12:42 AM by Dr. Drang

I don’t know how valuable a sitemap really is, but I decided I might as well have one. When I was running ANIAT on WordPress, the WP system (or maybe it was a plugin) made one for me, but now I need to build one on my own and keep it updated with each new post. I put together a simple system today and figured it was worth sharing how I did it.

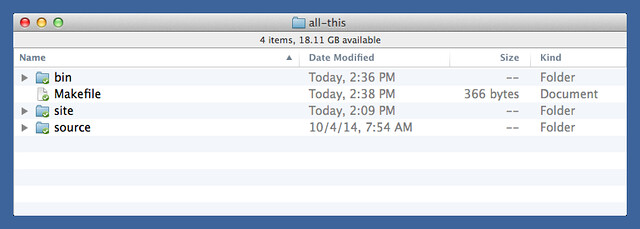

A sitemap is an XML file that is, at its heart, just a list of URLs to all the pages on your site. ANIAT is build from a directory structure that looks like this,

where source is the directory of Markdown source files, site is the directory of HTML and support files that’s mirrored to the server, and bin is the directory of scripts that build the site. The Makefile runs the scripts that build and upload the site.

Starting in the bin directory, I got a list of all the posts with a simple ls command:

ls ../site/*/*/*/index.html

This returned a long list of file paths that looked like this:

../site/2014/11/more-rss-mess/index.html
../site/2014/11/post-post-post/index.html
../site/2014/11/snell-scripting/index.html
../site/2014/11/the-rss-mess/index.html
../site/2014/11/three-prices/index.html

The simplest way to turn these into URLs was by piping them through awk,

ls ../site/*/*/*/index.html | awk -F/ '{printf "http://www.leancrew.com/all-this/%s/%s/%s/\n", $3, $4, $5}'

which returned lines like this:

http://www.leancrew.com/all-this/2014/11/more-rss-mess/
http://www.leancrew.com/all-this/2014/11/post-post-post/
http://www.leancrew.com/all-this/2014/11/snell-scripting/
http://www.leancrew.com/all-this/2014/11/the-rss-mess/
http://www.leancrew.com/all-this/2014/11/three-prices/

The -F/ option told awk to split each line on the slashes, and the $3, $4, and $5 are the third, fourth, and fifth fields of the split line.
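For comparison, here is a minimal Python 3 sketch of the same field splitting. The function name `path_to_url` and the `BASE_URL` constant are made up for illustration; only the URL prefix and the path layout come from the commands above.

```python
# Hypothetical helper mirroring the awk one-liner; not part of the original scripts.
BASE_URL = "http://www.leancrew.com/all-this"  # prefix taken from the awk command


def path_to_url(path):
    """Turn '../site/YYYY/MM/slug/index.html' into the post's URL."""
    # Splitting on '/' gives ['..', 'site', 'YYYY', 'MM', 'slug', 'index.html'].
    # awk numbers fields from 1 and Python indexes from 0, so awk's
    # $3, $4, $5 correspond to parts[2], parts[3], parts[4].
    parts = path.strip().split("/")
    year, month, slug = parts[2:5]
    return "{}/{}/{}/{}/".format(BASE_URL, year, month, slug)


print(path_to_url("../site/2014/11/three-prices/index.html"))
```

The off-by-one between awk's field numbers and Python's list indices is the only real difference between the two versions.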

With this working, it was easy to beef up the awk command to include the surrounding XML tags:

ls ../site/*/*/*/index.html | awk -F/ '{printf "<url>\n <loc>http://www.leancrew.com/all-this/%s/%s/%s/</loc>\n</url>\n", $3, $4, $5}'

This gave me lines like this:

<url>
 <loc>http://www.leancrew.com/all-this/2014/11/more-rss-mess/</loc>
</url>
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/post-post-post/</loc>
</url>
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/snell-scripting/</loc>
</url>
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/the-rss-mess/</loc>
</url>
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/three-prices/</loc>
</url>

Now all I needed were a few opening and closing lines, and I’d have a sitemap file with all the required elements.

The awk commands were growing too unwieldy to maintain as a one-liner, so I put them in a script file and added the necessary parts to the beginning and the end.

#!/usr/bin/awk -F/ -f

# Runs once, before any input: XML header, opening tag, and the home page entry.
BEGIN {
  print "<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n<urlset xmlns=\"http://www.sitemaps.org/schemas/sitemap/0.9\">\n<url>\n <loc>http://www.leancrew.com/all-this/</loc>\n</url>"
}
# Runs for every input line: one <url> entry per post path.
{
  printf "<url>\n <loc>http://www.leancrew.com/all-this/%s/%s/%s/</loc>\n</url>\n", $3, $4, $5
}
# Runs once, after all input: closing tag.
END {
  print "</urlset>"
}

With this script, called buildSitemap, I could run

ls ../site/*/*/*/index.html | ./buildSitemap > ../site/sitemap.xml

and generate a sitemap.xml file in the site directory, ready to be uploaded to the server. The file looked like this:

<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
<url>
 <loc>http://www.leancrew.com/all-this/</loc>
</url>
.
.
.
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/the-rss-mess/</loc>
</url>
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/three-prices/</loc>
</url>
</urlset>
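A quick way to sanity-check a file like this is to parse it and list the URLs it contains. The following is a minimal Python 3 sketch using the standard library's ElementTree; the function name `sitemap_urls` and the `sample` text are illustrative assumptions, not part of the build system described here.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def sitemap_urls(xml_bytes):
    """Return the <loc> values from a sitemap, in document order."""
    # fromstring raises ET.ParseError if the XML is not well-formed.
    root = ET.fromstring(xml_bytes)
    if root.tag != "{%s}urlset" % SITEMAP_NS:
        raise ValueError("root element is not a sitemap <urlset>")
    return [loc.text.strip() for loc in root.iter("{%s}loc" % SITEMAP_NS)]


# A shortened stand-in for the generated file (hypothetical sample data).
sample = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
<url>
 <loc>http://www.leancrew.com/all-this/</loc>
</url>
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/the-rss-mess/</loc>
</url>
<url>
 <loc>http://www.leancrew.com/all-this/2014/11/three-prices/</loc>
</url>
</urlset>
"""

for url in sitemap_urls(sample.encode("utf-8")):
    print(url)
```

This only checks that the file is well-formed and has the expected root element; it is not a full validation against the sitemap schema.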

This was the output I wanted, but the awk script looked ridiculous, and if I wanted to update the script to add <lastmod> elements to each URL, it was just going to get worse. I’m not good enough at awk to make a clean script that’s easy to maintain and improve. So I rewrote buildSitemap in Python:

#!/usr/bin/python

import sys

# Header, opening tag, and the home page entry.
print '''<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
<url>
<loc>http://www.leancrew.com/all-this/</loc>
</url>'''

# One <url> entry per path read from standard input.
for f in sys.stdin:
  parts = f.split('/')
  print '''<url>
<loc>http://www.leancrew.com/all-this/{}/{}/{}/</loc>
</url>'''.format(*parts[2:5])

print '</urlset>'

It’s a little longer, but for me it’s much easier to understand at a glance. In fact, it was fairly easy to figure out how to add <lastmod> elements:

 1:  #!/usr/bin/python
 2:
 3:  import sys
 4:  import os.path
 5:  import time
 6:
 7:  lastmod = time.strftime('%Y-%m-%d', time.localtime())
 8:  print '''<?xml version="1.0" encoding="UTF-8"?>
 9:  <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
10:  <url>
11:  <loc>http://www.leancrew.com/all-this/</loc>
12:  <lastmod>{}</lastmod>
13:  </url>'''.format(lastmod)
14:
15:  for f in sys.stdin:
16:    parts = f.split('/')
17:    mtime = time.localtime(os.path.getmtime(f.rstrip()))
18:    lastmod = time.strftime('%Y-%m-%d', mtime)
19:    print '''<url>
20:  <loc>http://www.leancrew.com/all-this/{1}/{2}/{3}/</loc>
21:  <lastmod>{0}</lastmod>
22:  </url>'''.format(lastmod, *parts[2:5])
23:
24:  print '</urlset>'

The rstrip() in Line 17 gets rid of the trailing newline of f, and os.path.getmtime returns the modification time of the file in seconds since the Unix epoch. This is converted to a struct_time by time.localtime() and to a formatted date string in Line 18 by time.strftime(). The same idea is used in Line 7 to get the datestamp for the home page, but I cheat a bit by just using the current time, which is what time.localtime() returns when it’s given no argument.
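The date handling can be pulled out and tried on its own. This is a minimal Python 3 sketch of the same three calls; the function names `lastmod_for` and `lastmod_now` are hypothetical and chosen only for this example.

```python
import os.path
import time


def lastmod_for(path):
    """Return the file's modification date as 'YYYY-MM-DD' in local time."""
    seconds = os.path.getmtime(path)      # float: seconds since the Unix epoch
    as_struct = time.localtime(seconds)   # struct_time in the local time zone
    return time.strftime("%Y-%m-%d", as_struct)


def lastmod_now():
    """Return today's date; time.localtime() with no argument uses the current time."""
    return time.strftime("%Y-%m-%d", time.localtime())


# The current directory always exists, so it serves as a stand-in for a post file.
print(lastmod_for("."))
print(lastmod_now())
```

Because the date is formatted in local time, a file modified near midnight can get a different datestamp on machines in different time zones.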

Now the same pipeline,

ls ../site/*/*/*/index.html | ./buildSitemap > ../site/sitemap.xml

gives me a sitemap with more information, built from a script that’s easier to read. I’ve added this line to one of the Makefile recipes in bin. When I add or update a post, the sitemap gets built and is then mirrored to the server.
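As an alternative to the ls pipeline, the file listing could be done inside the script itself. The sketch below is a Python 3 variant under that assumption; it is not the script used here. It finds the posts with `glob`, and it also runs each URL through `xml.sax.saxutils.escape`, since the sitemap protocol requires characters like `&` to be entity-escaped. The names `url_entry` and `build_sitemap` are invented for the example.

```python
import glob
import os.path
import time
from xml.sax.saxutils import escape

BASE_URL = "http://www.leancrew.com/all-this"  # prefix from the scripts above


def url_entry(loc, lastmod):
    """Format one <url> element; escape() handles &, <, and > in the URL."""
    return "<url>\n<loc>{}</loc>\n<lastmod>{}</lastmod>\n</url>".format(
        escape(loc), lastmod)


def build_sitemap(site_dir):
    """Return sitemap XML for every post at site_dir/YYYY/MM/slug/index.html."""
    today = time.strftime("%Y-%m-%d", time.localtime())
    lines = ['<?xml version="1.0" encoding="UTF-8"?>',
             '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
             url_entry(BASE_URL + "/", today)]
    pattern = os.path.join(site_dir, "*", "*", "*", "index.html")
    for path in sorted(glob.glob(pattern)):
        # Path relative to the site root, e.g. '2014/11/three-prices/index.html'.
        rel = os.path.relpath(path, site_dir)
        year, month, slug = rel.split(os.sep)[:3]
        mtime = time.localtime(os.path.getmtime(path))
        loc = "{}/{}/{}/{}/".format(BASE_URL, year, month, slug)
        lines.append(url_entry(loc, time.strftime("%Y-%m-%d", mtime)))
    lines.append("</urlset>")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Assumes the script is run from the bin directory, as in the post.
    print(build_sitemap("../site"), end="")
```

The trade-off is that the script now knows about the directory layout, whereas the pipeline version keeps that knowledge in the ls command and leaves the script as a pure filter.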

You could argue that the time I spent using awk was wasted, but I don’t see it that way. It was a quick way to get preliminary results directly from the command line, and in doing so I learned what I needed in the final script. If I were to do it over again, I’d still use awk in the early stages, but I’d shift to Python one step earlier. The awk script was a mistake, and I should have recognized that as soon as the BEGIN clause got unwieldy.

Update 11/21/14 10:29 PM

As Conrad O’Connell pointed out on Twitter,

@drdrang the sitemap file doesn’t do you much good unless you add it to your robots.txt file

Re: leancrew.com/all-this/2014/…

— Conrad O’Connell (@conradoconnell) Nov 21 2014 5:13 PM

you do need to let the search engines know that you have a sitemap and where it is. The various ways you can do that are given on the sitemaps.org protocol page. I used this site to ping the search engines after validating my sitemap, but I agree with Conrad that adding a

Sitemap: http://path/to/sitemap.xml

line to the robots.txt file is probably a better idea because it doesn’t require you to know which search tools use sitemaps.
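If the robots.txt file is also generated or maintained by a script, adding the line can be automated. Here is a minimal Python 3 sketch, assuming the file's contents are already in a string; the function name `ensure_sitemap_line` is hypothetical, and the URL is the same placeholder used above.

```python
def ensure_sitemap_line(robots_txt, sitemap_url):
    """Return robots.txt text with a 'Sitemap:' line for sitemap_url, added if missing."""
    for line in robots_txt.splitlines():
        # Split on the first colon only, so the colons in the URL stay in the value.
        field, _, value = line.partition(":")
        if field.strip().lower() == "sitemap" and value.strip() == sitemap_url:
            return robots_txt  # already declared; leave the text untouched
    if robots_txt and not robots_txt.endswith("\n"):
        robots_txt += "\n"
    return robots_txt + "Sitemap: " + sitemap_url + "\n"


# Example robots.txt content (made up for illustration).
existing = "User-agent: *\nDisallow:\n"
print(ensure_sitemap_line(existing, "http://path/to/sitemap.xml"), end="")
```

Running the function a second time on its own output leaves the text unchanged, so it is safe to call on every build.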