A little data munging

July 24, 2016 at 8:45 PM by Dr. Drang

For the past couple of weeks, I’ve been doing analyses of other people’s data. Usually, the data comes in the form of a spreadsheet, which works out fine. I don’t like using spreadsheets myself, but it’s easy to extract the data and put it in a CSV or TSV file, which I can then manipulate in Python.

Sometimes, though, what I get is a PDF of someone else’s report, with the data in one or more tables. This is more challenging. If the PDF was generated directly from the writer’s word processor, the text in the tables is selectable, and I can copy and paste it into a plain text document. Unfortunately, the columns often aren’t delimited the way I’d like.

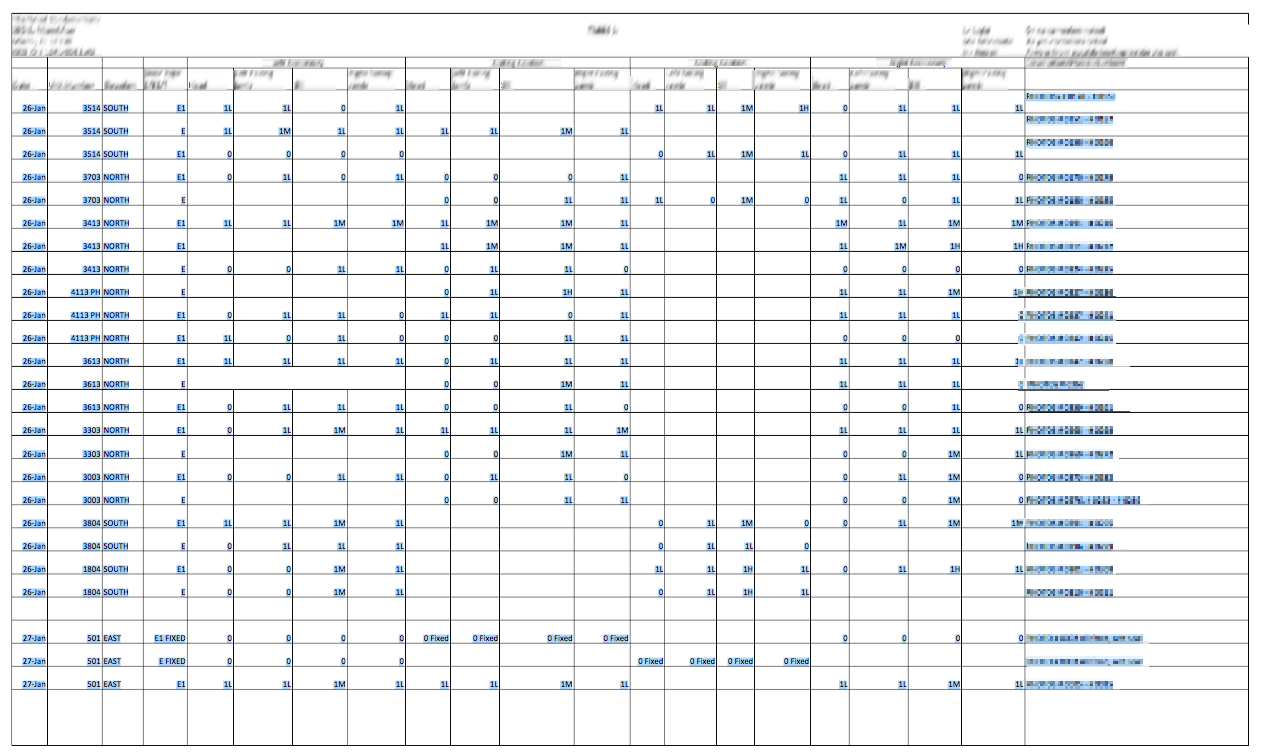

Here’s part of a multipage table I had to analyze. It has 21 columns and, after combining all the pages, about 100 rows. I opened the PDF in Preview, selected the text, and copied it.

I crossed my fingers and pasted it into a new BBEdit document. The columns were lost entirely; every table cell appeared on its own line. But luckily there was order to it, as the data came in row by row. In other words, something like this

| Col 1 | Col 2 | Col 3 | Col 4 | Col 5 |

|---|---|---|---|---|

| a | b | c | d | e |

| f | g | h | i | j |

| k | l | m | n | o |

was turned into this (I didn’t select the column headings because I didn’t like the names given in the table).

a

b

c

d

e

f

g

h

i

j

k

l

m

n

o

At least it would have turned into that if it weren’t for the missing data. If you look back at the image of the table, you’ll see that lots of cells are empty. What that meant was the table looked more like this

| Col 1 | Col 2 | Col 3 | Col 4 | Col 5 |

|---|---|---|---|---|

| a | b | e | ||

| g | h | |||

| k | n | o |

and turned into this after copying and pasting into BBEdit.

a

b

e

g

h

k

n

o

I couldn’t think of an automated way to add the missing lines, so I did it by hand, adding empty lines to the BBEdit document where needed.

a

b

e

g

h

k

n

o

While I’m pretty sure pandas has a way of reading a file like this and putting it into a data frame, I’m not fluent enough in pandas to know how to do it without poring over the documentation. And because I’d just spent a good chunk of time adding blank lines to the file, I wanted to get the analysis going right away. So I wrote this little script to read in the text file and spit out a 21-column TSV.

python:

1: #!/usr/bin/env python

2:

3: from sys import stdout

4:

5: with open('table.txt') as f:

6: a = f.readlines()

7:

8: for i, b in enumerate(a):

9: if (i + 1) % 21 > 0:

10: stdout.write(b.replace('\n', '\t'))

11: else:

12: stdout.write(b)

The rows are 21 items long, so we want to turn the line feeds into tab characters except every 21st one. The only tricky part is recognizing that the array of lines, a, is 0-indexed, and the modulo operator used to determine where a row stops must be applied to line numbers that start with 1, not 0. Hence the (i + 1) % 21 in Line 9. After running the script, I added a row of column names to the top of the file and had a nice TSV file for importing.

This script is, I realize, both longer than necessary and uses more memory than necessary. Maybe I should’ve used a Perl one-liner:

perl -ne 'chomp; if ($.%21) {print "$_\t"} else {print "$_\n"}' table.txt

This doesn’t slurp in the entire file, and because the line number variable, $., starts counting at 1, there’s no off-by-one trickiness to worry about. An awk one-liner would be similar.

But I wasn’t in a Perl or awk frame of mind when I did this. I’d been working in Python, so that’s what I used. And there are better ways of writing this in Python, too. As I sit here drinking tea and not trying to finish a paying job, I imagine the fileinput module would be useful.

python:

1: #!/usr/bin/env python

2:

3: from fileinput import input, lineno

4: from sys import stdout, argv

5:

6: columns = int(argv[1])

7: del argv[1]

8: for line in input():

9: if lineno() % columns:

10: stdout.write(line.replace('\n', '\t'))

11: else:

12: stdout.write(line)

That gets rid of both the slurping and the off-by-one correction. And it doesn’t have the name of the file or the number of columns in the table hard-wired into the code. It can be called this way:

shape-table 21 table.txt

Now I have something I can use again the next time I need to extract a table of data from a PDF. Which pretty much guarantees I’ll never run into this kind of problem again.