Building a comic book inventory

December 30, 2018 at 8:16 AM by Dr. Drang

For a couple of years in the mid-70s, I collected comic books. This sounds more organized than it was. What I really did was buy and read comic books, which I then stacked up on bookshelves or other flat surfaces. In the late 70s, when I went away to college, I bought the then-obligatory stereo system with big speakers that came in boxes, 12″×14″×25″ high—the perfect size for storing my comics. They all went into a single box that I have managed to maintain and keep with me for the past 40 years.

In the mid-80s, I bought a computer, and my thoughts turned to making a database of my comics. Wouldn’t it be nice, I asked myself, to have not simply a list of titles and issue numbers—which I had written on sheets of paper or index cards—but a real comprehensive catalog of artists, writers, and storylines? It’s a question I’ve continued to ask myself off and on over the ensuing 30+ years. The answer, of course, was yes, that would be nice; but turning that answer into a database required an effort I was never able to muster. Until this past week, when I took advantage of the usual holiday slowdown at work to sit my butt down at my computer and build—at the age of 58—a database of my teenaged purchases.

As you might expect, my work consisted mostly of writing little scripts that collected, organized, and assembled the data. I’ll describe those scripts in a later post, but here I want to talk in more general terms about the overall process, the obstacles I encountered, and the dead-end paths I traveled down.

First, let’s talk about the size and nature of my collection. Back when one of the Guardians of the Galaxy movies came out, I had pulled several issues of Defenders and Marvel Presents1 out of the box, and they were still stacked on a bookshelf. Measuring the height of that stack led me to guess that the density of the box was about 10–12 issues per inch of height. So maybe 250–300 comic books in my collection. This turned out to be a significant underestimate, probably because the books in the box are squeezed more tightly together by the weight of the overburden.2

As for the nature of my collection, it’s basically all Marvel. I knew I had a handful (four, as it turned out) of non-Marvel titles, including a Jack Kirby Sandman, but my database wouldn’t need too much adjustment if I initially treated it as 100% Marvel. So my initial plan was to work through my collection and use the Marvel Developer Portal to gather all the publication information and credits for each issue through the Marvel API.

Marvel has an interactive API tester with documentation that lets you try out calls to collect info on series, creators, series, and individual comics. Although I intended to write scripts to do all the searching, I started off using the interactive tester to make sure I understood the API. With my issues of Marvel Presents at my side, I started exploring.

And got nothing. Every search I tried returned an empty JSON structure. This is not uncommon when trying out a new API, so I figured the problem was with me and kept at it. Eventually, I tried searching for things other than Marvel Presents, and when I got hits on those searches, the unfortunate truth dawned on me: the Marvel database is missing lots of issues, at least from “my” era.

To confirm this, I wrote a short script to get the titles and issue numbers for everything Marvel published over a three-month period in 1975. There was plenty there—around 20 issues per month—but there was plenty missing, too. No Marvel Presents, which is why I’d come up empty in my first searches; no Marvel Treasury Editions, which were oversized books that sold for the princely sum of $1.50 each; fewer Giant-Sizes and Annuals than I remember; and no reprint titles, like Marvel Tales and Marvel’s Greatest Comics, either. The upshot of this exploratory work was that I had to abandon the idea of using the Marvel API and look elsewhere.

Which was unfortunate, because the Marvel’s documentation and API seemed pretty decent. Especially when compared with the next API I considered, Comic Vine. In a nutshell, the Comic Vine API may be well-designed and have a great database behind it, but its documentation blows. No narrative description of the purpose of the calls, no explanation of the types of values the parameters can take, and no examples so you can figure these things out for yourself. I suppose I could have spent some time trying out the calls and reverse engineering their purpose, but I was so put off by the poor excuse for documentation that I decided not to give it any more of my time.

The next stop on my API odyssey was the Grand Comics Database, which has the spectacular comics.org domain name. The GCD is crowd-sourced, and it is an amazingly deep resource. It is everything internet utopians in the 90s thought the web would become.

Unfortunately, internet utopians in the 90s didn’t think about APIs. Although the GCD is backed by a database,3 there’s no API to access it. You can, however, get a CSV file for each issue by GETting a URL of the form

https://www.comics.org/issue/<issue ID>/export_issue_csv/

where the issue ID is a unique, non-obvious number for each issue (it’s probably the primary key for the database’s issues table). And while it may seem as though getting the issue ID for each one of hundreds of comics is too much work, there is a shortcut. The GCD has pages for entire series, like this one for Marvel Presents, which include links to each individual issue. Within those links are the issue IDs. So the problem was reduced to visiting and scraping somewhere between 75 and 100 series pages. Still a fair amount of work, but each series took only a minute or two with the help of a Python scraping script tied to a Keyboard Maestro macro. (Did I mention I took some time off work during the holidays?) The result of this work was a file, GCD.csv, in which each line had the series title, issue number, and issue ID for a particular issue. The top several lines look like this:

series,number,id

Adventures on the Planet of the Apes,2,29212

Adventures on the Planet of the Apes,3,29308

Adventures on the Planet of the Apes,4,29541

Amazing Adventures,31,28767

Amazing Adventures,32,28993

Amazing Adventures,33,29213

Amazing Adventures,34,29455

Amazing Adventures,35,29638

This file was the input for another script that downloaded the CSV data for all my comics. For each issue, the CSV data consists of one line of data for the issue itself (issue number, issue date, publisher, price, page count, editor, etc.) and two or more lines for the contents of the issue. One of these content lines is for the cover (title, penciller, inker, etc.) and the rest are for the stories, letters pages, and ads within. So I wrote a script that downloaded the CSV and separated out the lines into three files: issues.csv, covers.csv, and stories.csv. I did my best to filter out the letters pages and ads.

At this point, I had enough to build a database with three tables: issues, covers, and stories. But the main attraction of comic books is their artwork, and I wanted my database to include cover images for each issue, if possible.

And it is possible, because the GCD includes cover scans. I just needed to write another web scraping script that used the issue ID from GCD.csv to find and download JPEGs for every cover in my collection. When this script was finished, I had a folder full of JPEG files, each one named for its corresponding issue ID.

With the hard part done and all the data collected, it was time to import it all into a database. My first choice was Airtable. I’ve been using it enough recently to feel comfortable with it, and it’s cloud-based, so I can access my data from any device. Why it might suddenly be necessary for me to know the inker for issue 26 of Luke Cage, Power Man I cannot tell you; but I can tell you it was Vince Colletta.

The importing of the three tables went smoothly, as did the linking of the covers and stories tables to the issues table. And it wasn’t hard to use the Airtable API to upload the cover images into the covers table. Everything was looking good until I opened the Airtable app on my phone some time after I thought I was done and noticed a red badge on top of the icon for my comic book database. Clicking the badge popped up a warning that I had exceeded Airtable’s records limit for a single database with a free account.

Remember when I said my reckoning of 250–300 comics was a significant underestimate? This is where it became significant. I actually have over 500 comics, which meant over 500 records in the issues table, the same number in the covers table, and over 900 records in the stories table. Airtable bases its record count on the sum of all the records in all the tables of a database, and the limit for a free account is 1200 records. I was way over the limit.4

I like Airtable, and I’d be willing to pay $120/year for serious use of it, but not for my comic books database. So I needed to back out of this dead end and find a new database solution.

The natural choice was FileMaker. I already have FileMaker for work—it’s run the company’s time and billing system for over 20 years—and there’s a mobile version, FileMaker Go. I needed to make a few changes in the CSV files and the JPEGs to make them compatible with FileMaker:

- FileMaker apparently can’t parse dates in

yyyy-mm-ddform (which is stupid), so I had to change the issue publication dates from that format tomm/dd/yyyy. - FileMaker wouldn’t parse cover prices like

0.25 USDas numbers, so I had to delete the non-numeric characters from that field. - FileMaker seems to honor the dpi settings in JPEG files. For reasons I cannot fathom, some of the cover images I had downloaded from the GCD had very high dpi settings, which made them appear tiny in FileMaker. I fixed that with a

sipscommand to set the dpi on all the JPEGs to 96.

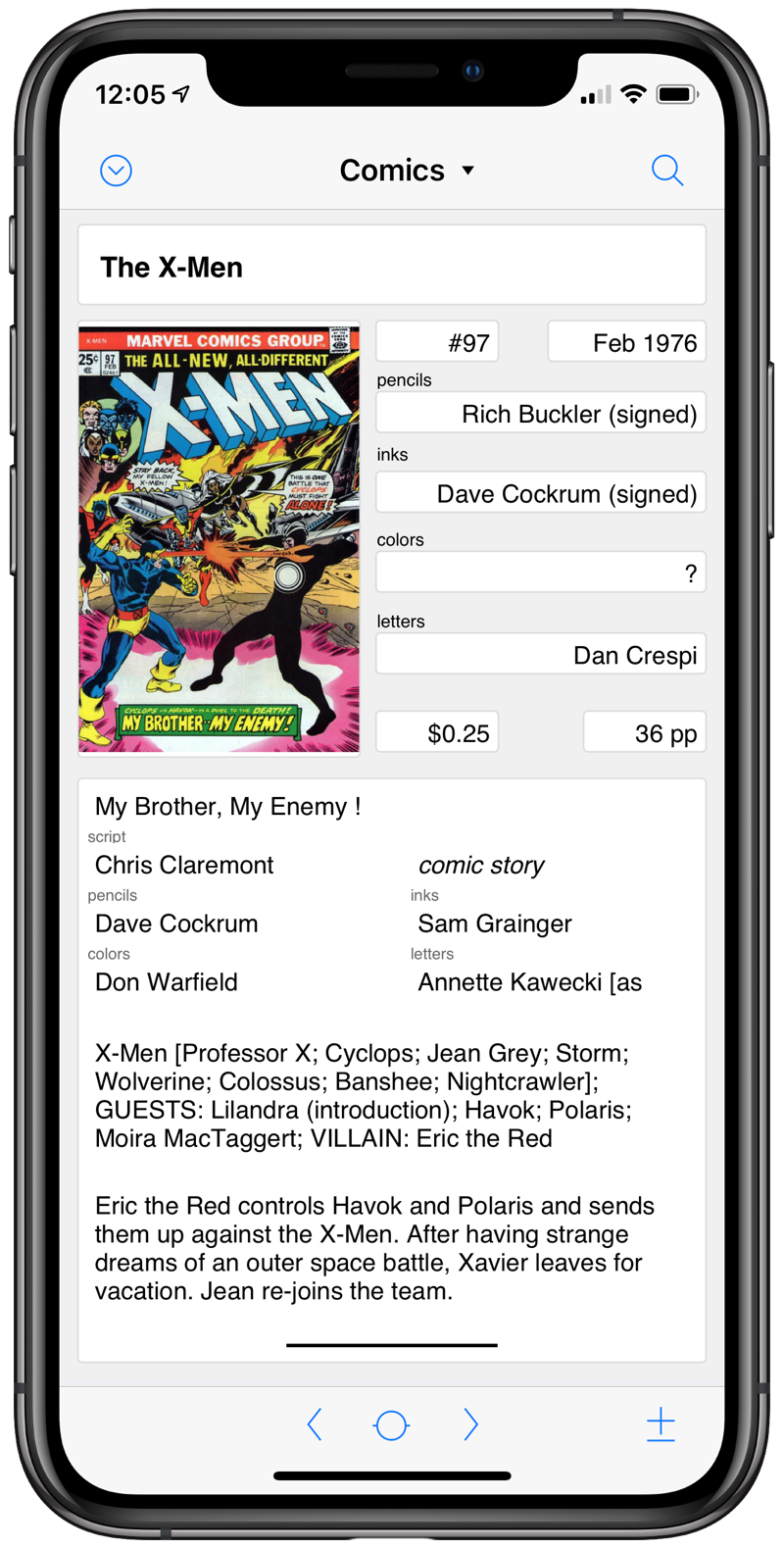

I also had to design layouts for displaying the records. This was time-consuming, as I couldn’t stop tweaking the elements’ sizes and position, but it does make for a better-looking result than I could get from Airtable.

So there’s the overview, complete with blind alleys and rabbit holes. Next time I’ll go through the scripts I wrote, ignoring all the stuff I had to throw away.

-

These were titles the Guardians appeared in when Steve Gerber and Sal Buscema resurrected them from a one-shot 1969 story by Arnold Drake and Gene Colan. I have a reprint of that story from 1975. ↩

-

That’s a foundation engineering term. My soil mechanics professors would be proud that I still remember it but disappointed that I didn’t consider it when making my estimate. ↩

-

Which you can download if you really want an enormous MySQL data dump of tens (hundreds?) of thousands of comic books. No thank you. ↩

-

Because there’s a one-to-one relationship between the issues and covers tables, I could have blended the two into a single table, eliminating over 500 records. That would get me closer, but I’d still be over the limit. ↩