Checking it twice

August 29, 2021 at 11:53 AM by Dr. Drang

Comparing lists is something I have to do fairly often in my work. There are lots of ways to do it, and the techniques I’ve used have evolved considerably over the years. This post is about where I’ve been and where I am now.

First, let’s talk about the kinds of lists I deal with. They are typically sets of alphanumeric strings—serial numbers of products would be a good example. The reason I have two lists that need to be compared varies, but a common example would be one list of devices that were supposed to be collected from inventory and tested and another list of devices that actually were tested. This latter list is usually part of a larger data set that includes the test results. Before I get into analyzing the test results, I have to see whether all the devices from the first list were tested and whether some of the devices that were tested weren’t on the first list.

In the old days, the lists came on paper, and the comparison consisted of me sitting at my desk with a highlighter pen, marking off the items in each list as I found them. This is still a pretty good way of doing things when the lists are small. By having your eyes go over every item in each list, you get a good sense of the data you’ll be dealing with later.

But when the lists come to me in electronic form, the “by hand” method is less appealing, especially when the lists are dozens or hundreds of items long. This is where software tools come into play.

The first step is data cleaning, a topic I don’t want to get into in this post other than to say that the goal is to get two files, which we’ll call listA.txt and listB.txt. Each file has one item per line. How you get the lists into this state depends on the form they took when you received them, but it typically involves copying, pasting, searching, and replacing. This is where you develop your strongest love/hate relationships with spreadsheets and regular expressions.

Let’s say these are the contents of your two files: three-digit serial numbers. I’m showing them in parallel to save space in the post, but they are two separate files.

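| listA.txt | listB.txt |
|-----------|-----------|
| 115 | 114 |
| 119 | 115 |
| 105 | 106 |
| 101 | 119 |
| 113 | 105 |
| 116 | 125 |
| 102 | 114 |
| 106 | 120 |
| 114 | 101 |
| 108 | 117 |
| 120 | 113 |
| 103 | 111 |
| 107 | 112 |
| 109 | 123 |
| 118 | 116 |
| 112 | 107 |
| 110 | 118 |
| 104 | 114 |
|     | 102 |
|     | 105 |
|     | 110 |
|     | 121 |
|     | 122 |
|     | 109 |
|     | 104 |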

You’ll note that neither of these lists is sorted. Or at least they’re not sorted in any obvious way. They may be sorted by the date on which the device was tested, and that might be important in your later analysis, so it’s a good idea, as you go through the data cleanup, to preserve the ordering of the data as you received it.

At this stage, I typically look at each list separately and see if there’s anything weird about it. One of the most common weirdnesses is duplication, which can be found through this simple pipeline:

sort listB.txt | uniq -c | sort -r | head

The result for our data is

3 114

2 105

1 125

1 123

1 122

1 121

1 120

1 119

1 118

1 117

What this tells us is that item 114 is in list B three times, item 105 is in list B twice, and all the others are there just once. At this point, some investigation is needed. Were there actually three tests run on item 114? Did someone mistype the serial number? Was the data I was given concatenated from several redundant sources and not cleaned up before sending it to me?

The workings of the pipeline are pretty simple. The first sort alphabetizes the list, which puts all the duplicate lines adjacent to one another. The uniq command prints the list without the repeated lines, and its -c (“count”) option adds a prefix of the number of times each line appears. The second sort sorts this augmented list in reverse (-r) order, so the lines that are repeated appear at the top. Finally, head prints just the top ten lines of this last sorted list. Although head can be given an option that changes the number of lines that are printed, I seldom need to use that option, as my lists tend to have only a handful of duplicates at most.
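When a list is long, it can help to print only the repeated items rather than the top ten counts. The sketch below is one hedged way to do that with standard tools. The scratch directory from mktemp and the variable names are assumptions made for illustration, and the data is list B from above.

```shell
# Recreate list B in a scratch directory (location is illustrative only).
workdir=$(mktemp -d)
printf '%s\n' 114 115 106 119 105 125 114 120 101 117 113 111 112 \
    123 116 107 118 114 102 105 110 121 122 109 104 > "$workdir/listB.txt"

# uniq -d prints one copy of each line that occurs more than once.
dupes=$(sort "$workdir/listB.txt" | uniq -d)
echo "$dupes"

# Same idea, but keeping the counts: awk passes only rows whose count exceeds 1.
# sort -rn compares the counts as numbers rather than as text.
dupe_counts=$(sort "$workdir/listB.txt" | uniq -c | sort -rn | awk '$1 > 1 {print $1, $2}')
echo "$dupe_counts"
```

Here sort -rn is swapped in for the sort -r used above so that a count of 10 would rank above a count of 9. For lists with only a handful of duplicates the two behave the same.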

Let’s say we’ve figured out why list B had those duplicates. And we also learned that list A has no duplicates. Now it’s time to compare the two lists. I used to do this by making sorted versions of each list,

sort listA.txt > listA-sorted.txt

sort -u listB.txt > listB-sorted.txt

and then compared the -sorted files.[1] This worked, but it led to cluttered folders with files that were only used once. More recently, I’ve avoided the clutter by using process substitution, a feature of the bash shell (and zsh) that’s kind of like a pipeline but can be used when you need files instead of a stream of data. We’ll see how this works later.
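As a quick sanity check on the sorting step, here is a minimal sketch that builds the sorted version of list B and confirms that sort -u produces the same result as sort piped through uniq. The scratch directory and variable names are assumptions for illustration and not part of the workflow described above.

```shell
# Recreate list B in a scratch directory (location is illustrative only).
workdir=$(mktemp -d)
printf '%s\n' 114 115 106 119 105 125 114 120 101 117 113 111 112 \
    123 116 107 118 114 102 105 110 121 122 109 104 > "$workdir/listB.txt"

# Sort and drop duplicates in one step.
sort -u "$workdir/listB.txt" > "$workdir/listB-sorted.txt"

# cmp -s is silent and exits 0 only when its two inputs are identical;
# the "-" argument makes it read the first input from the pipeline.
if sort "$workdir/listB.txt" | uniq | cmp -s - "$workdir/listB-sorted.txt"; then
    same=yes
else
    same=no
fi

# Arithmetic expansion strips any padding that wc puts around the number.
total=$(( $(wc -l < "$workdir/listB.txt") ))
unique=$(( $(wc -l < "$workdir/listB-sorted.txt") ))
echo "same=$same total=$total unique=$unique"
```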

There are two main ways to compare files in Unix: diff and comm. diff is the more programmery way of comparison. It tells you not only what the differences are between the files, but also how to edit one file to make it the same as the other. That’s useful, and we certainly could use diff to do our comparison, but it’s both more verbose and more cryptic than we need.

The comm command takes two arguments, the files we want to compare. By default, it prints three columns of output: the first column consists of lines in the first file that don’t appear in the second; the second column consists of lines that appear in the second file but not in the first; and the third column is the lines that appear in both files. Like this:

comm listA-sorted.txt listB-sorted.txt

gives output of

                    101
                    102
    103
                    104
                    105
                    106
                    107
    108
                    109
                    110
            111
                    112
                    113
                    114
                    115
                    116
            117
                    118
                    119
                    120
            121
            122
            123
            125

(The columns are separated by tab characters, but I’ve converted them to spaces here to make the output look more like what you’d see in the Terminal.)

This is typically more information than I want. In particular, the third column is redundant. If we know which items are only in list A and which items are only in list B, we know that all the others (usually the most numerous by far) are in both lists. We can suppress the printing of column 3 with the -3 option:

comm -3 listA-sorted.txt listB-sorted.txt

gives the more compact output

    103
    108
            111
            117
            121
            122
            123
            125

You can suppress the printing of the other columns with -1 and -2. So to get the items that are unique to list A, we do

comm -23 listA-sorted.txt listB-sorted.txt
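The three suppression flags combine to pick out any single column. The sketch below runs each combination on the sample data. The scratch directory and variable names are assumptions for illustration.

```shell
# Recreate both lists in a scratch directory (location is illustrative only).
workdir=$(mktemp -d)
printf '%s\n' 115 119 105 101 113 116 102 106 114 108 120 103 107 109 \
    118 112 110 104 > "$workdir/listA.txt"
printf '%s\n' 114 115 106 119 105 125 114 120 101 117 113 111 112 \
    123 116 107 118 114 102 105 110 121 122 109 104 > "$workdir/listB.txt"

sort "$workdir/listA.txt" > "$workdir/listA-sorted.txt"
sort -u "$workdir/listB.txt" > "$workdir/listB-sorted.txt"

# -23 hides columns 2 and 3, leaving the items found only in list A.
only_a=$(comm -23 "$workdir/listA-sorted.txt" "$workdir/listB-sorted.txt")

# -13 hides columns 1 and 3, leaving the items found only in list B.
only_b=$(comm -13 "$workdir/listA-sorted.txt" "$workdir/listB-sorted.txt")

# -12 hides columns 1 and 2, leaving the items common to both lists.
both_count=$(( $(comm -12 "$workdir/listA-sorted.txt" "$workdir/listB-sorted.txt" | wc -l) ))

echo "Only in A:"; echo "$only_a"
echo "Only in B:"; echo "$only_b"
echo "In both: $both_count items"
```

Because each of these prints a single column, the output has no leading tabs and can be piped straight into other tools.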

There are a couple of things I hate about comm:

- What’s up with that second m? This is a comparison program, not a communication program.

- The options are given in what my dad would call “yes, we have no bananas” form.[2] You don’t tell comm the columns you want, you tell it the columns you don’t want. Very intuitive.

I said earlier that I don’t like making -sorted files. Now that we see how comm works with files, we’ll switch to process substitution. Here’s how to get the items unique to each list without creating sorted files first:

comm -3 <(sort listA.txt) <(sort -u listB.txt)

What goes inside the <() construct is the command we’d use to create the sorted file. I like this syntax, as the less-than symbol is reminiscent of the symbol used for redirecting input.
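To make the mechanism a little more concrete, here is a hedged sketch that has to be run in bash or zsh, since plain sh has no process substitution. It prints the file name the shell substitutes for a <() construct, runs the comparison, and then checks that no -sorted files were left behind. The scratch directory and variable names are assumptions for illustration.

```shell
# Requires bash or zsh. Recreate both lists in a scratch directory
# (location is illustrative only).
workdir=$(mktemp -d)
printf '%s\n' 115 119 105 101 113 116 102 106 114 108 120 103 107 109 \
    118 112 110 104 > "$workdir/listA.txt"
printf '%s\n' 114 115 106 119 105 125 114 120 101 117 113 111 112 \
    123 116 107 118 114 102 105 110 121 122 109 104 > "$workdir/listB.txt"

# The shell replaces <(...) with a file name that comm can open.
# The exact name varies by system; it is often something like /dev/fd/63.
echo <(true)

# Compare the lists without writing any intermediate files.
diff_out=$(comm -3 <(sort "$workdir/listA.txt") <(sort -u "$workdir/listB.txt"))
echo "$diff_out"

# Only the two original files remain in the directory.
leftover=$(ls "$workdir")
echo "$leftover"
```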

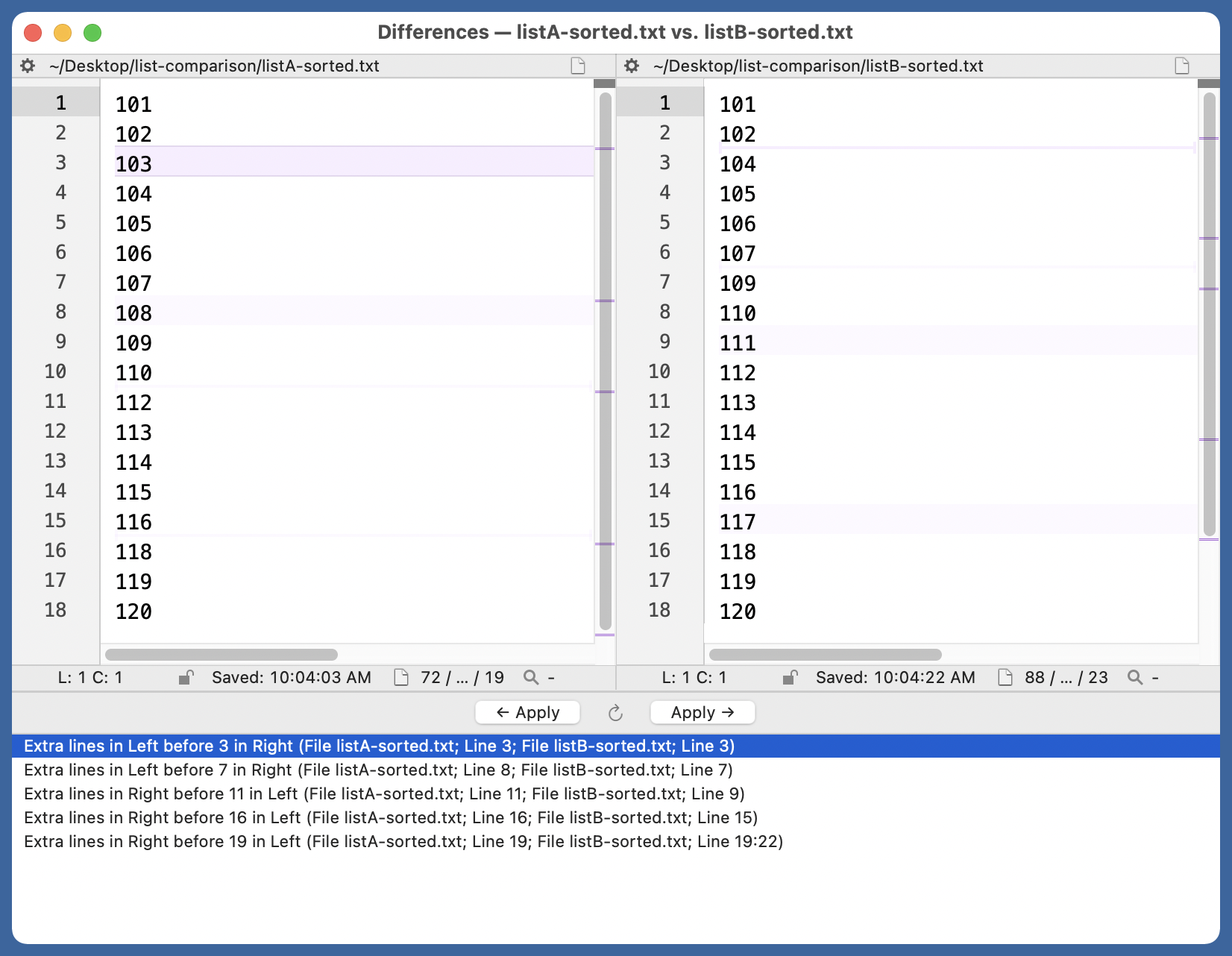

You may prefer using your text editor’s diffing system because it’s more visual. Here’s BBEdit’s difference window:

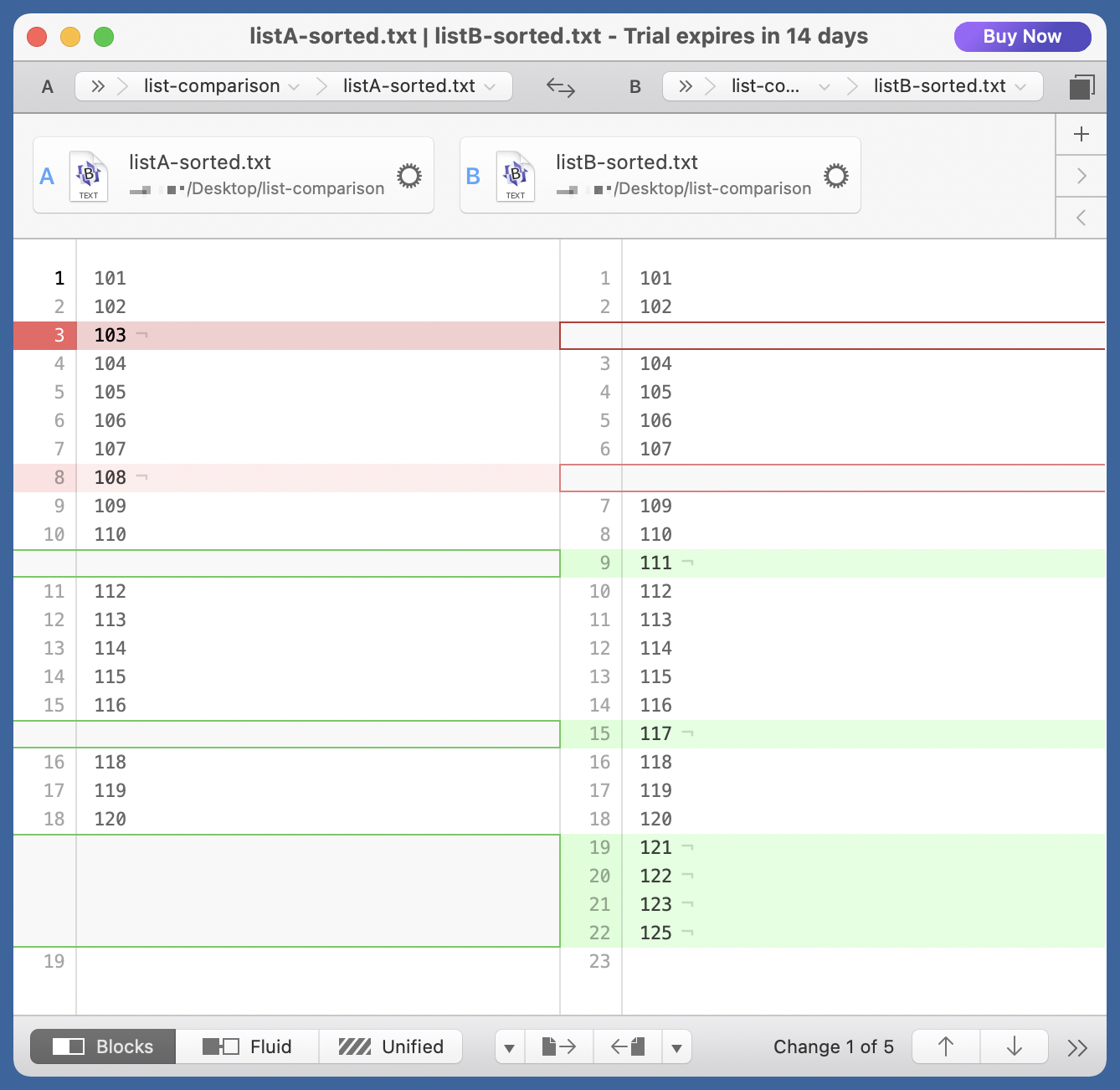

Here’s Kaleidoscope, which gets good reviews as a file comparison tool. I don’t own it but downloaded the free trial so I could get this screenshot.

These are both nice, but they don’t give you output you can paste directly into a report or email.

The following items were supposed to be tested, but there are no results for them:

[paste]

What happened?
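The paste step in a message like that one can be scripted as well. The following is a minimal sketch, assuming the same sample lists. The scratch directory and variable names are hypothetical, and the wording is just the example text above.

```shell
# Recreate both lists in a scratch directory (location is illustrative only).
workdir=$(mktemp -d)
printf '%s\n' 115 119 105 101 113 116 102 106 114 108 120 103 107 109 \
    118 112 110 104 > "$workdir/listA.txt"
printf '%s\n' 114 115 106 119 105 125 114 120 101 117 113 111 112 \
    123 116 107 118 114 102 105 110 121 122 109 104 > "$workdir/listB.txt"

sort "$workdir/listA.txt" > "$workdir/listA-sorted.txt"
sort -u "$workdir/listB.txt" > "$workdir/listB-sorted.txt"

# Items that were supposed to be tested (list A) but have no results (list B).
missing=$(comm -23 "$workdir/listA-sorted.txt" "$workdir/listB-sorted.txt")

# Wrap the list in the message text, with blank lines around it.
message=$(printf '%s\n\n%s\n\n%s\n' \
    "The following items were supposed to be tested, but there are no results for them:" \
    "$missing" \
    "What happened?")
echo "$message"
```

On a Mac, piping the final echo through pbcopy should put the message on the clipboard, ready to paste into an email.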

Update 08/31/2021 6:05 PM

Reader Geoff Tench emailed to tell me that comm is short for common, and he pointed to the first line of the man page:

comm — select or reject lines common to two files

This is what you see when you type man comm in the Terminal. The online man page I linked to, at ss64.com, is slightly different:

Compare two sorted files line by line. Output the lines that are common, plus the lines that are unique.

I focused on the compare and missed the common completely.

So comm is a good abbreviation, but it’s a good abbreviation of a poor description. By default, comm outputs three columns of text, and only one of those columns has the common lines. So comm is really more about unique lines than it is about common lines. Of course, uniq is taken, as are diff and cmp. And comp would probably be a bad name, given that cmp exists.

diff is such a good command name, I often use it by mistake when I really mean to use comm. Now that I’ve written this exegesis of comm, I’ll probably find it easier to remember. Thanks, Geoff!

1. The -u option to sort makes it act like sort <file> | uniq. It strips out the duplicates, which we don’t want in the file when doing our comparisons.

2. My father wasn’t a programmer, but it’s not unusual to be asked to provide negative information. Tax forms, for example: All income not included in Lines 5, 9, and 10e that weren’t in the years in which you weren’t a resident of the state.