Consecutive heads or tails

November 11, 2023 at 8:26 PM by Dr. Drang

Earlier today, I talked about sticking with a problem longer than I probably should because I can’t stop. Let’s apply that pathology to the Taskmaster coin flip subtask and think about a slightly different problem.1 Suppose we flip a fair coin and stop when we’ve flipped either five consecutive heads or five consecutive tails. What is the expected number of flips?

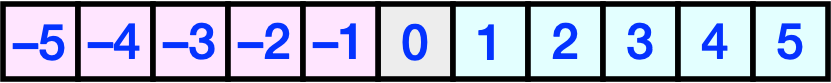

As we did with the simpler game, we can imagine this as a board game to help us configure the Markov chain transition matrix. Here, we treat consecutive heads as positives and consecutive tails as negatives.

Update 11 Nov 2023 11:12 PM

I screwed this up the first time through and should’ve seen the error before I published. The transformation matrices are correct now and give a result that makes more sense. Sorry about that.

We start with our marker on Square 0 and start flipping. A head moves us one square to the right; a tail moves us one square to the left. If we’re on a positive square, a tail takes to Square –1. If we’re on a negative square, a head takes us Square 1. The game ends when we reach either Square 5 or Square –5.

Here’s the game’s transformation matrix, where we’ve set the squares at the two ends of the board to be absorbing states:

The rows and columns represent the squares in numerical order from –5 to 5. The lower right half is similar to the transformation matrix we used in the earlier post, and the upper left half is sort of the upside-down mirror image of the lower right half. The main difference is that we never go to Square 0 after a flip; if the coin comes up opposite the run we were on, we go to the first square on the opposite side of Square 0.

As we’ve done in the past, we create a transformation matrix by eliminating the rows and columns associated with the absorbing states from the matrix:

Then we proceed as before, forming the matrix equation

where is the column vector of the expected number of flips to get to an absorbing state from Squares –4 through 4, and is a nine-element column vector of ones. Solving this2 we get

So the expected number of flips to get either five consecutive heads or five consecutive tails is , the value in the middle of this vector. This is half the value we got last time, which makes sense.

Am I done with Markov chains now? I hope so, but you never know.

-

By the way, you can now see the whole episode on YouTube. ↩

-

Which I did with Mathematica, but you could do with any number of programs. Excel, for example. ↩