A few text utilities

January 10, 2021 at 11:51 AM by Dr. Drang

If anything can stir me from a blogging lull, it’s a Jason Snell post about one of my favorite programs, BBEdit. And when responding to his post allows me to talk about Unix text processing utilities along with BBEdit, well, strap in.

Jason uses a particular example—collecting and counting listener survey responses to use on The Incomparable’s Family Feud-style Game Show episodes—to explain some of BBEdit’s powerful text filtering commands. He extracts, counts, sorts, and reformats, all within the friendly confines of BBEdit, where every step you take is both visible (to check if you’ve done it right) and reversible (in case you haven’t).

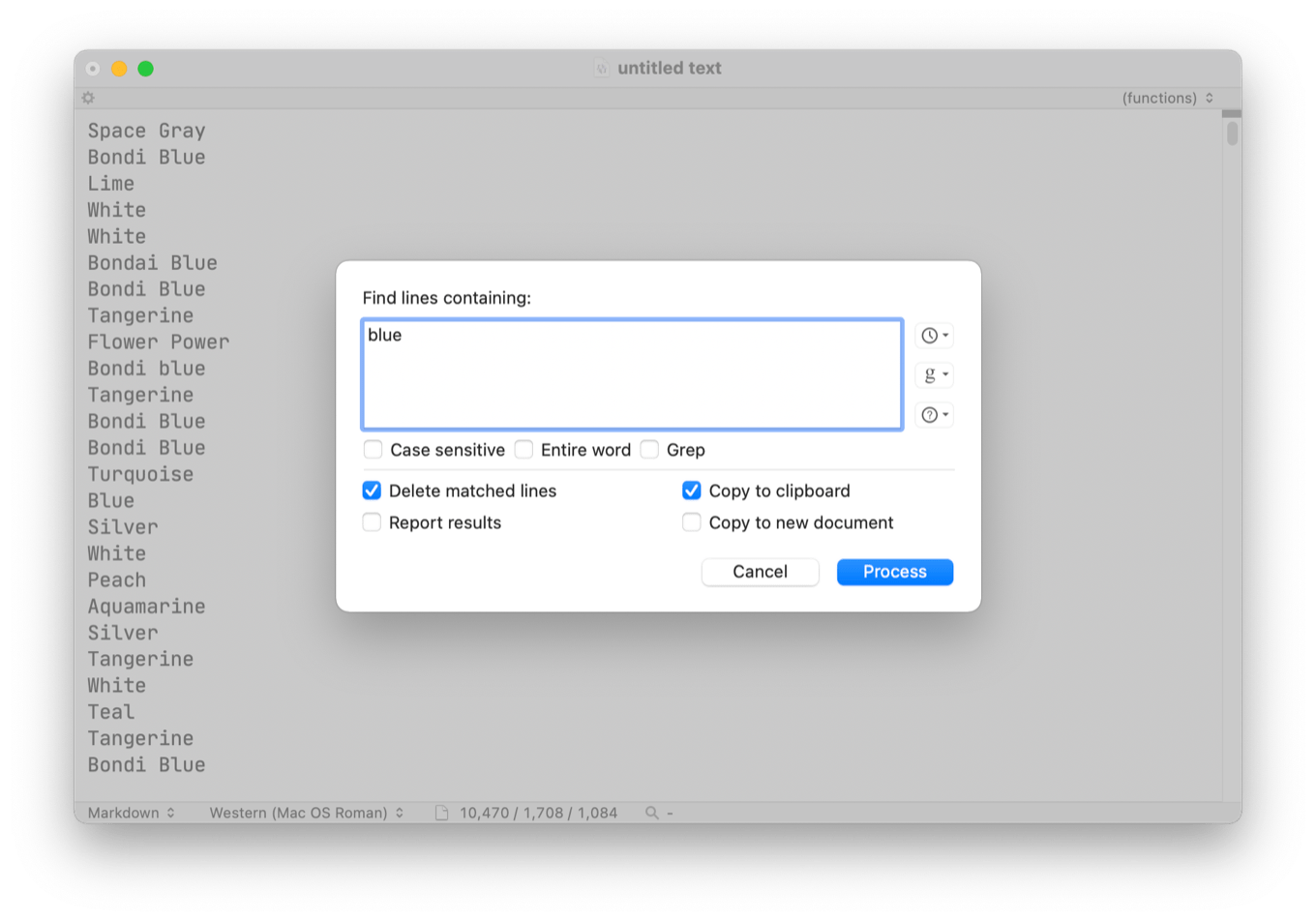

One of the cleverest things Jason does, which I think he undersells, is nibble away at the dataset as he processes it. In this screenshot

we see that “Delete matched lines” is checked. By deleting each set of entries as he finds them, he makes it easier to develop the criteria for finding the next set. And with “Copy to clipboard” checked, he hasn’t lost the entries he’s just found—they’re ready to be pasted into a new document for checking and counting. This nibbling technique is one I’ve never used but will keep in mind the next time I’m faced with this type of problem.

Most of the text analysis I do is, thankfully, easier than Jason’s. When I’m counting up lines of text based on certain criteria, I’m usually working with machine-made text: log files, EXIF data from a folder of photos, output from a data acquisition system. These files don’t have misspellings, typos, or inconsistent capitalization and can usually be processed in one fell swoop. My model for processing this kind of data is Doug McIlroy’s famous six-line shell script.

To make an example that parallels the data in Jason’s post, I generated a 100-line text file with random selections from the thirteen colors of the iMac G3. The first ten lines look like this:

Indigo

Blue Dalmation

Graphite

Snow

Bondi Blue

Graphite

Ruby

Indigo

Ruby

Graphite

I named the file cancolors.txt because all the colors are in their canonical form, with no deviations in spelling or capitalization.

Following McIlroy’s lead, I can count the entries and sort by popularity with this simple shell pipeline,

sort cancolors.txt | uniq -c | sort -nr

which gives me this output:

16 Bondi Blue

13 Tangerine

12 Graphite

11 Snow

11 Ruby

8 Indigo

7 Sage

5 Strawberry

5 Blueberry

4 Grape

3 Lime

3 Flower Power

2 Blue Dalmation

(When I said the colors were chosen randomly, I didn’t mean they were chosen randomly with the same probability. I’ll write a short post later about how I generated the list and weighted the entries.)

The first sort command reads the lines of cancolors.txt and sorts them in alphabetical order (without changing cancolors.txt itself). The sorted lines are piped to uniq, which reduces identical adjacent lines to single lines and prints them. The -c option tells uniq to precede each line with the number of times it was repeated.1 Finally, the second sort sorts the output of uniq, where the -n option tells it to sort numerically instead of alphabetically, and the -r option tells it to reverse the order.

Depending on what I need to do, I could use this output as is or process it further. If I wanted to pop it into two columns of a spreadsheet, as Jason does, I could either put the output into BBEdit and process it there through Find/Replace or just continue the shell pipeline:

sort cancolors.txt | uniq -c | sort -nr | perl -ple 's/^ +(\d+) (.+)/$2\t\$1' | pbcopy

The perl command gets rid of leading spaces, reverses the order of the number and the color, and puts a tab character between them. The pbcopy puts the result onto the clipboard, ready for pasting into a spreadsheet.

This is undoubtedly much faster than Jason’s method, but that’s only because the color names were in canonical form before I started. What if the dataset comes from humans instead of machines and contains inconsistencies?

I do often get that kind of data at work. Usually, these are inspection notes, where different inspectors—including me—use inconsistent terms and capitalization while reviewing or measuring different parts of a building or machine. In these cases, I usually correct the data before processing, creating a new input file with consistent spelling and capitalization that I can process as above. The tools I use to correct the data depend on the situation but usually include some combination of command-line utilities, BBEdit, and Numbers.

As an example, I’ve introduced random typos into the file of color names. This noncanonical file is called colors.txt and has lines like this:

Ruky

Ruby

Grapbitw

Grape

Bondi Blue

Bindi Blue

Ruby

Bondi Blue

Blueberry

Snof

If there are just a few typos, I can open this file in BBEdit, sort the lines, and scroll through it. When the file is sorted, typos are usually easy to find and fix. A way to see all the mistakes at once is to use this pipeline at the Terminal,

sort colors.txt | uniq

which will give an output like this:

Bindi Blue

Blfeberry

Blue Dalmation

Bluebehry

Blueberry

Bondi Blue

Flower Power

Gaaphite

Graie

Grapbitw

Grape

Graphite

Grsphite

Indigo

Lime

Rubv

Ruby

Ruky

Ruly

Sage

Snof

Snow

Strawbekry

Strawberry

Tangerane

Tangerine

Tanlerine

This reduces the correct spellings down to a single line and shows all the typos, usually adjacent to the correct spellings.

When there are more than a handful of typos, I usually copy all the sorted lines from BBEdit and paste them into an empty spreadsheet in Numbers for editing.2 The advantage of making the corrections in Numbers is that it’s easy to select several rows at once—including both correct and incorrect spellings—and enter the correct spelling with a single Paste. With the corrections made, I paste the text back into BBEdit.

I should mention that recent versions of BBEdit have made correcting several lines at once easier by allowing rectangular selection even when is turned on (my default). The and commands are also very helpful in bringing lines together for correction in a single step. Still, I tend to use Numbers when there are lots of corrections to make; it’s a longstanding habit.3

I save the corrected data to a new file and run the McIlroy-style pipeline on it. This is the kind of approach to a problem you learn in math class: reduce the new problem to one that’s already been solved. I don’t know if it ends up being faster than what Jason does, but it’s what I’m comfortable with.

-

The

-cmeans “count.” I can’t tell you how many times I’ve mistakenly written the command with an-n(for “number”) option. Fortunately, there is no-noption, and I get an error instead of an incorrect result. ↩ -

Excel would be fine for this, too, but since Excel takes a day and a half to open, I usually use Numbers. ↩

-

Bouncing back and forth between a text editor and a spreadsheet is hardly original with me. Although I didn’t learn it from them, John Gruber and Jason talked about doing this in an episode of The Talk Show a few years ago. I’d give you a link, but I couldn’t identify the show from the descriptions in the episode list. ↩